TL;DR:

- POYO-1 is an AI framework for decoding neural activity from large-scale neural recordings.

- Developed by researchers from Georgia Tech, Mila, Université de Montréal, and McGill University.

- Uses deep learning to capture the fine temporal structure of neural activity by tokenizing individual spikes.

- Employs cross-attention and a PerceiverIO backbone to represent neural events efficiently.

- Trained on data from seven nonhuman primates, comprising 27,000 neural units and more than 100 hours of recordings.

- Adapts rapidly to new sessions, delivering strong few-shot performance across a range of tasks.

- Because it is not tied to a single session or a fixed set of neurons, it can train across subjects and diverse data sources.

- Highlights the potential of transformers for neural data processing and provides an efficient implementation.

- Achieves strong neural decoding performance, reaching an R² of 0.8952 on the NLB-Maze dataset.

- Points toward comprehensive neural data analysis on a large scale.

Main AI News:

At the intersection of artificial intelligence and neuroscience comes POYO-1, a framework developed by researchers from Georgia Tech, Mila, Université de Montréal, and McGill University. It aims to set a new standard for decoding neural activity across extensive, diverse, large-scale neural recordings.

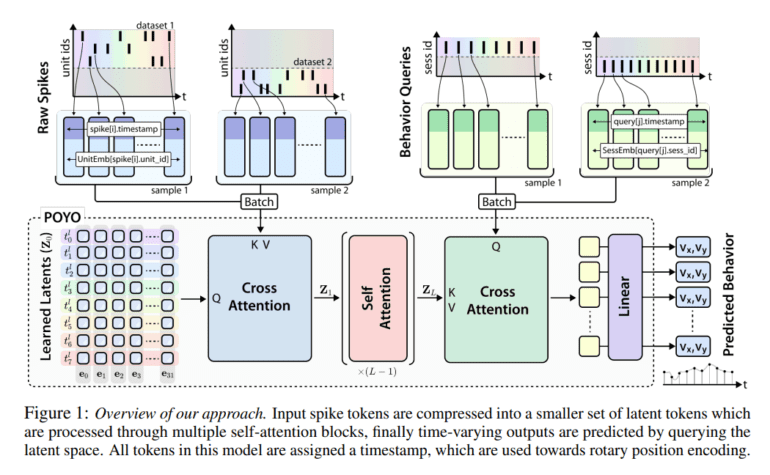

At its core, POYO-1 pairs a training framework with an architecture designed to work across recordings rather than within a single one. It uses deep learning to tokenize individual neural spikes, preserving the precise timing of neural activity. This is made possible by combining cross-attention mechanisms with a PerceiverIO backbone.
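To make spike tokenization concrete, here is a minimal Python sketch under stated assumptions: each spike becomes one token whose content is an embedding of the unit that fired and whose position is the spike's exact timestamp. The function `tokenize_spikes` and the random embedding table are hypothetical illustrations, not POYO-1's actual code.

```python
import numpy as np


def tokenize_spikes(spike_times, spike_units, unit_embeddings, window_start, window_end):
    """Turn every spike inside [window_start, window_end) into one token.

    spike_times:     1-D float array of spike timestamps (seconds).
    spike_units:     1-D int array; spike_units[i] is the unit that fired spike i.
    unit_embeddings: (num_units, dim) table of per-unit vectors (hypothetical;
                     in a trained model these would be learned).
    Returns (tokens, rel_times): one embedding row and one exact timestamp per spike.
    """
    spike_times = np.asarray(spike_times, dtype=float)
    spike_units = np.asarray(spike_units, dtype=int)
    in_window = (spike_times >= window_start) & (spike_times < window_end)
    times, units = spike_times[in_window], spike_units[in_window]
    order = np.argsort(times, kind="stable")  # put tokens in temporal order
    times, units = times[order], units[order]
    tokens = unit_embeddings[units]           # token content: which unit fired
    rel_times = times - window_start          # token position: exact time, no binning
    return tokens, rel_times


# Usage: four spikes from four units; the spike at 1.2 s falls outside a 1 s window.
rng = np.random.default_rng(0)
emb = rng.normal(size=(4, 8))
tokens, rel_times = tokenize_spikes([0.012, 0.003, 0.5, 1.2], [2, 0, 3, 1], emb, 0.0, 1.0)
```

Because nothing is binned, a window with more spikes simply yields more tokens; that variable-length input is what the cross-attention stage has to handle.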

But what truly sets POYO-1 apart is its scale. The model was trained on data from seven nonhuman primates, encompassing 27,000 neural units and more than 100 hours of neural recordings. The result is a large-scale, multi-session model that adapts readily to new sessions and achieves few-shot performance across a range of tasks. Beyond its results, POYO-1 offers a scalable approach to neural data analysis that could reshape the field.

As the paper describes, POYO-1 is not confined to a fixed session and a single set of neurons, as earlier models trained on one recording session are. It can train across subjects and combine data from multiple sources, making it a strong tool for modeling neural population dynamics. PerceiverIO and cross-attention layers let it represent neural events efficiently, enabling few-shot performance even on entirely new sessions. The work positions transformers as a promising foundation for neural data processing.
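The efficiency argument behind cross-attention can be sketched in a few lines. In this hypothetical NumPy example, a small, fixed set of latent vectors acts as queries over however many spike tokens fall in a window, so the output size stays constant and the cost grows with (latents × tokens) rather than (tokens × tokens). It is a single-head simplification of the PerceiverIO idea; the weight names and sizes are assumptions, and POYO-1's real layers are more elaborate.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(latents, tokens, w_q, w_k, w_v):
    """Single-head cross-attention in the PerceiverIO style.

    latents: (num_latents, dim) queries; tokens: (num_tokens, dim) keys/values.
    The output always has num_latents rows, however many spike tokens arrive.
    """
    q = latents @ w_q
    k = tokens @ w_k
    v = tokens @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (num_latents, num_tokens)
    return softmax(scores, axis=-1) @ v       # (num_latents, dim)


rng = np.random.default_rng(0)
dim, num_latents = 8, 4
w_q, w_k, w_v = (rng.normal(size=(dim, dim)) for _ in range(3))
latents = rng.normal(size=(num_latents, dim))
for num_spikes in (10, 250):  # a quiet window and a busy window
    spikes = rng.normal(size=(num_spikes, dim))
    print(num_spikes, cross_attention(latents, spikes, w_q, w_k, w_v).shape)
```

Downstream layers can then operate on the compact latent array instead of on every spike.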

In the broader context of machine learning, the impact of large pretrained models like GPT is hard to ignore. Neuroscience has a clear need for a foundation model that can bridge diverse datasets, experiments, and subjects. POYO is designed to train efficiently across many neural recording sessions, even when those sessions capture different sets of neurons and no correspondence between the neurons is known. Its tokenization scheme and PerceiverIO architecture work together to model neural activity, and the resulting model transfers well across sessions.
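One way to train across sessions without known neuron correspondences, sketched here as an assumption rather than the authors' code, is to give every (session, unit) pair its own embedding row and simply append rows when a new session arrives. The `UnitEmbeddingTable` class and the session and unit names below are hypothetical.

```python
import numpy as np


class UnitEmbeddingTable:
    """Hypothetical table of vectors, one per (session, unit) pair.

    No correspondence between neurons in different sessions is assumed:
    each new session appends fresh rows instead of reusing old ones.
    """

    def __init__(self, dim, seed=0):
        self.dim = dim
        self.rng = np.random.default_rng(seed)
        self.index = {}                   # (session_id, unit_name) -> row number
        self.weights = np.zeros((0, dim))

    def register_session(self, session_id, unit_names):
        """Add rows for any units not seen before; return the row of every unit."""
        new_rows = []
        for name in unit_names:
            key = (session_id, name)
            if key not in self.index:
                self.index[key] = len(self.index)  # next free row
                new_rows.append(self.rng.normal(scale=0.02, size=self.dim))
        if new_rows:
            self.weights = np.vstack([self.weights, np.array(new_rows)])
        return [self.index[(session_id, n)] for n in unit_names]

    def lookup(self, session_id, unit_names):
        rows = [self.index[(session_id, n)] for n in unit_names]
        return self.weights[rows]


table = UnitEmbeddingTable(dim=4)
rows_a = table.register_session("monkey_a_day1", ["ch1", "ch2", "ch3"])
rows_b = table.register_session("monkey_b_day7", ["e01", "e02"])
```

Under this design, adapting to a new session could mean optimizing only the freshly appended rows while the rest of the pretrained network stays frozen, which is one way a model could reach competitive results on new data without changing its pretrained weights.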

POYO-1 models neural activity dynamics across diverse recordings. It uses tokenization to capture fine temporal detail and combines it with cross-attention and the PerceiverIO architecture. Trained extensively on large primate datasets, this multi-session model adapts to new sessions without requiring neuron correspondences to be specified. Rotary Position Embeddings further enhance the transformer's attention mechanism. The use of 5 ms binning for neural activity has yielded fine-grained results on benchmark datasets.
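Rotary Position Embeddings encode position by rotating pairs of features through an angle proportional to position. A minimal sketch, assuming spike timestamps in seconds are used directly as continuous positions; the function name and the `base` frequency are illustrative choices, not values from the paper.

```python
import numpy as np


def rotary_embed(x, timestamps, base=10000.0):
    """Rotate feature pairs (2i, 2i+1) of each token by angle timestamp * freq_i."""
    x = np.asarray(x, dtype=float)
    timestamps = np.asarray(timestamps, dtype=float)
    dim = x.shape[-1]
    if dim % 2:
        raise ValueError("feature dimension must be even")
    freqs = base ** (-np.arange(0, dim, 2) / dim)  # one frequency per feature pair
    angles = timestamps[:, None] * freqs[None, :]   # (num_tokens, dim // 2)
    cos, sin = np.cos(angles), np.sin(angles)
    even, odd = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x)
    out[:, 0::2] = even * cos - odd * sin            # 2-D rotation of each pair
    out[:, 1::2] = even * sin + odd * cos
    return out


rng = np.random.default_rng(0)
q = rng.normal(size=(1, 8))
k = rng.normal(size=(1, 8))
# Same 0.2 s gap between query and key, placed at two different absolute times.
score_early = (rotary_embed(q, [0.1]) @ rotary_embed(k, [0.3]).T).item()
score_late = (rotary_embed(q, [5.1]) @ rotary_embed(k, [5.3]).T).item()
```

The useful property is that the attention score between a rotated query and key depends only on their time difference, so the model sees relative spike timing regardless of where a window starts.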

POYO-1's decoding performance is best illustrated on the NLB-Maze dataset, where it reaches an R² of 0.8952. The pretrained model also delivers competitive results on the same dataset without any modification to its weights, demonstrating its versatility. Together, rapid adaptation to new sessions and strong performance across diverse tasks highlight the framework's potential for comprehensive neural data analysis on a large scale.
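R² (the coefficient of determination) measures how much of the variance in the behavioral target a decoder explains: 1 is perfect, and 0 is no better than always predicting the mean. A minimal NumPy sketch, assuming the score is averaged uniformly over output dimensions such as the x and y components of a decoded velocity; the benchmark's exact evaluation protocol is not described here.

```python
import numpy as np


def r2_score(y_true, y_pred):
    """Coefficient of determination, averaged uniformly over output dimensions.

    y_true, y_pred: (num_samples, num_outputs), e.g. decoded (vx, vy) per time step.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = ((y_true - y_pred) ** 2).sum(axis=0)               # residual error
    ss_tot = ((y_true - y_true.mean(axis=0)) ** 2).sum(axis=0)  # variance around mean
    return float(np.mean(1.0 - ss_res / ss_tot))


y = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
perfect = r2_score(y, y)
mean_only = r2_score(y, np.repeat(y.mean(axis=0, keepdims=True), 3, axis=0))
```

On this scale, a score of 0.8952 means the decoder's residual error is roughly a tenth of the target's variance.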

Conclusion:

The introduction of POYO-1 marks a significant step forward in neural data analysis. Its scalability, adaptability, and precision could make it a game-changer for businesses involved in neuroscience research and applications. By streamlining the analysis of large-scale neural recordings, it opens new avenues for understanding brain function and offers businesses a powerful tool for staying at the forefront of innovation in this field.