TL;DR:

- Microsoft faces allegations of damaging The Guardian’s journalistic reputation with an AI-generated poll.

- The poll, which asked readers to speculate on the cause of a woman’s death, appeared next to a Guardian article on the death of Lilie James, a 21-year-old water polo coach.

- Reader backlash ensued, leading to the poll’s removal, while critical comments lingered online.

- The incident sparked concerns about distress to the victim’s family and reputational damage to The Guardian.

- Anna Bateson, CEO of the Guardian Media Group, called for accountability and transparency from Microsoft.

- She requested assurances that Microsoft would not use experimental AI on Guardian journalism without consent and urged the company to tell users when AI tools are used.

- The debate highlights the ethical use of AI in journalism and the need for transparency and safeguards in AI applications.

Main AI News:

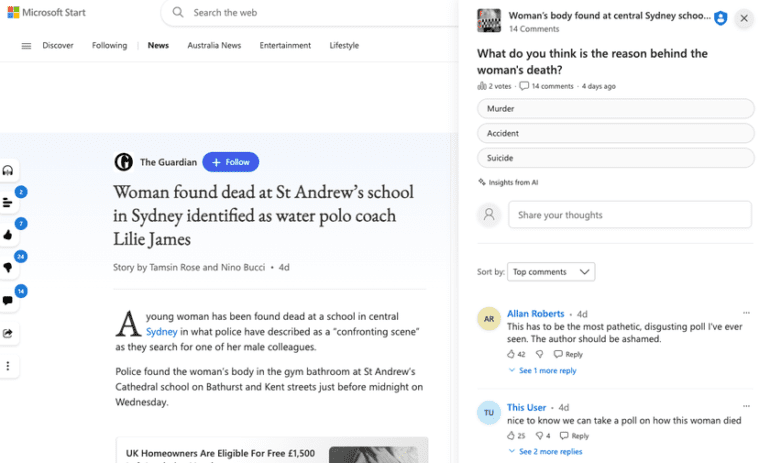

The Guardian has raised serious allegations against Microsoft, accusing the tech giant of damaging the newspaper’s journalistic reputation. The controversy centers on an AI-generated poll that Microsoft’s news aggregation service displayed alongside a Guardian article about the death of Lilie James, a 21-year-old water polo coach who suffered fatal head injuries in Sydney.

This poll, crafted by an AI program, posed a stark question: “What do you think is the reason behind the woman’s death?” It presented readers with three choices: murder, accident, or suicide. The poll’s placement next to the sensitive news article sparked outrage among readers, prompting its removal. However, critical comments from readers regarding the now-deleted survey remained online, further exacerbating the situation.

The fallout extended beyond the poll itself. Readers directed their anger at one of The Guardian’s reporters, who had no involvement in the poll’s creation, and called for the reporter’s dismissal. The response was scathing, with one commenter describing it as “the most pathetic, disgusting poll” they had ever encountered.

Anna Bateson, Chief Executive of the Guardian Media Group, expressed her concerns about the AI-generated poll in a letter addressed to Microsoft’s President, Brad Smith. She emphasized the distress the incident could cause Lilie James’s family and the significant reputational damage it had done to both The Guardian and its journalists. Bateson described the poll as an inappropriate use of generative AI by Microsoft on a story of public interest originally authored by Guardian journalists.

Furthermore, she underscored the vital role of a robust copyright framework in enabling publishers to negotiate the terms under which their journalism is utilized. Microsoft, in fact, holds a license to publish content from The Guardian, with the article and accompanying poll appearing on Microsoft Start, a news aggregation platform.

Bateson requested specific assurances from Smith, seeking Microsoft’s commitment not to apply experimental AI technology to The Guardian’s journalism without the publisher’s consent. She also urged Microsoft to notify users clearly whenever AI tools are used to generate supplementary content alongside trusted news brands like The Guardian, and asked Microsoft to take responsibility for the poll by adding a note to the article.

Conclusion:

This incident has ignited a significant discussion about the ethical and responsible use of AI in journalism. While the AI safety summit focuses on long-term safety, Microsoft and other platforms still need to set out how they will prioritize credible information, pay fairly for licensed journalism, and improve transparency and safeguards for consumers where AI is used. Microsoft has responded by deactivating Microsoft-generated polls for news articles and launching an investigation into the inappropriate content, vowing to prevent such errors from recurring.