TL;DR:

- DEJAVU introduces a groundbreaking solution to address the high computational cost of large language model (LLM) inference.

- The system employs a dynamic sparsity prediction algorithm and hardware-aware implementation to significantly boost LLM inference speed.

- The research team’s concept of contextual sparsity, in which small, input-dependent subsets of attention heads and MLP parameters stand in for the full model, improves efficiency without compromising quality.

- DEJAVU outperforms Nvidia’s FasterTransformer library by over 2× in end-to-end latency for open-source LLMs like OPT-175B.

- This innovation has the potential to make LLMs more accessible to the wider AI community and unlock new AI applications.

Main AI News:

In the realm of AI, large language models (LLMs) like GPT-3, PaLM, and OPT have long been celebrated for their impressive in-context learning capabilities. However, one glaring issue has persisted: their high computational cost during inference. Attempts to mitigate this challenge through sparsity techniques have often come up short, either requiring costly retraining or sacrificing the model’s in-context learning ability.

Addressing this predicament, a collaborative research effort involving Rice University, Zhejiang University, Stanford University, the University of California, San Diego, ETH Zurich, Adobe Research, Meta AI (FAIR), and Carnegie Mellon University has introduced DEJAVU. The system uses a low-cost algorithm that dynamically predicts contextual sparsity for each layer. In tandem with an asynchronous and hardware-aware implementation, DEJAVU delivers a substantial boost in LLM inference speed.

The Quest for Optimal Sparsity

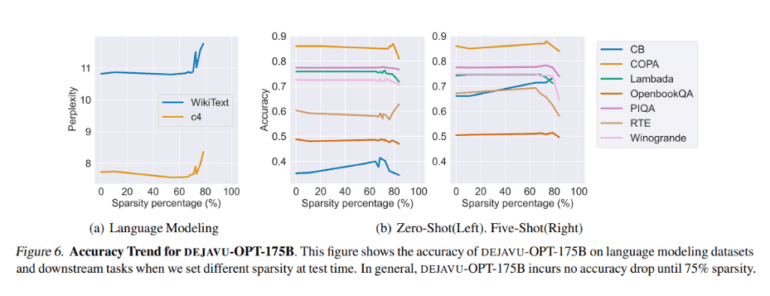

The research team set out to define the ideal sparsity for LLMs. Their objectives were clear: avoid model retraining, preserve quality and in-context learning, and deliver wall-clock speedups on modern hardware. To meet these goals, they introduced the concept of contextual sparsity: small, input-dependent subsets of attention heads and MLP parameters that yield nearly the same output as the full model for a given input.
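
To make the definition concrete, here is a minimal sketch of contextual sparsity in a single MLP block. It is an illustration under stated assumptions, not DEJAVU's code: the block uses a ReLU activation, the weights are random toy values, and the names `active_neurons` and `mlp` are hypothetical. With ReLU, any neuron whose pre-activation is not positive contributes nothing, so computing only the remaining neurons reproduces the dense output, and which neurons remain depends on the input.

```python
import random


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(p * q for p, q in zip(a, b))


def active_neurons(x, w1):
    """Indices of hidden neurons whose ReLU output is non-zero for this input."""
    return [j for j, row in enumerate(w1) if dot(row, x) > 0.0]


def mlp(x, w1, w2, neurons=None):
    """y = sum_j relu(w1[j] . x) * w2[j], restricted to `neurons` if given."""
    if neurons is None:
        neurons = range(len(w1))
    y = [0.0] * len(w2[0])
    for j in neurons:
        h = max(0.0, dot(w1[j], x))
        for k, w in enumerate(w2[j]):
            y[k] += h * w
    return y


# Toy random weights (an assumption; a real model would load trained weights).
random.seed(0)
d_model, d_hidden = 8, 32
w1 = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(d_hidden)]
w2 = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(d_hidden)]

x_a = [random.gauss(0, 1) for _ in range(d_model)]
x_b = [-v for v in x_a]  # a second input that activates a different subset

for name, x in (("x_a", x_a), ("x_b", x_b)):
    subset = active_neurons(x, w1)
    dense, sparse = mlp(x, w1, w2), mlp(x, w1, w2, subset)
    gap = max(abs(p - q) for p, q in zip(dense, sparse))
    print(f"{name}: {len(subset)}/{d_hidden} neurons used, max gap {gap:.1e}")
```

Here the subset is found by an oracle that computes every pre-activation first, which saves no work. The practical question is how to guess the subset cheaply before doing the computation.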

The Key Insight: Contextual Sparsity

The team hypothesized that contextual sparsity exists for any input given to a pre-trained LLM. This hypothesis guided their efforts to dynamically prune specific attention heads and MLP parameters for each input during inference, all without altering the pre-trained models. DEJAVU builds on this idea to speed up LLMs for applications with stringent latency constraints.

Efficient Dynamic Sparsity Prediction

A central component of DEJAVU is a low-cost, learning-based algorithm that predicts sparsity on the fly. Given the input to a particular layer, the algorithm predicts the relevant subset of attention heads or MLP parameters in the subsequent layer, and only that subset is loaded for computation. To keep prediction from adding sequential overhead, the predictor runs asynchronously, much like a classic branch predictor.
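
The lookahead idea can be sketched as follows. This is a hedged illustration rather than the authors' implementation: the predictor is an untrained low-rank linear scorer standing in for a trained one, the blocks are toy residual ReLU MLPs, a Python thread stands in for asynchronous execution on the accelerator, and the names `predict_neurons`, `sparse_block`, and `forward` are hypothetical.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def predict_neurons(x, predictor, k):
    """Score every hidden neuron with a cheap low-rank scorer and keep the top k."""
    down, up = predictor
    z = [dot(row, x) for row in down]      # d_model -> rank
    scores = [dot(row, z) for row in up]   # rank -> d_hidden
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    return sorted(ranked[:k])


def sparse_block(x, w1, w2, neurons):
    """Residual ReLU MLP block that touches only the selected neurons."""
    y = list(x)
    for j in neurons:
        h = max(0.0, dot(w1[j], x))
        for i, w in enumerate(w2[j]):
            y[i] += h * w
    return y


def forward(x, layers, k):
    """Run all blocks; the predictor for block i+1 runs while block i computes."""
    with ThreadPoolExecutor(max_workers=1) as pool:
        # Block 0 has no earlier input to look ahead from, so predict directly.
        neurons = predict_neurons(x, layers[0]["predictor"], k)
        for i, layer in enumerate(layers):
            pending = None
            if i + 1 < len(layers):
                # Lookahead: guess block i+1's neurons from block i's input.
                pending = pool.submit(predict_neurons, x, layers[i + 1]["predictor"], k)
            x = sparse_block(x, layer["w1"], layer["w2"], neurons)
            if pending is not None:
                neurons = pending.result()
    return x


def rand_matrix(rows, cols, scale):
    return [[random.gauss(0, scale) for _ in range(cols)] for _ in range(rows)]


# Toy sizes and untrained weights (assumptions for illustration only).
random.seed(0)
d_model, d_hidden, rank, n_layers = 8, 32, 2, 3
layers = [
    {
        "w1": rand_matrix(d_hidden, d_model, 0.3),
        "w2": rand_matrix(d_hidden, d_model, 0.3),
        "predictor": (rand_matrix(rank, d_model, 1.0), rand_matrix(d_hidden, rank, 1.0)),
    }
    for _ in range(n_layers)
]
x0 = [random.gauss(0, 1) for _ in range(d_model)]
out = forward(x0, layers, k=8)
print(len(out))
```

Because the prediction for the next block is issued before the current block finishes, it does not sit on the critical path. The cost is that it sees a slightly stale input, so a real predictor has to be trained to stay accurate one layer ahead.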

Hardware-Aware Implementation

DEJAVU also includes a hardware-aware implementation of sparse matrix multiplication, which leads to a marked reduction in latency for open-source LLMs like OPT-175B. It reduces end-to-end latency by more than 2× compared with Nvidia’s state-of-the-art FasterTransformer library while maintaining model quality. It also outperforms the widely used Hugging Face implementation at small batch sizes.

A Leap Forward in LLM Inference

DEJAVU’s combination of asynchronous lookahead predictors and hardware-efficient sparsity is a game-changer for LLM inference. The promising empirical results underscore the potential of contextual sparsity to drastically reduce inference latency compared with state-of-the-art inference systems. The research team envisions their work as a significant step toward making LLMs more accessible to the broader AI community, potentially opening the door to exciting new AI applications.

Conclusion:

DEJAVU’s use of contextual sparsity to speed up inference represents a game-changing development for large language models. It not only addresses the long-standing issue of high computational cost during inference but also opens up exciting possibilities for adoption by the broader AI community and the exploration of new AI applications. This advancement holds the potential to reshape the landscape of AI and machine learning, offering improved efficiency and accessibility.