TL;DR:

- Transformer models face limitations in handling lengthy data sequences.

- A novel memory system, Memoria, inspired by human memory principles, enhances transformer performance.

- Memoria stores and retrieves information at multiple memory levels, resembling human memory associations.

- Initial experiments show Memoria significantly improves long-term dependency understanding in various tasks.

- Memoria could reshape machine learning, with promising implications for AI and natural language processing.

Main AI News:

Overcoming the limited memory capacity of transformers has become a pressing challenge in artificial intelligence. Transformers are celebrated for their ability to decipher intricate patterns in sequential data and excel in many domains, but they struggle when confronted with very long sequences. The conventional remedy, simply expanding the input length, does not replicate the selective and efficient way human cognition processes information. That gap calls for a paradigm shift toward designs grounded in well-established neuropsychological principles.

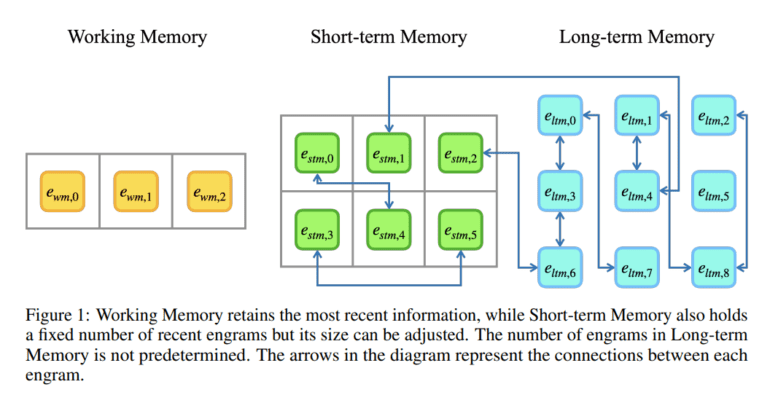

Amid these limitations, a promising solution has emerged that draws inspiration from human memory. This memory system, named ‘Memoria,’ has demonstrated remarkable potential for improving the performance of transformer models. Memoria stores and retrieves units of information, known as “engrams,” across multiple memory levels: working memory, short-term memory, and long-term memory. It adjusts the connection weights between engrams in accordance with Hebb’s rule, closely mirroring the way human memory associations are forged.
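To make the idea concrete, here is a minimal sketch of a three-level engram store with Hebbian-style association weights. It is a toy illustration, not the Memoria implementation: the class `ToyHebbianMemory`, its methods `store`, `reinforce`, and `recall`, the capacity parameters, the `learning_rate`, and the use of plain strings as engrams are all assumptions made for this example.

```python
from collections import defaultdict
from itertools import combinations


class ToyHebbianMemory:
    """Toy three-level engram store (hypothetical, for illustration only)."""

    def __init__(self, working_capacity=2, short_term_capacity=4, learning_rate=1.0):
        self.working_capacity = working_capacity
        self.short_term_capacity = short_term_capacity
        self.learning_rate = learning_rate
        self.working = []      # most recent engrams
        self.short_term = []   # engrams pushed out of working memory
        self.long_term = []    # engrams pushed out of short-term memory
        # Symmetric connection weights, keyed by a sorted (engram, engram) pair.
        self.weights = defaultdict(float)

    def store(self, engram):
        """Add an engram to working memory, demoting the oldest ones as needed."""
        self.working.append(engram)
        if len(self.working) > self.working_capacity:
            self.short_term.append(self.working.pop(0))
        if len(self.short_term) > self.short_term_capacity:
            self.long_term.append(self.short_term.pop(0))

    def reinforce(self, active):
        """Hebbian update: engrams that are active together get a stronger link."""
        for a, b in combinations(sorted(set(active)), 2):
            self.weights[(a, b)] += self.learning_rate

    def recall(self, cue, top_k=1):
        """Return the engrams most strongly connected to the cue."""
        scores = {}
        for (a, b), weight in self.weights.items():
            if a == cue:
                scores[b] = weight
            elif b == cue:
                scores[a] = weight
        # Strongest connection first; ties broken alphabetically.
        return sorted(scores, key=lambda e: (-scores[e], e))[:top_k]


memory = ToyHebbianMemory(working_capacity=2, short_term_capacity=2)
for engram in ["a", "b", "c", "d", "e"]:
    memory.store(engram)

memory.reinforce(["a", "b"])
memory.reinforce(["a", "b"])
memory.reinforce(["a", "c"])
print(memory.working, memory.short_term, memory.long_term)
print(memory.recall("a"))
```

In this sketch the oldest engrams drift from working memory into short-term and then long-term memory, while repeated co-activation strengthens the link between two engrams so that one becomes the preferred recall for the other. A system attached to a real transformer would operate on learned representations rather than strings.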

Preliminary experiments with Memoria have yielded highly encouraging results. When integrated with existing transformer-based models, it substantially improves their ability to account for long-term dependencies across a diverse range of tasks. It outperforms existing approaches on sorting and language modeling tasks, offering an effective remedy for the short-term memory constraints that have long limited traditional transformers.

This memory architecture, which does not depend on any specific individual or institution, has the potential to reshape the field of machine learning. As researchers explore its capabilities further, Memoria is poised to find applications in more complex tasks and to extend what transformer-based models can do. The development marks a significant step in the ongoing effort to equip transformers to handle extensive data sequences, with promising implications for artificial intelligence and natural language processing.

Conclusion:

Memoria holds the potential to reshape the machine learning landscape by addressing the memory limitations of transformers. By enabling transformers to handle extensive data sequences, it promises to unlock new AI applications, significantly impact the market, and fuel advancements in artificial intelligence and natural language processing.