TL;DR:

- Researchers at Microsoft, Peking University, and Xi’an Jiaotong University introduce ‘LeMa,’ an AI learning method inspired by human problem-solving.

- LeMa helps large language models (LLMs) improve their math problem-solving skills by learning from their own mistakes.

- The technique collects flawed reasoning paths for math problems, has GPT-4 correct them, and fine-tunes models on the resulting data, yielding significant performance improvements.

- Specialized math LLMs such as WizardMath and MetaMath also benefit from LeMa, reaching 85.4% pass@1 accuracy on GSM8K and 27.1% on MATH.

- The research, including code and data, is open-source, fostering further exploration in the AI community.

- LeMa represents a major milestone in AI, bringing AI systems closer to human-like learning processes.

- This breakthrough has broad implications, particularly in AI-reliant industries like healthcare, finance, and autonomous vehicles.

Main AI News:

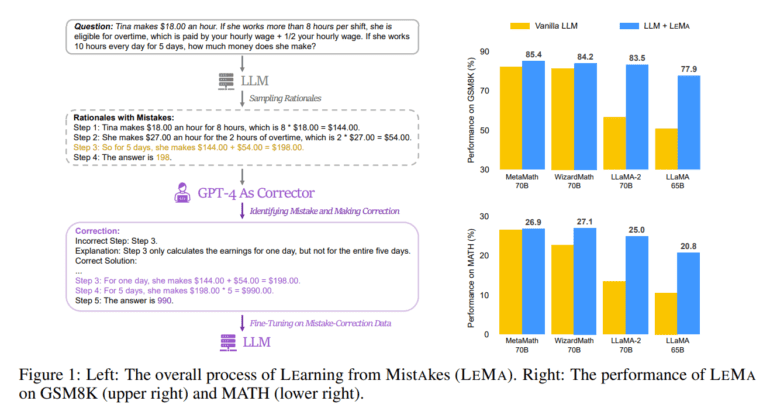

In a groundbreaking development, researchers from Microsoft Research Asia, Peking University, and Xi’an Jiaotong University have developed a new approach to strengthen the mathematical problem-solving abilities of large language models (LLMs). The technique, named Learning from Mistakes (LeMa), lets AI systems learn from their errors, mirroring the way humans sharpen their problem-solving skills.

Taking inspiration from the human learning process, where students learn from their mistakes to improve future performance, the researchers applied this concept to LLMs. LeMa leverages mistake-correction data pairs generated by GPT-4 to fine-tune these models.

The process begins by collecting flawed reasoning paths for math word problems from various LLMs, such as LLaMA-2. GPT-4, acting as a “corrector,” then identifies the mistaken step, explains why it is wrong, and produces a corrected reasoning path with the final answer. This correction data is then used, together with standard chain-of-thought data, to fine-tune the models.
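To make the data pipeline more concrete, here is a minimal Python sketch of how one flawed reasoning path and its correction might be packaged into a fine-tuning example and mixed with ordinary chain-of-thought examples. The `Correction` class, the prompt/target template, and the rule of keeping only corrections that reach the gold answer are illustrative assumptions, not the researchers’ released code; the call to GPT-4 itself is omitted.

```python
from dataclasses import dataclass


@dataclass
class Correction:
    """Hypothetical container for one corrector (e.g. GPT-4) response."""
    mistake_step: int            # 1-based index of the first wrong step
    explanation: str             # why that step is wrong
    corrected_steps: list[str]   # corrected reasoning from that step onward
    final_answer: str


def format_correction_example(question, flawed_steps, correction):
    """Package a (flawed path, correction) pair as a prompt/target example."""
    flawed = "\n".join(
        f"Step {i}: {step}" for i, step in enumerate(flawed_steps, start=1)
    )
    prompt = f"Question: {question}\nIncorrect reasoning:\n{flawed}\nCorrection:"
    corrected = "\n".join(correction.corrected_steps)
    target = (
        f"Incorrect step: Step {correction.mistake_step}\n"
        f"Explanation: {correction.explanation}\n"
        f"Correct solution:\n{corrected}\n"
        f"The answer is: {correction.final_answer}"
    )
    return {"prompt": prompt, "target": target}


def build_training_set(cot_examples, correction_records, gold_answers):
    """Combine CoT examples with correction examples.

    correction_records: iterable of (question_id, question, flawed_steps, Correction).
    gold_answers: dict mapping question_id -> reference answer string.
    Assumed filter: keep a correction only if its final answer matches the gold answer.
    """
    data = list(cot_examples)
    for qid, question, flawed_steps, correction in correction_records:
        if correction.final_answer.strip() == gold_answers[qid].strip():
            data.append(format_correction_example(question, flawed_steps, correction))
    return data


# Usage with toy data
cot = [{"prompt": "Question: What is 2 + 3?", "target": "2 + 3 = 5\nThe answer is: 5"}]
flawed = ["Tom starts with 3 apples and buys 2 more.", "3 + 2 = 6."]
fix = Correction(
    mistake_step=2,
    explanation="3 + 2 equals 5, not 6.",
    corrected_steps=["3 + 2 = 5."],
    final_answer="5",
)
records = [("q1", "Tom has 3 apples and buys 2 more. How many does he have?", flawed, fix)]
train = build_training_set(cot, records, {"q1": "5"})
print(len(train))  # 2
print(train[1]["target"])
```

Filtering on the final answer is a cheap way to avoid training on corrections that are themselves wrong; any real pipeline may apply stricter checks.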

The results are striking. According to the researchers, across five backbone LLMs and two mathematical reasoning tasks, LeMa consistently outperforms fine-tuning on chain-of-thought (CoT) data alone.

Specialized math LLMs such as WizardMath and MetaMath also benefit from LeMa, reaching 85.4% pass@1 accuracy on GSM8K and 27.1% on MATH. These results exceed the previous state of the art among open-source models that solve these challenging benchmarks without code execution.
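For readers unfamiliar with the metric, pass@1 is the share of problems for which a model’s single generated solution (often produced with greedy decoding) reaches the correct final answer. The sketch below computes it under an assumed output convention in which each completion ends with `The answer is: <value>`; the function names and the extraction rule are hypothetical, and answer matching for MATH is considerably more involved, since its answers are LaTeX expressions.

```python
import re
from typing import List, Optional

# Assumed output convention: the model ends with "The answer is: <value>".
ANSWER_PATTERN = re.compile(r"The answer is:\s*(.+)")


def extract_answer(completion: str) -> Optional[str]:
    """Return the last 'The answer is:' value, lightly normalized."""
    matches = ANSWER_PATTERN.findall(completion)
    if not matches:
        return None
    # Drop surrounding whitespace, a trailing period, and thousands separators.
    return matches[-1].strip().rstrip(".").replace(",", "")


def pass_at_1(completions: List[str], references: List[str]) -> float:
    """Share of problems whose single completion yields the reference answer."""
    if not references or len(completions) != len(references):
        raise ValueError("need at least one problem and one completion per reference")
    correct = sum(
        extract_answer(c) == r.strip().replace(",", "")
        for c, r in zip(completions, references)
    )
    return correct / len(references)


# Toy completions, one per problem
completions = [
    "48 / 2 = 24 and 48 + 24 = 72.\nThe answer is: 72",
    "The total cost is 1,200 dollars.\nThe answer is: 1,200.",
    "I think the result is 5.",  # no final-answer line, so counted as wrong
]
references = ["72", "1200", "5"]
print(pass_at_1(completions, references))  # 0.6666666666666666
```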

The significance of this work extends beyond improved reasoning in AI models. It marks a step toward AI systems that learn and improve from their mistakes, bringing them closer to the way humans learn.

The research team has made their work, including code, data, and models, publicly available on GitHub. This open-source approach encourages the broader AI community to continue exploring this path, potentially leading to further advances in machine learning.

The emergence of LeMa stands as a pivotal moment in AI, suggesting that machine learning can borrow from the way humans learn. The approach has the potential to transform industries that rely heavily on AI, such as healthcare, finance, and autonomous vehicles, where error correction and continuous learning are paramount.

As the field of AI continues its rapid evolution, the integration of human-like learning processes, exemplified by learning from mistakes, becomes a critical factor in the pursuit of more efficient and effective AI systems.

Conclusion:

The introduction of ‘LeMa’ marks a significant advancement in the AI market. By enabling AI systems to learn from their mistakes in a manner akin to human learning, this breakthrough promises to enhance problem-solving capabilities across various industries. The open-source nature of the research encourages collaboration and innovation, positioning AI for even more transformative developments in the future.