TL;DR:

- Neural networks are invaluable tools in various fields, including image recognition and natural language processing.

- Interpreting and controlling neural network operations has been a challenge due to their dense and continuous computations.

- Stanford researchers introduce “codebook features,” a novel method that enhances interpretability and control using vector quantization.

- Codebook features transform the network’s hidden states into a sparse, understandable representation.

- Unlike traditional methods that examine individual neurons, this approach offers a more holistic view of the network’s decision-making processes.

- Experiments demonstrate the effectiveness of codebook features in sequence and language modeling tasks.

- Codebook features outperform individual neurons in classifying states and linguistic features.

- The approach could substantially improve neural network interpretability and shows significant potential for complex language processing tasks.

Main AI News:

Neural networks have become indispensable tools in artificial intelligence, excelling in domains from image recognition to natural language processing and predictive analytics. Yet one challenge has persisted for years: understanding and controlling their inner workings. Unlike conventional software, neural networks carry out dense, continuous internal computations, which makes them hard-to-inspect black boxes.

In response, a research team from Stanford University has introduced “codebook features,” a method intended to make neural networks both more interpretable and easier to control. The key idea is to use vector quantization to discretize the network’s hidden states into a sparse combination of vectors. The result is a more comprehensible representation of the network’s internal processes.

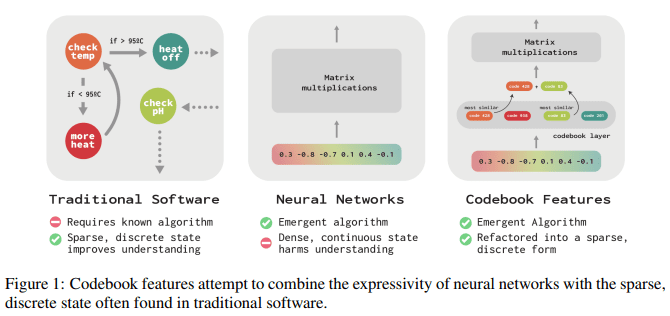

The opacity of neural networks has been a substantial obstacle to their wider adoption. Codebook features aim to combine the expressive power of neural networks with the sparsity and discreteness characteristic of conventional software. During training, the method learns a codebook, a set of vectors attached to a layer. Because the layer’s output is built only from codebook entries, the codebook delimits the states that layer can take at any given moment, which lets researchers map the network’s hidden states into a more interpretable format.

At the heart of the approach, the codebook is used to identify the top-k vectors most similar to the network’s activations. These selected vectors are aggregated and passed to the next layer, creating a sparse and discrete bottleneck within the network. This converts the network’s dense, continuous computations into a form that is amenable to interpretation. In contrast to traditional methods that examine individual neurons, codebook features offer a more holistic and coherent view of the network’s decision-making mechanisms.
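The top-k lookup described above can be illustrated with a minimal sketch in plain Python. It rests on two assumptions that the article does not spell out: similarity is measured with cosine similarity, and the selected vectors are aggregated by summation. The function names and the toy codebook are hypothetical, and training the codebook is out of scope here.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (assumed metric)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def codebook_bottleneck(activation, codebook, k):
    """Replace a dense activation with the sum of its k most similar codes.

    Returns the indices of the selected codes (the discrete, inspectable
    part) and the vector that would be passed on to the next layer.
    """
    scores = [cosine_similarity(activation, code) for code in codebook]
    # Indices of the k highest-scoring codebook entries, best first.
    top_ids = sorted(range(len(codebook)), key=lambda i: scores[i], reverse=True)[:k]
    # Aggregation by summation is an assumption of this sketch.
    output = [sum(codebook[i][d] for i in top_ids) for d in range(len(activation))]
    return top_ids, output


# Hypothetical toy codebook with four 3-dimensional codes.
codebook = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [-1.0, 0.0, 0.0],
]
activation = [0.9, 0.4, -0.1]

active_codes, next_layer_input = codebook_bottleneck(activation, codebook, k=2)
print(active_codes, next_layer_input)
```

Only the short list of active code indices needs to be inspected to see what the layer is doing at a given step, which is where the sparsity and discreteness come from.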

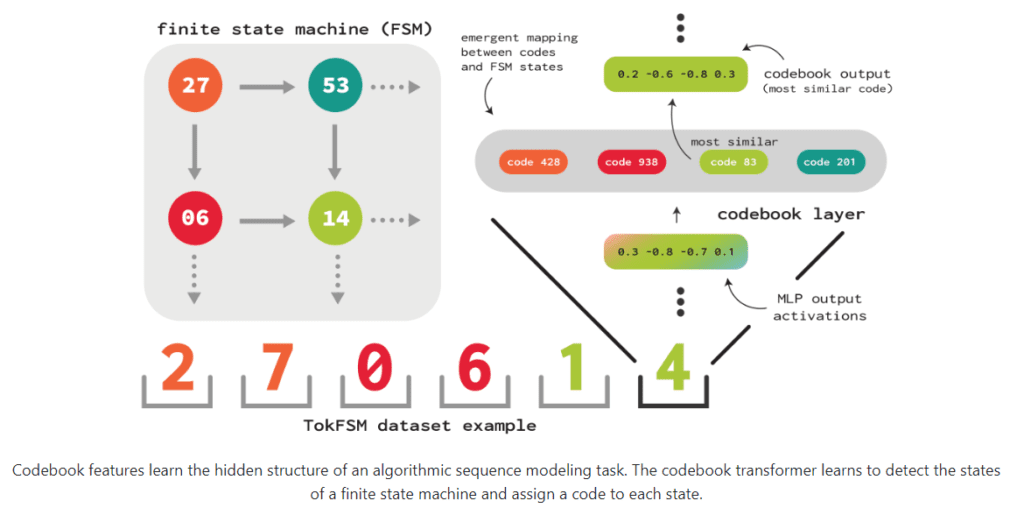

To validate the method, the research team ran experiments on sequence modeling tasks and language modeling benchmarks. On a sequence modeling dataset, the team equipped the model with codebooks at each layer. As a result, nearly every Finite State Machine (FSM) state was assigned a distinct code in the MLP layer’s codebook. These codes classified FSM states with over 97% precision, surpassing the performance of individual neurons.
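One plausible way to read "a code classifies a state with a given precision" is: of all the steps at which the code is active, what fraction occur in that state? The sketch below computes this on made-up toy data. The function name, the code indices, and the state labels are all hypothetical, and the article does not describe the team's exact evaluation procedure.

```python
def code_precision(active_codes_per_step, states, code, state):
    """Precision of treating 'code is active' as a predictor of 'state'.

    active_codes_per_step: one set of active code indices per step.
    states: the true state label at each step.
    Returns None if the code never activates.
    """
    fired = [s for codes, s in zip(active_codes_per_step, states) if code in codes]
    if not fired:
        return None
    return sum(1 for s in fired if s == state) / len(fired)


# Toy data for illustration only; these are not results from the study.
active_codes_per_step = [{3, 7}, {3, 9}, {5, 7}, {3, 8}, {5, 9}]
states = ["A", "A", "B", "A", "B"]

precision_code_3 = code_precision(active_codes_per_step, states, code=3, state="A")
precision_code_7 = code_precision(active_codes_per_step, states, code=7, state="A")
print(precision_code_3, precision_code_7)
```

In this toy data, code 3 fires only in state A, so it is a clean indicator of that state, while code 7 fires in both states and is not. The same calculation can be applied to a single neuron by thresholding its activation, which is how a comparison between codes and neurons could be set up.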

The researchers also found that codebook features capture a wide array of linguistic phenomena in language models. By analyzing specific codes, they identified representations of linguistic features including punctuation, syntax, semantics, and topics. The codes also outperformed individual neurons in the model at classifying basic linguistic features. This suggests that codebook features could improve the interpretability and control of neural networks, particularly in complex language processing tasks.

Source: Marktechpost Media Inc.

Conclusion:

Codebook features mark a significant step toward more interpretable and manageable neural networks. By combining the expressive power of neural networks with a sparse, discrete representation of their hidden states, the method could improve our understanding of these models and support their more effective use across diverse domains, moving toward AI systems that are more comprehensible and controllable.