TL;DR:

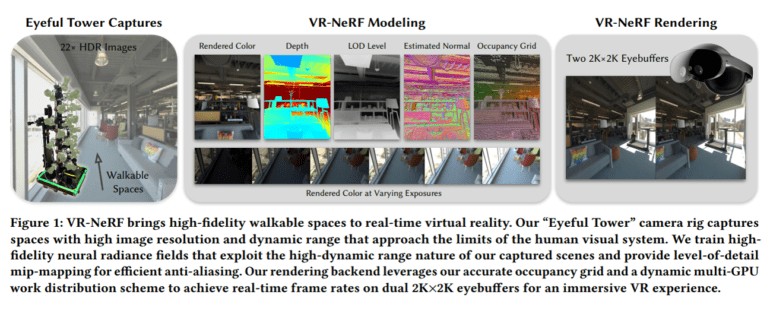

- VR-NeRF, an advanced AI system, redefines VR with high-fidelity walkable space capture.

- It addresses limitations in existing VR methods, allowing users to explore real-world spaces seamlessly.

- A dataset of thousands of 50-megapixel HDR images enables high-fidelity view synthesis.

- VR-NeRF, a NeRF-based method tailored to this dataset, offers real-time VR rendering at high quality.

- A unique multi-camera rig captures HDR photos, while a custom GPU renderer ensures consistent high-quality rendering.

- VR-NeRF optimizes the balance between image quality and speed with instant neural graphics primitives (NGPs).

- Extensive testing validates VR-NeRF’s ability to produce high-quality VR renderings with a wide dynamic range.

Main AI News:

In the ever-evolving realm of virtual reality (VR), a new system has emerged that sets a higher standard for high-fidelity capture and rendering of walkable spaces. Researchers at Meta have introduced VR-NeRF, an end-to-end AI system that covers capture, reconstruction, and real-time rendering of real-world spaces for VR.

As affordable VR technology gains traction, the demand for immersive visual media experiences continues to soar. Realistic VR photography and video have become the holy grail of this industry. However, existing approaches have typically fallen into two categories, each with its own set of limitations.

The first category focuses on high-fidelity view synthesis but confines users to a small headbox with a diameter of less than 1 meter, restricting their movements within a limited space. On the other hand, the second category offers scene-scale free-viewpoint view synthesis, allowing users greater freedom of movement. However, this freedom comes at the cost of lower image quality and framerate.

To overcome these constraints, the authors of this paper introduce VR-NeRF, a system that lets users explore real-world spaces seamlessly in a VR environment. What sets VR-NeRF apart is its reliance on datasets comprising thousands of 50-megapixel HDR images, with some captures exceeding 100 gigapixels in total. This wealth of data underpins VR-NeRF's high-fidelity view synthesis.
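The gigapixel figure is easiest to read as a total across all images of a capture rather than the size of any single image. The following is a minimal arithmetic sketch under that reading; the function names and the image counts passed in are illustrative assumptions, not values taken from the paper.

```python
import math

PIXELS_PER_MEGAPIXEL = 1_000_000
PIXELS_PER_GIGAPIXEL = 1_000_000_000


def total_gigapixels(num_images: int, megapixels_per_image: float = 50.0) -> float:
    """Total pixel count of a capture, expressed in gigapixels."""
    total_pixels = num_images * megapixels_per_image * PIXELS_PER_MEGAPIXEL
    return total_pixels / PIXELS_PER_GIGAPIXEL


def images_needed(target_gigapixels: float, megapixels_per_image: float = 50.0) -> int:
    """Smallest number of images whose combined size reaches the target."""
    pixels_per_image = megapixels_per_image * PIXELS_PER_MEGAPIXEL
    return math.ceil(target_gigapixels * PIXELS_PER_GIGAPIXEL / pixels_per_image)


if __name__ == "__main__":
    # Hypothetical capture sizes, chosen only to illustrate the scale.
    for count in (1_000, 2_000, 5_000):
        print(count, "images ->", total_gigapixels(count), "gigapixels")
    print("images needed for 100 gigapixels:", images_needed(100))
```

Under these assumptions, a capture crosses the 100-gigapixel mark at about two thousand 50-megapixel images, which is consistent with a dataset of "thousands" of photos.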

The rise of Neural Radiance Fields (NeRFs) has been remarkable, thanks to their ability to produce high-quality novel-view synthesis. However, NeRFs have often struggled with large and intricate scenes. The researchers' method, VR-NeRF, is tailored to their high-fidelity dataset, and this adaptation enables real-time VR rendering at high quality.
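For readers unfamiliar with how a radiance field turns into pixels, the sketch below shows the standard NeRF compositing step: samples along a camera ray are blended front to back using their density and color. It is a generic illustration of the NeRF formulation in plain Python, not VR-NeRF's actual renderer, and the function name and sample values are hypothetical.

```python
import math


def composite_ray(densities, colors, deltas):
    """Blend samples along one ray, front to back.

    densities: volume density (sigma) at each sample
    colors:    (r, g, b) tuple at each sample
    deltas:    distance between consecutive samples
    Returns the accumulated pixel color and the remaining transmittance.
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb, delta in zip(densities, colors, deltas):
        # Opacity contributed by this segment of the ray.
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        for channel in range(3):
            pixel[channel] += weight * rgb[channel]
        # Light that survives this segment continues to the next sample.
        transmittance *= 1.0 - alpha
    return pixel, transmittance


if __name__ == "__main__":
    # Hypothetical ray: empty space, then a thin red surface, then a blue wall.
    densities = [0.0, 5.0, 50.0]
    colors = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]
    deltas = [0.1, 0.1, 0.1]
    print(composite_ray(densities, colors, deltas))
```

Because every pixel requires many such samples, each evaluated by a neural network, large scenes at VR resolution are costly, which is the scaling problem the article alludes to.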

The secret weapon in the VR-NeRF arsenal is a one-of-a-kind multi-camera rig, meticulously designed to capture a multitude of uniformly distributed HDR photos of a scene. This hardware innovation underpins the system’s prowess.

Complementing the hardware is a custom GPU renderer exclusive to VR-NeRF. This renderer not only ensures high-fidelity rendering but also maintains a consistent frame rate of 36 Hz for an immersive VR experience. The researchers have also extended instant neural graphics primitives (NGPs), improving color accuracy and enabling flexible rendering at multiple levels of detail, while carefully tuning the trade-off between quality and speed.
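To put the 36 Hz figure in perspective, the sketch below converts a refresh rate into a per-frame time budget and a pixel throughput. The per-eye resolution used in the example is an illustrative assumption, not a figure stated in this article, and the function names are hypothetical.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / refresh_hz


def pixels_per_second(width: int, height: int, eyes: int, refresh_hz: int) -> int:
    """Pixels the renderer must produce each second for a stereo display."""
    return width * height * eyes * refresh_hz


if __name__ == "__main__":
    hz = 36
    # Assumed per-eye resolution, for illustration only.
    width, height, eyes = 2048, 2048, 2
    print(f"frame budget at {hz} Hz: {frame_budget_ms(hz):.1f} ms")
    print("pixels per second:", pixels_per_second(width, height, eyes, hz))
```

At 36 Hz the renderer has roughly 27.8 ms per frame, and under the assumed resolution it must shade on the order of 300 million pixels per second, which gives a sense of why the quality-versus-speed trade-off matters.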

The researchers have left no stone unturned in validating the efficacy of their approach. Rigorous testing on challenging high-fidelity datasets and a meticulous comparison with existing baselines have showcased VR-NeRF’s ability to produce high-quality VR renderings of walkable spaces, complete with a wide dynamic range.

Conclusion:

VR-NeRF represents a significant leap in VR technology, promising unparalleled realism and freedom of movement. This innovation has the potential to reshape the VR market, offering creators and consumers a new era of immersive experiences and opening doors to diverse applications across industries.