TL;DR:

- LocoMuJoCo is a new benchmark for evaluating and comparing Imitation Learning (IL) algorithms on locomotion tasks.

- This benchmark offers a diverse set of environments, including quadrupeds, bipeds, and musculoskeletal human models, coupled with real motion capture data, expert data, and sub-optimal data for comprehensive evaluation.

- It emphasizes the need for metrics rooted in probability distributions and biomechanical principles for precise behavior quality assessment.

- LocoMuJoCo is Python-based and compatible with Gymnasium and Mushroom-RL libraries, providing a wide array of tasks and datasets for locomotion research.

- Researchers can explore various IL paradigms, such as embodiment mismatches, expert actions, and sub-optimal states, with baselines for classical IRL and adversarial IL approaches.

- The benchmark provides an intuitive interface, handcrafted metrics, and state-of-the-art baseline algorithms.

- It is designed to be extensible, with interfaces to common RL libraries.

Main AI News:

Researchers from the Intelligent Autonomous Systems Group, Locomotion Laboratory, German Research Center for AI, Centre for Cognitive Science, and Hessian.AI have released LocoMuJoCo, a machine learning benchmark designed for rigorous evaluation and comparison of Imitation Learning (IL) algorithms. The benchmark focuses on locomotion tasks and targets the limitations of existing benchmarks.

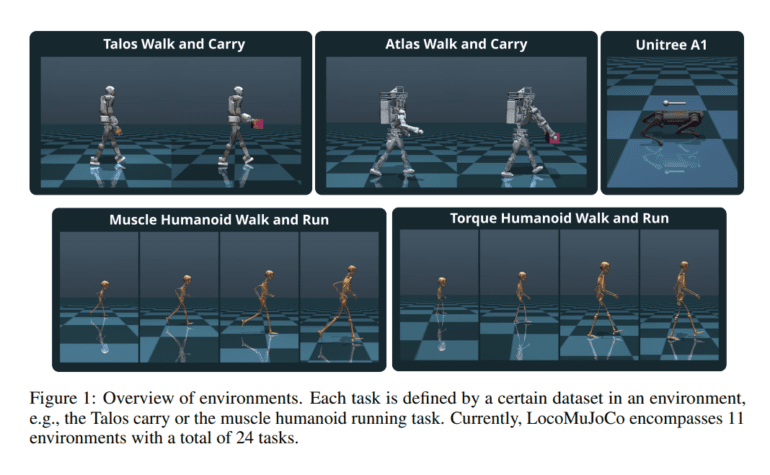

LocoMuJoCo offers a diverse set of environments covering quadrupeds, bipeds, and musculoskeletal human models. It includes real, noisy motion capture data, ground-truth expert data, and ground-truth sub-optimal data, which together enable evaluation across a range of difficulty levels. This contrasts with the often simplified tasks found in previous benchmarks.

The benchmark emphasizes metrics grounded in probability distributions and biomechanical principles for assessing behavior quality. In doing so, it addresses the need for more nuanced evaluation criteria in Imitation Learning.
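To make the idea of a distribution-based metric concrete, here is a minimal sketch in plain Python. It is not LocoMuJoCo's own metric or API: the function name `wasserstein_1d`, the choice of the 1-D Wasserstein-1 distance, and the synthetic "walking speed" samples are all assumptions made for illustration. The point is only that a metric of this kind compares the distribution of some quantity under the expert with the distribution under the learned policy, instead of comparing a single scalar return.

```python
import random


def wasserstein_1d(expert, agent):
    """Wasserstein-1 distance between two equal-size 1-D empirical samples.

    Hypothetical helper for illustration; not part of LocoMuJoCo.
    For equal-size samples, W1 is the mean absolute difference
    between the sorted values of the two samples.
    """
    if len(expert) != len(agent):
        raise ValueError("this sketch assumes equal sample sizes")
    if not expert:
        raise ValueError("samples must be non-empty")
    pairs = zip(sorted(expert), sorted(agent))
    return sum(abs(e - a) for e, a in pairs) / len(expert)


# Synthetic stand-ins for a per-step quantity such as forward speed (m/s).
# Real data would come from expert trajectories and agent rollouts.
rng = random.Random(0)
expert_speed = [rng.gauss(1.25, 0.1) for _ in range(1000)]
good_agent = [rng.gauss(1.25, 0.1) for _ in range(1000)]
poor_agent = [rng.gauss(0.8, 0.3) for _ in range(1000)]

print("good agent:", wasserstein_1d(expert_speed, good_agent))
print("poor agent:", wasserstein_1d(expert_speed, poor_agent))
```

A smaller distance means the agent's behavior is distributed more like the expert's, so the agent that matches the expert's speed profile scores lower than the slower, more erratic one.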

LocoMuJoCo is written in Python and aims to address the lack of standardization among existing IL benchmarks. It is compatible with the Gymnasium and Mushroom-RL libraries and offers a wide range of tasks and datasets for humanoid and quadruped locomotion, as well as musculoskeletal human models. With LocoMuJoCo, researchers can explore various IL paradigms, including embodiment mismatches, learning with or without expert actions, and handling sub-optimal expert states and actions. It also provides baselines for classical Inverse Reinforcement Learning (IRL) and adversarial IL approaches, including GAIL, VAIL, GAIfO, IQ-Learn, LS-IQ, and SQIL, all implemented with Mushroom-RL.

The benchmark is also designed to be easy to use, featuring an intuitive interface for dynamics randomization and a large number of partially observable tasks for training agents across different embodiments. It ships with handcrafted metrics and state-of-the-art baseline algorithms, making it a comprehensive resource for IL research. Its interfaces to common RL libraries also make it extensible, so it can be adapted as new methods emerge.

LocoMuJoCo advances imitation learning for locomotion by combining diverse environments with comprehensive datasets. Its design supports thorough evaluation and comparison of IL algorithms, backed by handcrafted metrics and baseline implementations. Because it covers quadrupeds, bipeds, and musculoskeletal human models, it can be adapted to various scenarios and difficulty levels.

Conclusion:

LocoMuJoCo aims to overcome the limitations of existing benchmarks for evaluating IL algorithms. It offers extensive environments and datasets and is compatible with common RL libraries. The research community is encouraged to explore it further, especially in developing metrics grounded in probability distributions and biomechanical principles.