TL;DR:

- Hammerspace has introduced a new data architecture for training Large Language Models (LLMs) in hyperscale environments.

- The architecture enables unified data management by combining a supercomputing-class parallel file system with access through the standard NFS protocol.

- Key components include a high-performance parallel file system, a standards-based software approach, support for commodity hardware, streamlined data pipelines, a high-speed data path, a fault-tolerant design, and objective-based data placement.

- Hammerspace positions the architecture as a new gold standard for AI at scale, helping organizations use data from diverse sources and orchestrate continuous learning.

Main AI News:

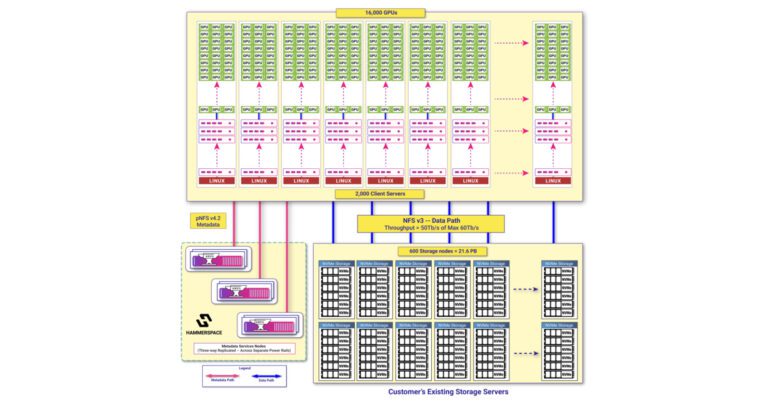

Hammerspace has unveiled a data architecture designed specifically for training Large Language Models (LLMs) in hyperscale environments. The company describes it as the only solution that lets artificial intelligence (AI) teams build a unified data infrastructure combining the performance of a supercomputing-class parallel file system with access for both applications and researchers through the standard NFS protocol.

The success of AI strategies depends on scaling to very large numbers of GPUs while retaining flexible access to local and distributed data repositories. Organizations must also be able to use their data regardless of the underlying hardware or cloud infrastructure, while upholding rigorous data governance standards. These demands are most acute in LLM development, where models often have hundreds of billions of parameters and training can involve tens of thousands of GPUs and hundreds of petabytes of diverse unstructured data.

According to Hammerspace, the architecture is proven in practice and uniquely addresses the performance, ease of deployment, and support for hardware and software standards that LLM data pipelines and data storage require.

Hammerspace’s Key Components for LLM Success:

- Hammerspace Ultra High-Performance File System: AI architects and technologists can use their existing networks, storage hardware, and compute clusters while adding new infrastructure as their AI operations grow. Hammerspace consolidates the entire data pipeline into a single parallel global file system that brings existing infrastructure and data together with new datasets and resources. A parallel file system architecture is essential for AI training, where many processes or nodes must access the same data concurrently. Hammerspace provides efficient simultaneous data access, reduces workflow bottlenecks, and improves overall resource utilization across client servers, GPUs, networks, and storage nodes.

- Hammerspace Standards-Based Software Approach: Hammerspace’s parallel file system client is integrated into Linux as an NFS 4.2 client and builds on FlexFiles (the pNFS Flexible File layout), which Hammerspace contributed to the Linux ecosystem. As a result, standard Linux client servers get direct, high-performance access to data through Hammerspace’s software. Because the interface is a standard NAS interface, researchers and applications access data through the widely adopted NFS protocol and benefit from a large community that troubleshoots, improves, and maintains this standards-based environment.

- Hammerspace on Commodity Hardware: Hammerspace is a software-defined data platform compatible with any standards-based hardware, including white-box Linux servers, Open Compute Project (OCP) hardware, and Supermicro systems, among others. This flexibility lets organizations make the most of their existing hardware investments while scaling cost-effectively.

- Hammerspace Streamlined Data Pipelines: The Hammerspace architecture creates a unified, high-performance global data environment in which all phases of LLM training and inference workloads can run concurrently and continuously. Hammerspace breaks down data silos by accessing training data spread across data centers and cloud storage systems, regardless of vendor or location. Because training data is used where it resides, AI workflows need far less copying and movement of data into a consolidated repository, which reduces overhead and lowers the risk of introducing errors and inaccuracies into LLMs. At the application level, data is accessed through a standard NFS file interface, so applications read files directly in the form they already expect.

- Hammerspace High-Speed Data Path: Hammerspace minimizes network transmissions and data hops at every point in the data path. The company says this achieves nearly 100 percent utilization of available infrastructure, providing a high-bandwidth, low-latency path between applications, compute resources, and storage nodes. For details on the approach and its benefits, see the IEEE article “Overcoming Performance Bottlenecks With a Network File System in Solid State Drives” by David Flynn and Thomas Coughlin.

- Hammerspace Fault-Tolerant Design: LLM environments are large and complex, with extensive power and infrastructure requirements, and they often center on models that are continually updated with fresh data. Hammerspace is designed to maintain performance during system outages, so AI teams can keep work running through power, network, or system failures instead of concentrating on recovering from them.

- Hammerspace Objective-Based Data Placement: Hammerspace’s software decouples the file system layer from the storage layer, allowing I/O and IOPS to scale independently at the data layer. This lets ultra-high-performance NVMe storage coexist with lower-cost, lower-performance, and geographically dispersed storage tiers, including cloud resources, in one global data environment. Data is orchestrated between tiers and locations in the background according to objective-based policies. These software objectives drive automation that places data on the nodes providing the required performance while the data is in use. When data is inactive, it can stay on high-performance storage nodes or be moved automatically to a more cost-efficient location, reducing storage costs. The aim is for data to be available whenever it is needed, so GPUs and network capacity are fully utilized. Integrated machine learning (ML) capabilities further refine placement, moving related datasets to high-performance local NVMe storage as soon as the first file is accessed.
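The concurrent access pattern that the file-system component relies on, many workers reading the same data at once, can be illustrated generically. The following Python sketch is not Hammerspace code and uses no Hammerspace API; it assumes only a dataset file on a mounted POSIX file system (such as an NFS mount) and a Unix-like OS, since `os.pread` is not available on Windows. The helper names `byte_ranges`, `read_shard`, and `parallel_read` are hypothetical. Each worker reads a disjoint byte range with `os.pread`, which takes an explicit offset, so workers never contend for a shared file position.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor


def byte_ranges(size: int, workers: int) -> list[tuple[int, int]]:
    """Split [0, size) into `workers` contiguous (offset, length) ranges."""
    base, extra = divmod(size, workers)
    ranges, offset = [], 0
    for i in range(workers):
        length = base + (1 if i < extra else 0)  # spread the remainder
        ranges.append((offset, length))
        offset += length
    return ranges


def read_shard(path: str, offset: int, length: int) -> bytes:
    """Read one byte range; os.pread uses an explicit offset, not a shared cursor."""
    fd = os.open(path, os.O_RDONLY)
    try:
        chunks = []
        while length > 0:
            chunk = os.pread(fd, length, offset)
            if not chunk:  # unexpected end of file
                break
            chunks.append(chunk)
            offset += len(chunk)
            length -= len(chunk)
        return b"".join(chunks)
    finally:
        os.close(fd)


def parallel_read(path: str, workers: int = 4) -> bytes:
    """Read a whole file as `workers` concurrent byte-range reads."""
    size = os.path.getsize(path)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(read_shard, path, off, n)
                   for off, n in byte_ranges(size, workers)]
        # Reassemble shards in their original order.
        return b"".join(f.result() for f in futures)


if __name__ == "__main__":
    # Stand-in for a training shard on a shared mount (hypothetical data).
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(bytes(range(256)) * 1000)
    data = parallel_read(tmp.name, workers=8)
    print(len(data))  # 256000
    os.remove(tmp.name)
```

In a real training job the readers would typically be separate processes or nodes, each handling its own shard; threads simply keep the sketch self-contained.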
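Objective-based placement can be pictured as a set of rules that map each file’s access history to a storage tier. The sketch below is a hypothetical model for illustration only, not Hammerspace’s objective syntax or placement engine: the `Tier` values, the `FileInfo` record, the `dataset` grouping, and both time thresholds are assumptions. A simple grouping rule stands in for the ML-driven placement described above: once any file in a dataset has been accessed recently, the whole dataset is targeted for NVMe, while files idle past a longer threshold are demoted to a cloud tier.

```python
from dataclasses import dataclass
from enum import Enum

DAY = 86400.0  # seconds


class Tier(Enum):
    # Hypothetical storage tiers; real deployments define their own.
    NVME = "local-nvme"
    CAPACITY = "capacity"
    CLOUD = "cloud-object"


@dataclass
class FileInfo:
    path: str
    dataset: str        # assumed grouping of related files
    last_access: float  # seconds since the epoch
    tier: Tier          # current tier


def place(files: list[FileInfo], now: float,
          hot_seconds: float = 3600.0,
          idle_seconds: float = 30 * DAY) -> dict[str, Tier]:
    """Return a target tier per path under an assumed objective:
    - any dataset with a file accessed within `hot_seconds` goes to NVMe;
    - files idle for at least `idle_seconds` go to the cloud tier;
    - everything else stays where it is."""
    hot = {f.dataset for f in files if now - f.last_access < hot_seconds}
    targets: dict[str, Tier] = {}
    for f in files:
        if f.dataset in hot:
            targets[f.path] = Tier.NVME   # promote the whole related dataset
        elif now - f.last_access >= idle_seconds:
            targets[f.path] = Tier.CLOUD  # demote idle data to cheaper storage
        else:
            targets[f.path] = f.tier      # no objective applies; leave in place
    return targets


def plan_moves(files: list[FileInfo], now: float,
               **thresholds: float) -> list[tuple[str, Tier, Tier]]:
    """List (path, current tier, target tier) for files that must move."""
    targets = place(files, now, **thresholds)
    return [(f.path, f.tier, targets[f.path])
            for f in files if targets[f.path] != f.tier]


if __name__ == "__main__":
    now = 1_000_000_000.0
    files = [
        FileInfo("/data/a/0001", "a", now - 60, Tier.NVME),
        FileInfo("/data/a/0002", "a", now - 90 * DAY, Tier.CLOUD),
        FileInfo("/data/b/0001", "b", now - 3 * DAY, Tier.CAPACITY),
        FileInfo("/data/c/0001", "c", now - 45 * DAY, Tier.NVME),
    ]
    for path, src, dst in plan_moves(files, now):
        print(f"{path}: {src.value} -> {dst.value}")
```

Running it prints two planned moves: `/data/a/0002` is promoted because another file in its dataset was just read, and `/data/c/0001` is demoted after 45 idle days, while `/data/b/0001` stays on its current tier because neither rule applies.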

David Flynn, Founder and CEO of Hammerspace, emphasizes, “The most powerful AI initiatives will draw insights from data originating from diverse sources. A high-performance data environment is pivotal for the initial success of AI model training. More importantly, it enables the orchestration of data from multiple origins for continuous learning. Hammerspace has firmly established itself as the gold standard for AI architectures on a grand scale.”

Conclusion:

Hammerspace’s architecture marks a significant development for the AI market. It targets critical challenges in LLM training, including data access, scalability, and fault tolerance. By integrating existing infrastructure with new datasets and resources, it helps organizations realize more of AI’s potential. With it, Hammerspace aims to set a new standard for AI architectures at scale and to establish itself as a key player in the rapidly evolving AI market.