TL;DR:

- Tencent AI Lab introduces Chain-of-Noting (CON) to enhance the reliability of Retrieval-Augmented Language Models (RALMs).

- CON-equipped RALMs show significant improvements in open-domain QA, achieving higher Exact Match scores and out-of-scope question rejection rates.

- CON addresses limitations in RALMs by improving noise robustness and reducing dependence on retrieved documents.

- The framework generates sequential reading notes for retrieved documents, resulting in more accurate and contextually relevant responses.

- RALMs with CON outperform standard RALMs, with an average gain of +7.9 in EM score on highly noisy documents and +10.5 in rejection rate for questions beyond pre-training knowledge.

- CON balances direct retrieval, inferential reasoning, and knowledge gap acknowledgment, resembling human information processing.

- Its implementation involves designing reading notes, data collection, and model training.

- Future research may extend CON’s application to diverse domains, optimize retrieval strategies, and assess user satisfaction.

Main AI News:

Researchers at Tencent AI Lab have taken on a critical weakness of retrieval-augmented language models (RALMs). These models, while powerful, often fail to give reliable responses because the retrieval step tends to return irrelevant information. The proposed solution: CHAIN-OF-NOTING (CON).

CON is designed to make RALMs more reliable and robust. RALMs equipped with CON perform noticeably better, particularly in open-domain question answering, where they achieve substantial gains in Exact Match (EM) scores and in rejection rates for out-of-scope questions.

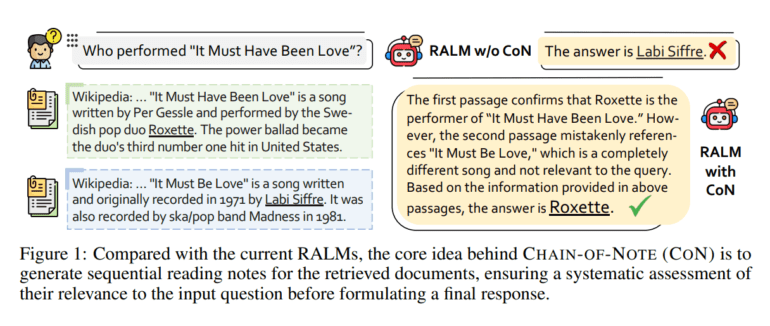

The research targets two limitations of RALMs: weak robustness to noise and over-reliance on retrieved documents. CON addresses both by generating sequential reading notes for the retrieved documents, so that the relevance of each document to the question is evaluated before an answer is produced. Case studies show that CON improves the model’s grasp of document relevance. This, in turn, yields more precise and contextually relevant responses, because extraneous or less trustworthy content is filtered out.
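To make the idea of sequential reading notes more concrete, here is a minimal sketch of how a prompt for this kind of note-taking might be assembled. The function name `build_con_prompt` and the instruction wording are assumptions made for illustration; they are not the prompt used by the Tencent AI Lab researchers.

```python
from typing import List


def build_con_prompt(question: str, documents: List[str]) -> str:
    """Assemble a prompt asking for one reading note per passage, then an answer.

    The instruction wording below is hypothetical, not taken from the paper.
    """
    lines = [
        "Task: read each retrieved passage in order and write a short note on",
        "whether and how it helps answer the question. After the last note,",
        "give a final answer, or reply 'unknown' if nothing is sufficient.",
        "",
        f"Question: {question}",
        "",
    ]
    # Number the passages so that each note can refer to its passage.
    for i, doc in enumerate(documents, start=1):
        lines.append(f"Passage {i}: {doc}")
    lines.append("")
    # Cue the model to start with the first note, or to answer directly
    # when nothing was retrieved.
    lines.append("Note 1:" if documents else "Final answer:")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_con_prompt(
        "Who wrote 'Dune'?",
        ["Dune is a 1965 novel by Frank Herbert.", "Dunes are hills of sand."],
    ))
```

The key design point is ordering: the notes come before the final answer, so the answer is conditioned on an explicit relevance judgment for every passage.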

CON not only outperforms standard RALMs but also strikes a balance between direct retrieval, inferential reasoning, and acknowledging knowledge gaps, closely mirroring how humans process information. Its implementation involves three steps: designing the reading notes, collecting training data, and training the model. Together, these steps address current limitations in RALMs and make their responses more reliable.

A key feature of CON is its ability to generate sequential reading notes for retrieved documents, which sets it apart from traditional RALMs. Built on an LLaMa-2 7B model and trained with ChatGPT-created data, CON performs especially well in high-noise scenarios. It sorts reading notes into three categories: direct answers, where a document contains the answer; useful context, where a document does not answer the question but supplies context the model can reason from; and unknown, where the documents are irrelevant and the model lacks the knowledge to answer. This gives the model a robust mechanism for assessing document relevance. Compared with LLaMa-2 without Information Retrieval (IR), a baseline method, CON’s advantage shows in its capacity to filter out irrelevant content, which improves response accuracy and contextual relevance.
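The three note categories can be sketched as a small data structure with a rule for choosing the final response. In CON the model makes this decision itself while generating text, so the explicit rule below is only an illustration; `NoteType`, `ReadingNote`, and `final_answer` are hypothetical names, not part of any released code.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class NoteType(Enum):
    DIRECT_ANSWER = "direct_answer"    # the document itself contains the answer
    USEFUL_CONTEXT = "useful_context"  # no answer, but context to reason from
    UNKNOWN = "unknown"                # irrelevant, and no knowledge to fall back on


@dataclass
class ReadingNote:
    doc_index: int
    note_type: NoteType
    candidate_answer: Optional[str] = None  # answer suggested by this note, if any


def final_answer(notes: List[ReadingNote]) -> str:
    """Prefer direct answers, then inferred ones, else acknowledge the gap."""
    for preferred in (NoteType.DIRECT_ANSWER, NoteType.USEFUL_CONTEXT):
        for note in notes:
            if note.note_type is preferred and note.candidate_answer:
                return note.candidate_answer
    return "unknown"


if __name__ == "__main__":
    notes = [
        ReadingNote(1, NoteType.UNKNOWN),
        ReadingNote(2, NoteType.USEFUL_CONTEXT, "Frank Herbert"),
    ]
    print(final_answer(notes))
```

Returning the literal string "unknown" when no note is usable corresponds to the third category: the system declines to answer rather than guessing.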

The measured gains are substantial. RALMs equipped with CON achieve an average increase of +7.9 in EM score when the retrieved documents are highly noisy, and a +10.5 improvement in rejection rate for real-time questions that fall beyond the model’s pre-training knowledge. The evaluation metrics for open-domain QA are EM score, F1 score, and reject rate. The case studies show how CON helps RALMs cope with noisy and irrelevant documents and improves overall robustness.
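For readers unfamiliar with these metrics, the sketch below shows one common way to compute them. It assumes SQuAD-style answer normalization (lowercasing, removing punctuation and English articles) and assumes that a rejection is signalled by the literal answer "unknown"; the exact evaluation setup used by the researchers may differ.

```python
import re
import string
from collections import Counter
from typing import List


def normalize(text: str) -> str:
    """Lowercase, drop punctuation and articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(gold))


def f1_score(prediction: str, gold: str) -> float:
    """Token-level F1 between the normalized prediction and gold answer."""
    pred_tokens = normalize(prediction).split()
    gold_tokens = normalize(gold).split()
    if not pred_tokens or not gold_tokens:
        return float(pred_tokens == gold_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


def reject_rate(predictions: List[str], reject_token: str = "unknown") -> float:
    """Fraction of predictions that decline to answer (assumed marker: 'unknown')."""
    if not predictions:
        return 0.0
    rejected = sum(normalize(p) == reject_token for p in predictions)
    return rejected / len(predictions)


if __name__ == "__main__":
    print(exact_match("The Eiffel Tower.", "eiffel tower"))
    print(f1_score("Paris France", "Paris"))
    print(reject_rate(["unknown", "Paris", "Unknown."]))
```

EM and F1 are averaged over questions that have a gold answer, while reject rate is only meaningful on questions the model should decline, such as those outside its pre-training knowledge.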

The CON framework is a significant step forward for RALMs. By generating sequential reading notes for retrieved documents and integrating this information into the final answer, RALMs equipped with CON consistently outperform their traditional counterparts. The approach tackles the limitations that have hindered standard RALMs, helping the model make better use of relevant information and improving performance across various open-domain QA benchmarks.

Looking ahead, the CON framework has many potential applications. Future research may explore its adaptability to diverse domains and tasks, assessing how well it generalizes and how effectively it strengthens RALMs. Further work on retrieval strategies and document ranking methods could optimize the retrieval process and improve the relevance of retrieved documents. User studies evaluating the usability of RALMs equipped with CON in real-world scenarios, and user satisfaction with them, will be important for assessing response quality and trustworthiness. Lastly, combining CON with additional external knowledge sources and with techniques such as pre-training or fine-tuning could further improve RALM performance and adaptability.

Conclusion:

Tencent AI Lab’s CON framework represents a significant advancement in the field of Retrieval-Augmented Language Models (RALMs). It enhances the reliability and relevance of RALM responses and could support broader adoption of these models across industries. Businesses and organizations that use AI-powered language models for customer interactions, content generation, and information retrieval should closely monitor the development and implementation of CON, as it promises more accurate and context-aware AI-driven applications, and ultimately higher user satisfaction and trust.