TL;DR:

- Alibaba’s Qwen-Audio Series introduces large-scale audio-language models.

- Qwen-Audio offers universal audio understanding without task-specific fine-tuning.

- Qwen-Audio-Chat extends capabilities to support multi-turn dialogues and diverse audio scenarios.

- The model handles diverse audio types, including speech, natural sounds, music, and songs.

- Qwen-Audio bridges the gap between LLMs and audio comprehension with a multi-task framework.

- It outperforms baselines on various challenging audio tasks.

- The series showcases impressive results, setting new standards in audio understanding.

Main AI News:

Alibaba Group’s research team is making waves in the world of large-scale audio-language models with their latest innovation – the Qwen-Audio Series. In a move that addresses the longstanding challenge of limited pre-trained audio models for diverse tasks, this development promises to revolutionize the field.

The Qwen-Audio Series introduces a groundbreaking hierarchical tag-based multi-task framework designed to eliminate interference issues that have plagued previous models during co-training. What truly sets Qwen-Audio apart is its ability to achieve remarkable performance across benchmark tasks without the need for task-specific fine-tuning.

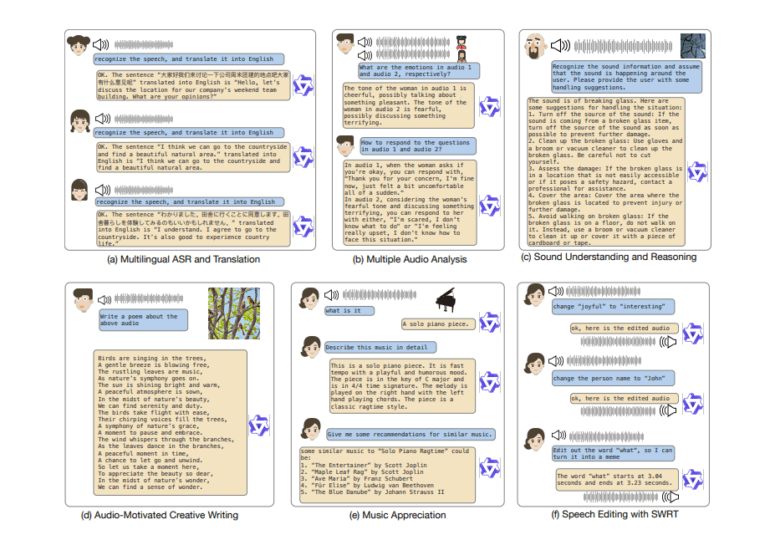

Qwen-Audio-Chat, an extension built upon the foundation of Qwen-Audio, takes it a step further by supporting multi-turn dialogues and a wide range of audio-central scenarios. This showcases its prowess in universal audio understanding, making it a game-changer in the industry.

One of the key strengths of Qwen-Audio lies in its ability to handle diverse audio types and tasks, surpassing prior works that focused solely on speech. Qwen-Audio incorporates human speech, natural sounds, music, and songs, allowing for co-training on datasets with varying granularities. The model excels in speech perception and recognition tasks without the need for task-specific modifications. Qwen-Audio-Chat extends these capabilities to align seamlessly with human intent, supporting multilingual, multi-turn dialogues from both audio and text inputs. It’s a testament to the comprehensive audio understanding that this model offers.

While Large Language Models (LLMs) excel in general artificial intelligence, they have often fallen short in audio comprehension. Qwen-Audio steps in to bridge this gap by scaling pre-training to cover 30 tasks and a wide spectrum of audio types. A multi-task framework further mitigates interference, enabling knowledge sharing and resulting in impressive performance across benchmarks without task-specific fine-tuning. Qwen-Audio-Chat, as an extension, offers support for multi-turn dialogues and diverse audio-centric scenarios, showcasing its comprehensive audio interaction capabilities within LLMs.

In essence, Qwen-Audio and Qwen-Audio-Chat represent a significant leap in the domain of universal audio understanding and flexible human interaction. Qwen-Audio adopts a multi-task pre-training approach, optimizing the audio encoder while freezing language model weights. Conversely, Qwen-Audio-Chat employs supervised fine-tuning, optimizing the language model while preserving audio encoder weights. This dynamic training process, encompassing both multi-task pre-training and supervised fine-tuning, results in models that are adaptable and exhibit a comprehensive audio understanding.

The performance of Qwen-Audio is nothing short of remarkable. It consistently outperforms baselines by a substantial margin on various challenging tasks, such as AAC, SWRT ASC, SER, AQA, VSC, and MNA. Moreover, it establishes state-of-the-art results on CochlScene, ClothoAQA, and VocalSound, showcasing its robust audio understanding capabilities. Qwen-Audio’s superior performance in diverse analyses underlines its effectiveness and competence in achieving state-of-the-art results in complex audio tasks.

The Qwen-Audio Series introduces large-scale audio-language models with universal understanding across diverse audio types and tasks. Developed through a multi-task training framework, these models facilitate knowledge sharing and overcome interference challenges presented by varying textual labels in different datasets. Achieving impressive performance across benchmarks without task-specific fine-tuning, Qwen-Audio stands as a beacon of progress, surpassing previous efforts. Qwen-Audio-Chat extends these capabilities, enabling multi-turn dialogues and supporting diverse audio scenarios, showcasing robust alignment with human intent and facilitating multilingual interactions.

The future of Qwen-Audio holds even greater promise as it explores expanding capabilities for different audio types, languages, and specific tasks. Refining the multi-task framework or exploring alternative knowledge-sharing approaches could address interference issues in co-training. Investigating task-specific fine-tuning has the potential to further enhance performance. Continuous updates, driven by new benchmarks, datasets, and user feedback, are aimed at improving universal audio understanding. Qwen-Audio-Chat is also in the process of refinement to align seamlessly with human intent, support multilingual interactions, and enable dynamic multi-turn dialogues. Stay tuned for the exciting developments in the world of audio-language models brought to you by Alibaba’s Qwen-Audio Series.

Conclusion:

Alibaba’s Qwen-Audio Series represents a significant advancement in the market, offering universal audio understanding without the need for task-specific fine-tuning. Its ability to handle diverse audio types and excel in challenging tasks positions it as a game-changer, bridging the gap between Large Language Models and audio comprehension. This innovation is poised to have a transformative impact on industries reliant on audio data, from customer service and voice assistants to content generation and audio analysis.