TL;DR:

- Generative models like GANs can create 3D avatars from 2D images.

- Demand for high-quality, versatile 3D avatars is growing.

- Many existing methods overlook texture and rely on costly 3D scans for training.

- The new method achieves state-of-the-art quality and models loose clothing.

- A unified design, multiple discriminators, and a novel generator improve the results.

Main AI News:

In the fast-moving field of artificial intelligence, generative models have taken center stage. Among these, Generative Adversarial Networks (GANs) stand out for their ability to synthesize lifelike 2D images of objects and people. Demand is now growing for diverse, high-quality virtual 3D avatars. Such avatars must be controllable in both pose and camera viewpoint while remaining 3D-consistent.

To meet this need, the research community has been developing generative models that automatically create 3D representations of humans and their clothing from input parameters such as body pose and shape. Yet, despite significant strides, many existing methods have a blind spot: they often overlook texture and rely on clean 3D scans of humans for training. The problem? Such scans are expensive to acquire, which severely limits their availability and diversity.

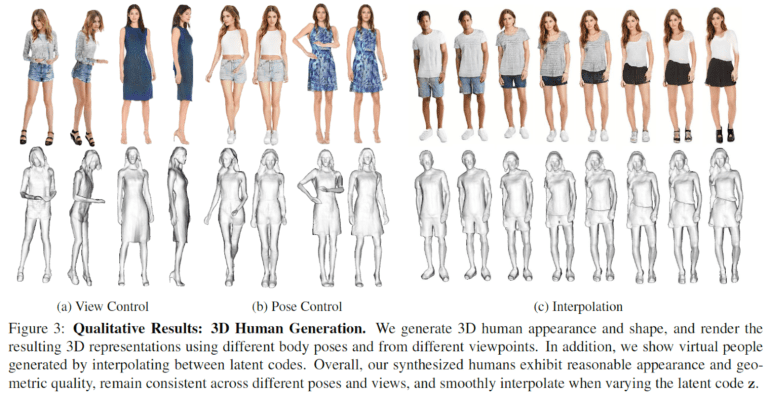

Learning to generate 3D human shapes and textures from unstructured image data is difficult. Each training image shows a different shape and appearance, observed from a single viewpoint and in a single pose. While recent 3D-aware GANs have yielded remarkable results for rigid objects, they struggle with the intricate articulation of the human body. Some recent work has shown promise in learning articulated humans, but it still falls short on quality and resolution and has difficulty modeling loose clothing.

The authors introduce a new approach to 3D human generation from 2D image collections. They report state-of-the-art image and geometry quality, and the method also handles loose clothing.

Their method adopts a unified design that can model both the human body and its attire, departing from the traditional approach of representing humans with separate body parts. Multiple discriminators are brought into the fold to enrich geometric details and focus on the regions that matter most to human perception.
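To make the multiple-discriminator idea concrete, here is a minimal sketch of how a generator objective could combine feedback from several discriminators. It is an illustration under stated assumptions, not the authors' training code: the discriminator names (`full_image`, `normal_map`, `region_crop`), the weights, and the choice of the standard non-saturating GAN loss are all hypothetical.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def generator_loss(logits_per_discriminator, weights):
    """Weighted sum of non-saturating generator losses.

    logits_per_discriminator maps a (hypothetical) discriminator name to the
    raw scores it assigned to a batch of generated samples. Higher scores mean
    "looks real", so the generator minimizes softplus(-score).
    """
    total = 0.0
    for name, logits in logits_per_discriminator.items():
        mean_loss = sum(softplus(-z) for z in logits) / len(logits)
        total += weights[name] * mean_loss
    return total


# Hypothetical scores from three discriminators for a batch of two samples.
scores = {
    "full_image": [0.3, -1.2],   # judges the whole rendered image
    "normal_map": [0.8, 0.1],    # judges rendered surface normals
    "region_crop": [-0.5, 0.4],  # judges a perceptually important crop
}
weights = {"full_image": 1.0, "normal_map": 0.5, "region_crop": 0.5}
loss = generator_loss(scores, weights)
```

Each discriminator contributes its own term, so the weights decide how much the generator is pushed toward better overall images versus better geometry or better local detail.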

A novel generator design offers high image quality and the flexibility to handle loose clothing, while modeling the 3D human as a whole in a canonical space. Articulation is handled by the Fast-SNARF module, which the authors adapt to the generative setting to deform the canonical human into the target pose. The model also uses empty-space skipping, which speeds up rendering by not spending computation on regions that contain no significant content.
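The idea of a canonical space plus an articulation step can be illustrated with plain linear blend skinning, the standard way to move a point from a rest pose into a target pose. The sketch below shows only this forward direction and is not Fast-SNARF itself; the helper names, the two-bone setup, and the hand-picked weights are assumptions made for illustration.

```python
import math


def rigid_transform_z(angle_rad, translation):
    """3x4 rigid transform: rotation about the z axis, then a translation."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    tx, ty, tz = translation
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz]]


def apply_transform(transform, point):
    """Apply a 3x4 transform to a 3D point."""
    x, y, z = point
    return [row[0] * x + row[1] * y + row[2] * z + row[3] for row in transform]


def forward_skinning(p_canonical, skin_weights, bone_transforms):
    """Linear blend skinning: posed = sum_i w_i * (T_i applied to p_canonical)."""
    posed = [0.0, 0.0, 0.0]
    for w, transform in zip(skin_weights, bone_transforms):
        moved = apply_transform(transform, p_canonical)
        posed = [a + w * b for a, b in zip(posed, moved)]
    return posed


# Two hypothetical bones: one stays put, one rotates 90 degrees about z.
bones = [
    rigid_transform_z(0.0, (0.0, 0.0, 0.0)),
    rigid_transform_z(math.pi / 2, (0.0, 0.0, 0.0)),
]
# A canonical point influenced equally by both bones.
posed_point = forward_skinning([1.0, 0.0, 0.0], [0.5, 0.5], bones)
# posed_point is approximately [0.5, 0.5, 0.0]
```

In a learned model the skinning weights would be predicted rather than hand-picked, and rendering needs the reverse mapping from a posed point back to canonical space, which is the harder problem an articulation module has to solve.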

Their modular 2D discriminators are guided by normal information, allowing them to consider the directionality of surfaces in 3D space. This guidance enables the model to concentrate on the regions that hold the greatest perceptual significance for human observers, ultimately resulting in a more accurate and visually pleasing outcome. Furthermore, these discriminators prioritize geometric minutiae, elevating the overall quality of the generated images. This, the authors believe, is the key to achieving a more realistic and visually captivating representation of 3D human models.
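As a rough illustration of what "normal information" means, the sketch below estimates a surface normal as the normalized gradient of a signed distance function, using central differences. The article does not say how the authors obtain normals, so the signed-distance representation, the function names, and the unit-sphere test shape are all assumptions.

```python
import math


def sphere_sdf(point, radius=1.0):
    """Signed distance from a point to a sphere centered at the origin."""
    return math.sqrt(sum(c * c for c in point)) - radius


def surface_normal(sdf, point, eps=1e-4):
    """Estimate the unit normal at a point as the normalized SDF gradient."""
    gradient = []
    for axis in range(3):
        forward = list(point)
        backward = list(point)
        forward[axis] += eps
        backward[axis] -= eps
        gradient.append((sdf(forward) - sdf(backward)) / (2.0 * eps))
    length = math.sqrt(sum(g * g for g in gradient))
    if length == 0.0:
        # The gradient vanishes (e.g. at the sphere center): no defined normal.
        return [0.0, 0.0, 0.0]
    return [g / length for g in gradient]


# On the unit sphere the normal points straight out from the center.
normal_at_pole = surface_normal(sphere_sdf, [0.0, 0.0, 1.0])
# normal_at_pole is approximately [0.0, 0.0, 1.0]
```

Rendering such normals into a 2D map gives a discriminator an image that depends only on geometry, not on texture or color, which is one way a 2D network can be made sensitive to surface orientation.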

Conclusion:

A technique for generating 3D avatars from 2D images opens up opportunities in industries such as gaming, virtual fashion, and virtual reality. It addresses the market’s demand for high-quality, customizable 3D avatars by reducing the reliance on expensive 3D scans and improving the quality of generated models. If the reported results hold up in practice, it could change the way we interact with virtual environments and characters.