TL;DR:

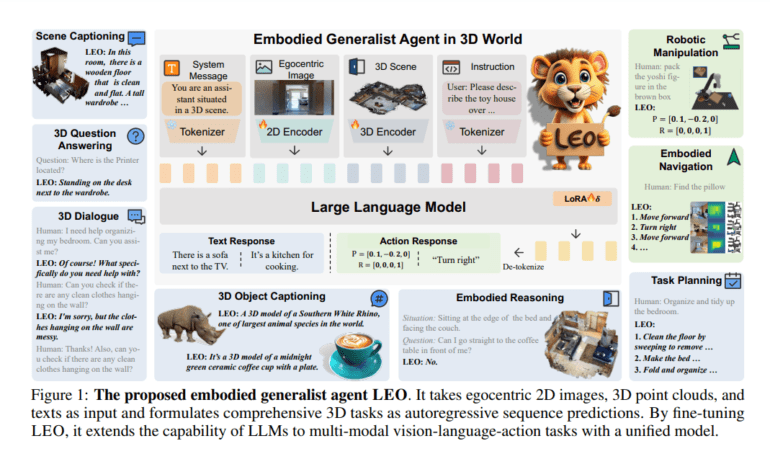

- LEO is a cutting-edge, multi-modal AI agent designed for versatile 3D world interaction.

- Generalist agents like LEO excel in complex 3D environments, offering adaptability and robust problem-solving skills.

- LEO employs techniques from reinforcement learning, computer vision, and spatial reasoning for effective navigation and interaction.

- Developed by a collaborative team from top institutions, LEO leverages LLM-based architecture for generically embodied capabilities.

- LEO’s autoregressive training objectives and 3D encoder facilitate task-agnostic training and flexible adaptation to various tasks.

- Extensive dataset creation and innovative methodologies like scene-graph-based prompting enhance LEO’s performance.

- The evaluation shows LEO’s proficiency in tasks like embodied navigation and robotic manipulation, with performance gains from scaling up training data.

- LEO bridges the gap between 3D vision language and embodied movement, showcasing the potential of joint learning.

Main AI News:

In the realm of artificial intelligence, the emergence of generalist agents capable of seamlessly navigating multiple domains without the need for extensive reprogramming or retraining has been nothing short of a game-changer. These versatile agents are designed to transcend boundaries, wielding a remarkable ability to harness knowledge and skills across diverse domains, demonstrating remarkable flexibility and adaptability in tackling a wide array of challenges. Within the realm of training and research simulations, especially those set in complex 3D environments, these generalist agents have proven to be invaluable.

Consider, for instance, the training simulations tailored for pilots or surgeons. Here, these agents serve as invaluable tools, capable of faithfully replicating a myriad of scenarios and responding with precision and agility. Their role in facilitating immersive training experiences cannot be overstated.

Yet, as impressive as these generalist agents are, they face formidable challenges when it comes to operating within the intricate landscapes of three-dimensional spaces. Their journey entails learning robust representations that can generalize seamlessly across the ever-diverse 3D environments and making calculated decisions that take into account the multifaceted nature of their surroundings. To navigate this terrain, these agents often rely on a potent blend of techniques sourced from reinforcement learning, computer vision, and spatial reasoning, enabling them to navigate and interact with remarkable effectiveness within these complex 3D worlds.

Enter LEO, a groundbreaking creation born from the collaborative efforts of researchers at the Beijing Institute for General Artificial Intelligence, CMU, Peking University, and Tsinghua University. LEO represents a paradigm shift in the world of AI, harnessing the power of LLM-based architecture to create a generically embodied, multi-modal, and multitasking agent.

At its core, LEO possesses the remarkable ability to perceive, ground, reason, plan, and act, all underpinned by shared model architectures and weights. This multifaceted agent perceives the world through an egocentric 2D image encoder, providing it with an embodied view and an object-centric 3D point cloud encoder, granting it a third-person global perspective.

LEO’s versatility extends further through its autoregressive training objectives, enabling it to be trained with task-agnostic inputs and outputs. The 3D encoder is instrumental in generating object-centric tokens for each observed entity, offering unparalleled flexibility across a wide spectrum of tasks.

Fundamentally, LEO is founded upon the bedrock principles of 3D vision-language alignment and 3D vision-language-action integration. To equip LEO with the necessary training data, the research team meticulously curated and generated an extensive dataset, encompassing object-level and scene-level multi-modal tasks of unprecedented scale and complexity. This demanding dataset required a deep understanding of and interaction with the 3D world, pushing the boundaries of AI research.

The team’s innovation doesn’t stop there; they have introduced scene-graph-based prompting and refinement methods, alongside the Object-centric Chain-of-Thought (O-CoT) approach, to elevate the quality of generated data. These methodologies significantly enrich the dataset’s scale and diversity, while also effectively eliminating the potential for hallucination in LLMs.

The thorough evaluation of LEO underscores its prowess, with the agent excelling in diverse tasks such as embodied navigation and robotic manipulation. Notably, the team observed consistent performance enhancements with the simple act of scaling up the training data.

The results speak for themselves: LEO’s responses are imbued with rich and informative spatial relations, firmly grounded in the intricate tapestry of 3D scenes. LEO effortlessly identifies concrete objects within its surroundings, discerning the precise actions associated with these objects. In essence, LEO serves as a bridge between 3D vision language and embodied movement, as the research team’s findings underscore the feasibility of joint learning in this transformative field.

Conclusion:

LEO’s emergence represents a significant leap in AI capabilities, particularly in the realm of embodied multi-modal agents. Its adaptability, robustness, and versatility hold immense promise for industries, training simulations, and beyond, making it a potential game-changer in the market for AI-driven solutions in complex 3D environments.