TL;DR:

- AWS and NVIDIA expand their strategic partnership for generative AI.

- The collaboration covers new infrastructure, software, and services for generative AI workloads.

- Key initiatives include NVIDIA GH200 Superchips in the cloud and DGX Cloud on AWS.

- Project Ceiba aims to build the world’s fastest GPU-powered AI supercomputer.

- AWS will introduce three new Amazon EC2 instance types (P5e, G6, and G6e) powered by NVIDIA GPUs.

- The partnership enhances AWS’s position as a GPU computing powerhouse.

- This collaboration accelerates the adoption and development of generative AI across industries.

Main AI News:

Amazon Web Services, Inc. (AWS), a subsidiary of Amazon.com, Inc., and NVIDIA have announced an expanded strategic partnership to deliver new infrastructure, software, and services for generative artificial intelligence (AI). The collaboration builds on a long-standing relationship that helped drive the generative AI era by giving early machine learning (ML) pioneers the compute performance they needed to advance the state of the art.

The partnership includes several key initiatives:

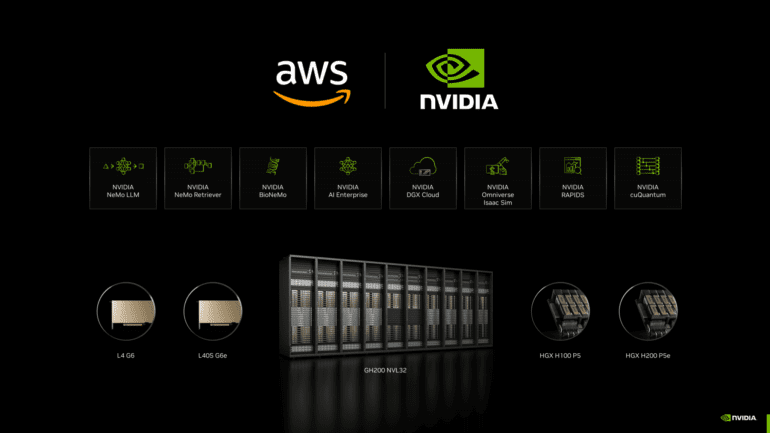

- NVIDIA GH200 Grace Hopper Superchips in the Cloud: AWS will be the first cloud provider to offer NVIDIA GH200 Grace Hopper Superchips with new multi-node NVLink technology. The GH200 NVL32 platform connects 32 Grace Hopper Superchips with NVIDIA NVLink and NVSwitch technologies into a single instance, available through Amazon Elastic Compute Cloud (Amazon EC2). Backed by Amazon’s Elastic Fabric Adapter (EFA) networking, advanced virtualization (AWS Nitro System), and hyper-scale clustering (Amazon EC2 UltraClusters), customers will be able to scale to thousands of GH200 Superchips.

- NVIDIA DGX™ Cloud on AWS: NVIDIA DGX™ Cloud, NVIDIA’s AI-training-as-a-service offering, will be hosted on AWS. It will be the first DGX Cloud to feature GH200 NVL32, giving developers access to the largest shared memory in a single instance. DGX Cloud on AWS is intended to accelerate the training of cutting-edge generative AI models, including models that exceed 1 trillion parameters.

- Project Ceiba: AWS and NVIDIA are collaborating to design the world’s fastest GPU-powered AI supercomputer. The system, which uses GH200 NVL32 and Amazon EFA interconnect, will be hosted by AWS for NVIDIA’s own research and development team. With 16,384 NVIDIA GH200 Superchips, it will be capable of processing 65 exaflops of AI, and NVIDIA plans to use it to drive its next wave of generative AI innovation.

- New Amazon EC2 Instances: AWS will introduce three new Amazon EC2 instances for AI and HPC. P5e instances, powered by NVIDIA H200 Tensor Core GPUs, target large-scale generative AI and HPC workloads. G6 and G6e instances, powered by NVIDIA L4 GPUs and NVIDIA L40S GPUs, respectively, cover a wide range of applications, including AI fine-tuning, inference, graphics, and video workloads. G6e instances are especially suitable for developing 3D workflows and digital twins with NVIDIA Omniverse™, a platform for building generative AI-enabled 3D applications.
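The figures quoted for GH200 NVL32 and Project Ceiba can be cross-checked with simple arithmetic. The following is a minimal Python sketch, assuming that every Ceiba superchip belongs to a full 32-chip NVL32 group and that the 65 exaflops figure is spread evenly across all superchips. Neither assumption is stated in the announcement, and the function and constant names are illustrative only.

```python
# Figures quoted in the article.
SUPERCHIPS_PER_NVL32 = 32    # Grace Hopper Superchips per GH200 NVL32 instance
CEIBA_SUPERCHIPS = 16_384    # GH200 Superchips in Project Ceiba
CEIBA_AI_EXAFLOPS = 65       # quoted AI performance of Project Ceiba


def nvl32_groups(total_superchips: int, per_group: int = SUPERCHIPS_PER_NVL32) -> int:
    """Number of NVL32 groups, assuming all chips sit in full groups (an assumption)."""
    if total_superchips % per_group != 0:
        raise ValueError("superchip count is not a multiple of the group size")
    return total_superchips // per_group


def petaflops_per_superchip(total_exaflops: float, total_superchips: int) -> float:
    """Average AI petaflops per superchip, assuming an even split (an assumption)."""
    return total_exaflops * 1000 / total_superchips  # 1 exaflop = 1000 petaflops


groups = nvl32_groups(CEIBA_SUPERCHIPS)
per_chip = petaflops_per_superchip(CEIBA_AI_EXAFLOPS, CEIBA_SUPERCHIPS)

print(f"{groups} NVL32 groups")                          # 512 NVL32 groups
print(f"{per_chip:.2f} petaflops of AI per superchip")   # 3.97 petaflops of AI per superchip
```

The announcement does not say which numeric precision the 65 exaflops figure refers to, so the per-superchip number is only a rough consistency check, not a benchmark.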

Both companies framed the alliance as a commitment to deliver state-of-the-art generative AI to a wide range of industries. Adam Selipsky, CEO at AWS, said, “We continue to innovate with NVIDIA to make AWS the best place to run GPUs, combining next-gen NVIDIA Grace Hopper Superchips with AWS’s EFA powerful networking, EC2 UltraClusters’ hyper-scale clustering, and Nitro’s advanced virtualization capabilities.”

Jensen Huang, founder and CEO of NVIDIA, echoes this sentiment, stating, “Generative AI is transforming cloud workloads and putting accelerated computing at the foundation of diverse content generation. Driven by a common mission to deliver cost-effective state-of-the-art generative AI to every customer, NVIDIA and AWS are collaborating across the entire computing stack, spanning AI infrastructure, acceleration libraries, foundation models, to generative AI services.”

The collaboration between AWS and NVIDIA targets generative AI, HPC, design, and simulation workloads, and aims to help businesses and researchers push the boundaries of what’s possible with AI.

Conclusion:

The expanded collaboration between AWS and NVIDIA strengthens AWS’s position as a leading provider of GPU computing and gives businesses across industries new options for putting generative AI to work. If the announced systems are delivered as described, the partnership could accelerate AI research, enable new applications, and reshape competitive dynamics in the AI market.