TL;DR:

- Lund University and Halmstad University study the integration of AI and satellite imagery for poverty assessment.

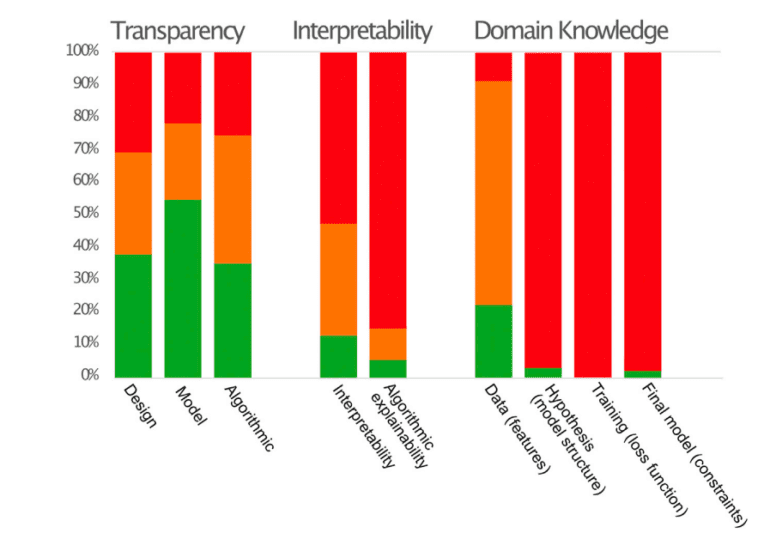

- Transparency, interpretability, and domain knowledge are key factors in explainable machine learning.

- An analysis of 32 poverty-prediction papers finds these elements present to varying degrees.

- The current state of the field falls short of the scientific requirements for understanding poverty and welfare.

- The review emphasizes explainability as key to broader acceptance in the development community.

Main AI News:

Recent research led by experts from Lund University and Halmstad University examines explainable AI in poverty estimation, an area that combines satellite imagery with deep machine learning. Focusing on transparency, interpretability, and domain knowledge, the researchers reviewed 32 scholarly papers. They found that these facets of explainable machine learning are handled with varying degrees of rigor, and that the field as a whole falls short of the scientific demands of understanding poverty and welfare.

The study analyzes 32 papers on poverty and wealth prediction that use survey data as their ground truth, cover urban and rural settings, and rely on deep neural networks. It argues that the current state of the field does not meet the scientific prerequisites for understanding the complexities of poverty and welfare, and it stresses that explainability is essential for broader acceptance and dissemination within the global development community.

The paper's introduction lays out the challenges of identifying vulnerable communities and understanding the determinants of poverty, including information gaps and the inherent limitations of household surveys. It then turns to the potential of deep machine learning and satellite imagery to help close those gaps, while stressing that explainability, transparency, interpretability, and domain knowledge are cornerstones of the scientific process. The authors evaluate the current status of explainable machine learning in studies that predict poverty and wealth from survey data, satellite imagery, and deep neural networks, with the aim of supporting broader acceptance and dissemination of these methods within the global development community.

Through an integrative literature review, the authors examined 32 studies that met specific inclusion criteria: each predicts poverty or wealth, uses survey data, incorporates satellite imagery, and employs deep neural networks. The review pays particular attention to attribution maps, which are used to reveal what deep-learning imaging models respond to, and assesses the properties of these models with respect to interpretability. Its overarching objective is to give an overview of how explainability is handled in the reviewed literature and to gauge how much that work can contribute to knowledge about poverty.
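An attribution map assigns each region of an input image a score for how strongly it influences a model's prediction. As a rough illustration only, the sketch below computes an occlusion-based attribution map, one of several attribution techniques and not necessarily one used in the reviewed papers. The "wealth model", the 4x4 grid standing in for a satellite tile, and the patch size are all hypothetical, invented for this example.

```python
from typing import Callable, List

Grid = List[List[float]]


def toy_wealth_model(img: Grid) -> float:
    """Hypothetical stand-in for a trained CNN: scores a tile by the mean
    brightness of its upper-left quadrant (imagine night-time lights)."""
    h, w = len(img), len(img[0])
    region = [img[r][c] for r in range(h // 2) for c in range(w // 2)]
    return sum(region) / len(region)


def occlusion_map(model: Callable[[Grid], float], img: Grid,
                  patch: int = 1, baseline: float = 0.0) -> Grid:
    """Occlusion sensitivity: blank out each patch and record how much the
    prediction drops. Larger values mark regions the model relies on."""
    h, w = len(img), len(img[0])
    reference = model(img)
    attribution = [[0.0] * w for _ in range(h)]
    for r0 in range(0, h, patch):
        for c0 in range(0, w, patch):
            occluded = [row[:] for row in img]  # copy so the input is unchanged
            for r in range(r0, min(r0 + patch, h)):
                for c in range(c0, min(c0 + patch, w)):
                    occluded[r][c] = baseline
            drop = reference - model(occluded)
            # Every pixel in the occluded patch receives the same score.
            for r in range(r0, min(r0 + patch, h)):
                for c in range(c0, min(c0 + patch, w)):
                    attribution[r][c] = drop
    return attribution


# Hypothetical 4x4 "satellite tile" with bright pixels in the upper-left.
tile = [
    [0.9, 0.8, 0.1, 0.0],
    [0.7, 0.6, 0.0, 0.2],
    [0.1, 0.0, 0.3, 0.1],
    [0.0, 0.2, 0.1, 0.0],
]
for row in occlusion_map(toy_wealth_model, tile, patch=2):
    print([round(v, 3) for v in row])
```

Because the toy model only looks at the upper-left quadrant, only that patch receives a nonzero score. With a real deep network the map would be noisier, which is one reason the review treats attribution maps as a starting point for interpretation rather than an explanation in themselves.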

The review finds that the core elements of explainable machine learning (transparency, interpretability, and domain knowledge) show a mix of strengths and weaknesses. Interpretability and explainability are the weakest areas: few papers make any effort to interpret their models or to explain the data behind their predictions. Domain knowledge is common in feature-based models, where it guides feature selection, but it is sparse in other parts of the modeling process. Some experimental results are nonetheless informative, for example about the limitations of wealth indices and the impact of low-resolution satellite images. One paper stands out for its strong hypothesis and its clear endorsement of the role of domain knowledge.

At the intersection of poverty research, machine learning, and satellite imagery, the status of transparency, interpretability, and domain knowledge varies widely across explainable machine learning approaches. Explainability, which the authors consider essential for wide acceptance within the development community, goes beyond interpretability alone. Transparency is uneven: some papers document their methods carefully, while others raise reproducibility concerns. Interpretability and explainability remain weak, since only a handful of researchers interpret their models or explain the data behind their predictions. Domain knowledge, though common in feature selection for feature-based models, is rarely applied elsewhere in the modeling process. Looking ahead, the authors identify sorting and ranking impact features as a promising direction for future research.
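One common way to rank features by their impact on a model is permutation importance: shuffle one feature's values, measure how much the prediction error rises, and sort the features by that increase. The sketch below is a minimal illustration under stated assumptions. Permutation importance is a swapped-in, widely used technique, not necessarily what the authors propose, and the linear "wealth model", the feature names, and the synthetic data are all hypothetical.

```python
import random
from typing import Dict, List, Sequence

# Hypothetical feature names, invented for this illustration.
FEATURES = ["night_lights", "road_density", "cloud_cover"]


def model(row: Sequence[float]) -> float:
    """Hypothetical fitted wealth model: strong weight on lights,
    weak weight on roads, no weight on cloud cover."""
    return 2.0 * row[0] + 0.5 * row[1] + 0.0 * row[2]


def mse(rows: List[List[float]], targets: List[float]) -> float:
    """Mean squared error of the model over a dataset."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows: List[List[float]], targets: List[float],
                           n_repeats: int = 20, seed: int = 0) -> Dict[str, float]:
    """Average increase in MSE when each feature column is shuffled."""
    rng = random.Random(seed)
    base = mse(rows, targets)
    scores: Dict[str, float] = {}
    for j, name in enumerate(FEATURES):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Replace column j with its shuffled values, keep the rest intact.
            permuted = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mse(permuted, targets) - base)
        scores[name] = sum(increases) / n_repeats
    return scores


data_rng = random.Random(42)
rows = [[data_rng.random() for _ in FEATURES] for _ in range(200)]
targets = [model(r) for r in rows]  # noiseless synthetic targets
ranking = sorted(permutation_importance(rows, targets).items(),
                 key=lambda kv: kv[1], reverse=True)
for name, score in ranking:
    print(f"{name:13s} {score:.4f}")
```

In this toy setup the ranking simply recovers the weights built into the model. With real survey and satellite data, domain knowledge would be needed to judge whether a highly ranked feature reflects a genuine driver of welfare or a spurious correlate.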

Conclusion:

This review of satellite imagery and deep learning in poverty assessment highlights the importance of transparency, interpretability, and domain knowledge in explainable AI. Its analysis of 32 scholarly papers shows that further advances are needed before poverty prediction meets scientific requirements. The research also signals an opportunity for businesses and organizations to invest in solutions that make AI models more transparent and interpretable, ultimately contributing to more effective assessments of poverty and welfare.