TL;DR:

- MIT and Meta AI collaborate to introduce a groundbreaking object reorientation controller.

- The controller uses a single depth camera to reorient diverse objects in real time.

- Existing methods are held back by a narrow focus on specific objects, limited range, slow manipulation, and reliance on costly sensors.

- The student vision policy network shows minimal generalization gaps across datasets.

- Reinforcement learning is employed to train the controller in simulation, achieving zero-shot transfer to the real world.

- Rigorous testing demonstrates the system’s effectiveness in reorienting 150 objects in real time.

- Future research may explore shape feature integration and visual inputs for improved performance.

Main AI News:

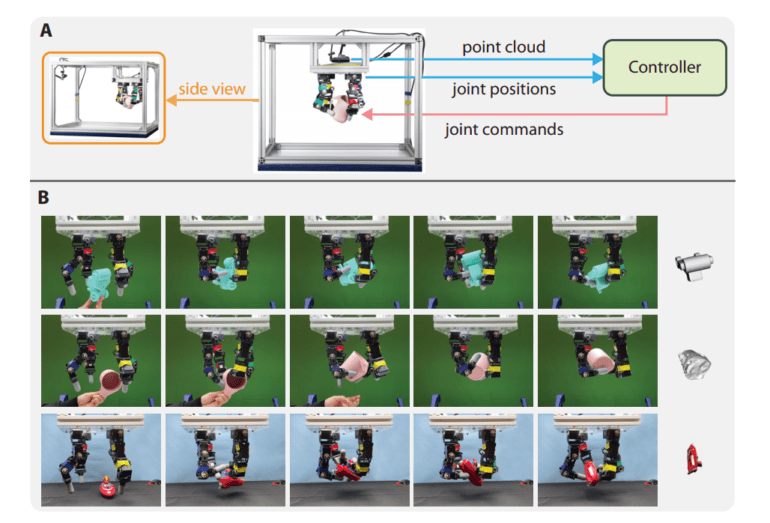

MIT and Meta AI have teamed up to introduce a new solution in robotics. Their latest development, an object reorientation controller, targets real-time in-hand object manipulation. What sets it apart is that it needs only a single depth camera to reorient a wide array of objects in real time.

The driving force behind this advancement is the need for a versatile and efficient object manipulation system, one that can adapt to new conditions without requiring a consistent pose of key points across objects. Beyond object reorientation, the platform also extends to other dexterous manipulation tasks, which opens up prospects for future research and improvement.

Shortcomings in existing object reorientation methods motivated this work. They include a narrow focus on specific objects, limited range, slow manipulation, reliance on costly sensors, and simulation results that often fall short in real-world scenarios. Bridging the gap between simulation and reality is a formidable task, and reported success rates depend on error thresholds that vary from task to task.

To address these limitations, the researchers trained a student vision policy network that shows minimal generalization gaps across datasets. This represents a significant step forward for robotic hand dexterity.

But how does this breakthrough work? The method uses reinforcement learning to train a vision-based object reorientation controller in simulation, then deploys it in the real world with zero-shot transfer, meaning no additional training on real-world data. The training process incorporates a convolutional network with enhanced capacity and a gated recurrent unit, all within a table-top setup in the Isaac Gym physics simulator. The reward function, which combines success criteria with additional shaping terms, plays a pivotal role in the method’s efficacy.
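To make the idea of a reward built from a success criterion plus shaping terms more concrete, here is a minimal sketch in Python. It is not the reward used by the researchers: the function names, the single distance-based shaping term, and every numeric value (`success_threshold`, `success_bonus`, `shaping_scale`) are assumptions chosen purely for illustration.

```python
import math


def quat_angle(q_current, q_goal):
    """Smallest rotation angle (radians) between two unit quaternions (w, x, y, z)."""
    # q and -q encode the same rotation, so compare via the absolute dot product.
    dot = abs(sum(a * b for a, b in zip(q_current, q_goal)))
    dot = min(1.0, dot)  # guard against rounding slightly above 1
    return 2.0 * math.acos(dot)


def reorientation_reward(q_current, q_goal,
                         success_threshold=0.4,  # radians, hypothetical
                         success_bonus=1.0,      # hypothetical
                         shaping_scale=0.1):     # hypothetical
    """Illustrative reward: sparse success bonus plus a dense shaping term."""
    error = quat_angle(q_current, q_goal)
    # Shaping term: small penalty that shrinks as the object nears the goal pose.
    shaping = -shaping_scale * error
    # Success criterion: bonus only when the error is within the threshold.
    bonus = success_bonus if error <= success_threshold else 0.0
    return bonus + shaping


if __name__ == "__main__":
    identity = (1.0, 0.0, 0.0, 0.0)
    half_turn_z = (0.0, 0.0, 0.0, 1.0)  # 180 degrees about the z axis
    print(reorientation_reward(identity, identity))
    print(reorientation_reward(half_turn_z, identity))
```

The dense shaping term gives the learner a signal even when it is far from the goal, while the bonus marks the outcome that actually counts as success.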

The approach is validated through testing on both 3D-printed and real-world objects, with simulation and real-world results compared on error distribution and on success rates within defined thresholds. The results indicate that the system handles the challenges of sim-to-real transfer and leaves room for precision enhancements without additional assumptions.
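A success rate "within a defined threshold" is simply the fraction of trials whose final orientation error falls at or below that threshold. The short Python sketch below shows the computation; the error values and thresholds are made-up placeholders, not measurements from the study.

```python
def success_rate(errors, threshold):
    """Fraction of trials whose final error is at or below the threshold."""
    if not errors:
        raise ValueError("need at least one trial")
    return sum(1 for e in errors if e <= threshold) / len(errors)


def success_by_threshold(errors, thresholds):
    """Map each threshold to its success rate, for comparing sim and real runs."""
    return {t: success_rate(errors, t) for t in thresholds}


if __name__ == "__main__":
    # Placeholder orientation errors in radians (illustrative only).
    sim_errors = [0.1, 0.3, 0.5, 0.9]
    real_errors = [0.2, 0.5, 0.7, 1.1]
    thresholds = [0.4, 0.8]
    print("sim: ", success_by_threshold(sim_errors, thresholds))
    print("real:", success_by_threshold(real_errors, thresholds))
```

Reporting the rate at several thresholds, rather than one, shows how much of a sim-to-real gap comes from near misses versus outright failures.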

In a remarkable feat, a single controller trained in simulation was able to reorient 150 objects when deployed in the real world, running in real time at 12 Hz on a standard workstation. The accuracy of object reorientation and its adaptability to new object shapes were validated using an OptiTrack motion capture system.
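Running at 12 Hz leaves the controller roughly 83 milliseconds per step to read the camera, evaluate the policy, and send a command. The following Python sketch shows one generic way to hold a fixed control rate and count missed deadlines. It is an illustration only: `run_fixed_rate` and `step_fn` are hypothetical names, and nothing here reflects how the researchers’ software is actually structured.

```python
import time


def run_fixed_rate(step_fn, rate_hz=12.0, num_steps=12,
                   clock=time.monotonic, sleep=time.sleep):
    """Call step_fn at a fixed rate; return how many steps missed their deadline."""
    period = 1.0 / rate_hz  # about 0.083 s at 12 Hz
    overruns = 0
    next_deadline = clock() + period
    for _ in range(num_steps):
        step_fn()  # hypothetical: read depth image, run policy, send command
        remaining = next_deadline - clock()
        if remaining > 0:
            sleep(remaining)  # wait out the rest of the period
        else:
            overruns += 1  # the step took longer than one period
        next_deadline += period
    return overruns


if __name__ == "__main__":
    missed = run_fixed_rate(lambda: None, rate_hz=12.0, num_steps=12)
    print("missed deadlines:", missed)
```

The clock and sleep functions are passed in as parameters so the loop can be exercised with a simulated clock instead of waiting in real time.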

In conclusion, this study introduces a real-time controller, trained with reinforcement learning, that reorients objects in the real world. The system’s median reorientation time is around seven seconds. The results also raise questions about the role of shape information in reorientation tasks and about the difficulty of transferring simulation results to the real world. Nevertheless, the controller holds promise for in-hand dexterous manipulation, particularly in less structured environments, though its precision still needs to improve.

Looking ahead, several research avenues remain open. Integrating shape features could improve controller performance, especially for precise manipulation and adaptation to new shapes. Incorporating visual inputs during training could address a limitation of current reinforcement learning controllers, which rely heavily on full-state information available only in simulation. Comparative studies with prior work and dexterous manipulation on open-source hardware are further areas worth investigating.

Conclusion:

This innovative object reorientation controller represents a significant advancement in the field of robotics, offering versatile and efficient solutions to real-world challenges. Its potential applications in dexterous manipulation and adaptability to various object shapes make it a game-changer in less structured environments. This development underscores the growing market demand for precision-enhancing robotics solutions, particularly in industries where object manipulation is crucial.