TL;DR:

- CoDi-2 is a revolutionary Multimodal Large Language Model (MLLM).

- Developed by UC Berkeley, Microsoft Azure AI, Zoom, and UNC-Chapel Hill.

- Excels in complex multimodal instruction processing, image generation, vision transformation, and audio editing.

- Outperforms its predecessor, CoDi, with encoders and decoders for audio and vision inputs.

- Utilizes pixel loss and token loss in training, showcasing remarkable zero-shot capabilities.

- Focuses on modality-interleaved instruction following and multi-round multimodal chat.

- Adapts to different styles and generates content based on various subject matters.

- Significant advancements in in-context learning, audio manipulation, and fine-grained control.

Main AI News:

In a collaborative effort between UC Berkeley, Microsoft Azure AI, Zoom, and UNC-Chapel Hill, researchers have unveiled the CoDi-2 Multimodal Large Language Model (MLLM). This groundbreaking innovation addresses the complex challenge of generating and comprehending intricate multimodal instructions while excelling in subject-driven image generation, vision transformation, and audio editing tasks. CoDi-2 represents a pivotal advancement in establishing a comprehensive multimodal foundation within the AI research landscape.

Building upon the achievements of its predecessor, CoDi, CoDi-2 surpasses expectations in tasks such as subject-driven image generation and audio editing. Its architectural prowess encompasses specialized encoders and decoders for audio and vision inputs. The training process incorporates pixel loss from diffusion models, seamlessly melding it with token loss. CoDi-2 shines with remarkable zero-shot and few-shot capabilities, particularly in areas like style adaptation and subject-driven generation.

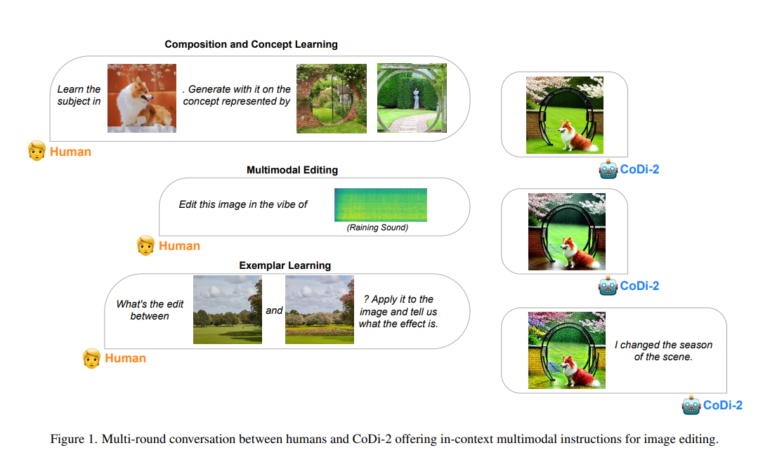

CoDi-2 tackles the intricate realm of multimodal generation, with a focus on zero-shot fine-grained control, modality-interleaved instruction following, and multi-round multimodal chat. Powered by a Large Language Model (LLM) as its cognitive engine, CoDi-2 aligns different modalities with the language during encoding and generation. This innovative approach equips the model to decipher complex instructions and generate coherent multimodal outputs with finesse.

The CoDi-2 architecture seamlessly integrates encoders and decoders for audio and vision inputs within a multimodal large language model framework. Trained on a diverse generation dataset, CoDi-2 harnesses the power of pixel loss from diffusion models in tandem with token loss during the training phase. Exhibiting superior zero-shot capabilities, it outperforms its predecessors in subject-driven image generation, vision transformation, and audio editing, demonstrating competitive performance and remarkable generalization across novel, uncharted tasks.

CoDi-2 flaunts an extensive repertoire of zero-shot capabilities in multimodal generation, excelling in in-context learning, reasoning, and any-to-any modality generation through multi-round interactive conversations. Evaluation results underscore its highly competitive zero-shot performance and robust adaptability to new, unforeseen tasks. CoDi-2 stands out in audio manipulation tasks, achieving unparalleled excellence in adding, dropping, and replacing elements within audio tracks, as evidenced by its consistently lowest scores across all metrics. It underscores the significance of in-context learning, concept adaptation, editing proficiency, and fine-grained control in advancing high-fidelity multimodal generation.

In summation, CoDi-2 emerges as a state-of-the-art AI system, displaying exceptional proficiency across diverse tasks, ranging from intricate instruction following to contextual learning, reasoning, interactive chat, and content editing across various input-output modes. Its remarkable ability to adapt to diverse styles and generate content across a spectrum of subject matters, coupled with its audio manipulation prowess, marks it as a major milestone in multimodal foundation modeling. CoDi-2 signifies an impressive endeavor to create a comprehensive system capable of handling an array of tasks, even those for which it has not been explicitly trained.

Looking ahead, the future of CoDi-2 is poised to enhance its multimodal generation capabilities further. Plans include refining in-context learning, expanding conversational aptitudes, and accommodating additional modalities. The pursuit of improved image and audio fidelity through techniques like diffusion models remains a central focus. Future research endeavors may also involve thorough evaluations and comparisons with other models to discern the strengths and limitations of CoDi-2. Stay tuned for the exciting developments in the realm of AI and multimodal capabilities brought forth by CoDi-2.

Conclusion:

CoDi-2 represents a game-changing development in the field of multimodal AI. Its ability to excel in various complex tasks and adapt to diverse styles and subjects holds great promise for the market. This innovation can revolutionize industries such as content generation, image processing, and audio editing, making it a valuable asset for businesses seeking high-fidelity multimodal solutions.