TL;DR:

- AMD has introduced the Instinct MI300X accelerator and Instinct MI300A APU for large language models (LLMs).

- AMD says MI300X rivals Nvidia’s H100 in LLM training and outperforms it in inference.

- Strategic partnership with Microsoft for Azure integration and collaboration with Meta for data centers.

- MI300A APU for data centers offers higher performance and, according to AMD, a 30x energy efficiency improvement.

- AMD’s Ryzen 8040 series brings AI capabilities to mobile devices.

- Ryzen 8040 promises faster video editing and gaming, challenging Intel’s offerings.

- AMD expects major manufacturers to incorporate Ryzen 8040 chips in early 2024.

- Next-generation Strix Point NPUs are to be released in 2024.

- The Ryzen AI Software Platform enables developers to offload models to NPUs, reducing CPU power consumption.

Main AI News:

In the competitive arena of AI chip development, AMD has taken a significant stride to challenge Nvidia’s dominance. AMD’s latest offerings, the Instinct MI300X accelerator and the Instinct MI300A accelerated processing unit (APU), are designed to meet the demands of training and running large language models (LLMs).

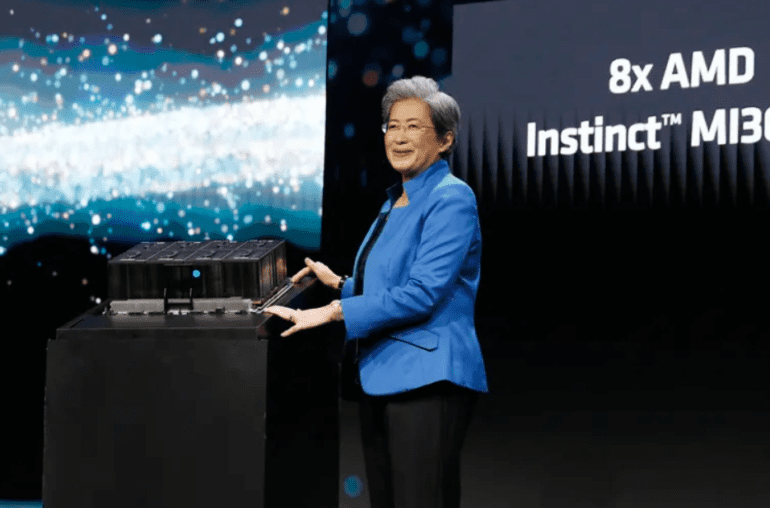

These cutting-edge products boast enhanced memory capacity and improved energy efficiency compared to their predecessors, according to AMD. Lisa Su, the CEO of AMD, emphasized the vital role of GPUs in driving AI adoption, recognizing the growing complexity and size of LLMs.

During a presentation, Su declared the MI300X the world’s highest-performing accelerator. She asserted that MI300X not only rivals Nvidia’s H100 chips in training LLMs but also outperforms them in inference, delivering 1.4 times the performance when running Llama 2, Meta’s 70 billion parameter LLM.

In a strategic partnership with Microsoft, AMD has integrated MI300X into Azure virtual machines, expanding its reach into the cloud computing realm. Furthermore, Meta has announced its intention to deploy MI300 processors in its data centers, underlining the growing demand for AMD’s AI prowess.

AMD has also introduced the MI300A APU tailored for data centers, a move it expects to expand its total addressable market to $45 billion. This APU combines CPUs and GPUs, offering higher-performance computing and faster model training. AMD also claims a 30 times improvement in energy efficiency compared to its competitors, including the H100. The APU uses unified memory, eliminating the need to transfer data between different devices.

The MI300A APU is poised to power the formidable El Capitan supercomputer, developed by Hewlett Packard Enterprise at the Lawrence Livermore National Laboratory. This supercomputer is projected to deliver over two exaflops of performance, cementing AMD’s role in shaping the future of high-performance computing.

Although pricing details remain undisclosed, Su’s excitement during the Code Conference hinted at AMD’s aspiration to tap into a broader user base, extending beyond cloud providers to encompass enterprises and startups.

In addition to its strides in the data center realm, AMD announced the Ryzen 8040 processor series, designed to integrate native AI functions into mobile devices. The series incorporates neural processing units (NPUs) and delivers 1.6 times more AI processing performance than its predecessors. AMD claims that the Ryzen 8040 will not only excel in AI processing but also speed up video editing by 65% and gaming by 77% relative to competing products such as Intel’s chips.

Consumers can expect products from manufacturers like Acer, Asus, Dell, HP, Lenovo, and Razer to incorporate the Ryzen 8040 chips, with availability slated for the first quarter of 2024. Su also hinted at the release of the next generation of Strix Point NPUs in 2024.

AMD’s commitment to the AI ecosystem extends to software as well, with the Ryzen AI Software Platform now widely available. The platform lets developers offload AI models to the NPU, reducing CPU power consumption. Users will also gain access to foundation models such as the speech recognition model Whisper and large language models such as Llama 2.
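To give a rough sense of what offloading a model to the NPU can look like in code, here is a minimal Python sketch. It assumes an ONNX Runtime-based workflow in which the NPU is exposed as the `VitisAIExecutionProvider`; the article itself does not describe the API, so treat the flow as an assumption. The helper names `choose_providers` and `open_session` are hypothetical and exist only for illustration.

```python
from typing import List

# Execution provider names as registered in ONNX Runtime. The NPU provider is
# only present in builds that ship the Vitis AI execution provider (assumption:
# this is how the NPU is exposed on a given machine).
NPU_PROVIDER = "VitisAIExecutionProvider"
CPU_PROVIDER = "CPUExecutionProvider"


def choose_providers(available: List[str]) -> List[str]:
    """Prefer the NPU provider when present; always keep the CPU as fallback."""
    chosen = [NPU_PROVIDER] if NPU_PROVIDER in available else []
    chosen.append(CPU_PROVIDER)
    return chosen


def open_session(model_path: str):
    """Hypothetical helper: load an ONNX model with NPU-first provider order."""
    # Imported lazily so the provider selection above works without onnxruntime.
    import onnxruntime as ort

    providers = choose_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)
```

Listing the CPU provider last means the same code still runs on machines without an NPU, only without the power savings the platform is meant to deliver.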

In a relentless AI chip arms race among industry giants like AMD, Nvidia, and Intel, AMD’s latest innovations mark a significant milestone in the quest to harness the potential of artificial intelligence. While Nvidia has held a significant market share with its H100 GPUs for training models like OpenAI’s GPT, AMD’s advancements signal a compelling challenge to the status quo.

Conclusion:

AMD’s latest AI chip innovations, along with strategic partnerships and expanded product offerings, position the company as a formidable challenger to Nvidia’s dominance in the AI chip market. With advancements in data center and mobile computing, AMD is poised to capture a significant share of the growing AI market, appealing to a broad range of customers, from enterprises to startups.