TL;DR:

- Stanford University unveils an AI framework for enhancing the interpretability and generative capabilities of visual models.

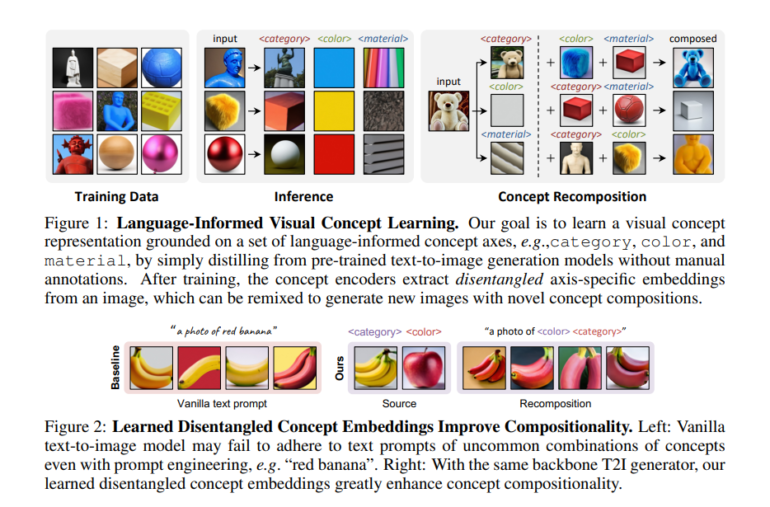

- The framework integrates language-informed concept representation and concept encoders aligned with language-specified concept axes.

- It extracts concept embeddings from test images, generating novel visual compositions, and excels in disentanglement and realism.

- The study recommends using larger datasets, exploring pre-trained models, and expanding concept axes for future development.

Main AI News:

Researchers at Stanford University have unveiled a groundbreaking Artificial Intelligence (AI) framework designed to elevate the interpretability and generative capabilities of existing models, particularly for a wide range of visual concepts. In today’s rapidly evolving landscape of AI and computer vision, the demand for enhanced interpretability and creative potential within models is more pressing than ever.

This pioneering framework, developed by Stanford University researchers, is centered around the notion of learning a language-informed visual concept representation. It involves the training of concept encoders that specialize in encoding information in alignment with language-informed concept axes. These axes are firmly anchored to text embeddings derived from a pre-trained Visual Question Answering (VQA) model, thus establishing a robust language-to-vision connection.

The core functionality of this framework hinges on the concept encoders, which are meticulously trained to encode information in harmony with language-informed concept axes. Through this process, the model is empowered to extract concept embeddings from entirely new test images. Subsequently, it has the remarkable capability to generate images featuring novel compositions of visual concepts, all while demonstrating adaptability to previously unseen concepts.

A distinguishing characteristic of this approach lies in its fusion of visual prompts and text queries, which together enable the extraction of graphical images. This method underscores the paramount importance of grounding vision in language, particularly in the realm of text-to-image generation models.

Ultimately, the overarching goal of this research endeavor is to develop systems with the ability to recognize visual concepts in a manner akin to human perception. The framework introduces a paradigm where concept encoders align precisely with language-specified concept axes. These highly specialized encoders excel at extracting concept embeddings from images, thereby facilitating the generation of images featuring innovative concept compositions.

Within the framework’s structure, concept encoders undergo rigorous training to encode visual information along language-informed concept axes. During the inference stage, the model seamlessly extracts concept embeddings from freshly encountered images. This seamless process empowers the model to generate ideas characterized by novel concept compositions. Comparative evaluations substantiate its prowess, showcasing superior results in concept recomposition compared to alternative methods.

Notably, this innovative language-based visual concept learning framework surpasses conventional text-based approaches. Its efficacy is underscored by its ability to effectively extract concept embeddings from test images, generate fresh compositions of visual concepts, and exhibit superior disentanglement and compositionality. Comparative analyses further highlight its proficiency in capturing color variations, while human evaluations consistently yield high scores for realism and fidelity to editing instructions.

In summation, this study presents a formidable framework for acquiring language-informed visual concepts through the distillation of knowledge from pre-trained models. Its impact is notably observed in the realm of visual concept editing, where it excels in achieving greater disentanglement of concept encoders and the generation of images featuring novel compositions of visual ideas. The study underscores the efficiency of employing visual prompts and text queries to exert precise control over image generation, resulting in heightened realism and adherence to editing directives.

To further enhance this groundbreaking framework, the study recommends the utilization of larger and more diverse training datasets. Moreover, it encourages exploration into the integration of various pre-trained vision-language models and the inclusion of additional concept axes to bolster flexibility. Rigorous evaluation across diverse visual concept editing tasks and datasets is also advocated. The study identifies the potential for bias mitigation in natural images and envisions promising applications in image synthesis, style transfer, and visual storytelling. As the AI landscape continues to evolve, this framework stands as a testament to the boundless possibilities that lie at the intersection of language and visual concepts.

Conclusion:

Stanford’s AI framework signifies a significant leap in the market’s potential for advanced visual concept understanding and creative applications. It opens doors for industries ranging from content generation to design, offering the promise of more human-like visual AI solutions. Businesses that embrace this technology can gain a competitive edge by harnessing its superior generative capabilities and enhanced interpretability.