TL;DR:

- Researchers from the University of Wisconsin–Madison and Amazon Web Services collaborate to improve Large Language Models’ (LLMs) bug detection during code generation.

- Automatic program repair leverages Code-LLMs to alleviate the burden of identifying and fixing programming bugs.

- Benchmark datasets like buggy-HumanEval and buggy-FixEval are introduced to evaluate Code-LLMs in the presence of synthetic and realistic bugs.

- Proposed mitigation methods include Removal-then-completion, Completion-then-rewriting, and Rewriting-then-completion, with a focus on enhancing code completion with potential bugs.

- Code-LLMs like RealiT and INCODER-6B play a significant role in code fixing and improving mitigation strategies.

- The presence of potential bugs leads to a more than 50% reduction in passing rates for Code-LLMs.

- The Heuristic Oracle highlights the importance of bug localization in performance evaluation.

- Likelihood-based methods show diverse performance on different bug datasets, indicating bug nature influences aggregation method choice.

- Post-mitigation methods offer performance improvements, but a gap remains, calling for further research in enhancing code completion with potential bugs.

Main AI News:

Programming complexity often leads to errors in code, but advancements in large language models (LLMs) have aimed to mitigate these issues. However, even the most sophisticated LLMs sometimes miss bugs in the code context. To address this challenge, a collaborative study by researchers from the University of Wisconsin–Madison and Amazon Web Services delves into improving LLMs’ ability to detect potential bugs during code generation.

Automatic program repair using Code-LLMs seeks to ease the burden of identifying and rectifying programming bugs. Just as adversarial examples can confound models in various domains, subtle code transformations can hinder code-learning models. Established benchmarks like CodeXGLUE, CodeNet, and HumanEval have played a pivotal role in advancing code completion and program repair. To bolster data availability, methods for generating artificial bugs through code mutants or learning to introduce bugs have been developed.

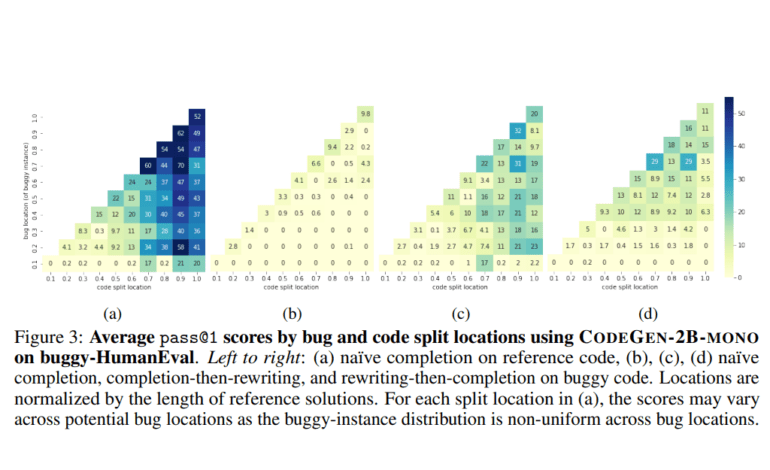

While Transformer-based language models for code have made significant strides in code completion, they often fall short in identifying bugs—a common occurrence in software development. This research introduces the concept of “buggy-code completion” (bCC), wherein potential bugs exist in the code context, probing Code-LLMs’ behavior in such scenarios. To evaluate Code-LLMs in the presence of synthetic and realistic bugs, benchmark datasets such as buggy-HumanEval and buggy-FixEval have been introduced, revealing substantial performance degradation. The study also explores post-mitigation methods to address this issue.

Proposed mitigation strategies encompass three approaches: Removal-then-completion, which eliminates buggy code fragments; Completion-then-rewriting, involving bug fixes post-completion using models like RealiT; and Rewriting-then-completion, which resolves bugs by rewriting code lines before completion. Performance, measured by pass rates, favors Completion-then-rewriting and Rewriting-then-completion. Code-LLMs like RealiT and INCODER-6B serve as code fixers, enhancing the effectiveness of these methods.

The presence of potential bugs significantly impacts the generation performance of Code-LLMs, leading to a more than 50% reduction in passing rates for a single bug. With bug localization knowledge, the Heuristic Oracle highlights a noticeable performance gap between buggy-HumanEval and buggy-FixEval, underscoring the importance of bug localization. Likelihood-based methods exhibit varying performance on the two datasets, suggesting that the nature of bugs influences the choice of aggregation methods. Post-mitigation techniques, including removal-then-completion and rewriting-then-completion, offer performance improvements. Nonetheless, a gap remains, emphasizing the necessity for further research in enhancing code completion in the presence of potential bugs.

Conclusion:

This research underscores the critical need for robust bug detection and mitigation strategies in the field of large language models. As software development continues to advance, businesses must invest in solutions that enhance code completion while addressing potential bugs. Improved code-LLMs and bug localization methods will play a pivotal role in maintaining code quality and reducing errors, making them invaluable assets in the evolving market of software development and automation.