TL;DR:

- The advent of Large Language Models (LLMs) like GPT has transformed natural language processing.

- LLMs face challenges due to static knowledge bases, leading to outdated information and inaccuracies.

- Traditional methods involve fine-tuning LLMs with domain-specific data but have limitations.

- Researchers have introduced Retrieval-Augmented Generation (RAG) as an innovative solution.

- RAG merges parameterized knowledge with dynamically accessible external data sources.

- RAG significantly reduces model hallucinations and enhances response reliability.

- It incorporates current and domain-specific information into LLM-generated responses.

- RAG’s retrieval system scans external databases for relevant, accurate data.

- This dynamic methodology bridges the gap between static knowledge and real-time information.

Main AI News:

In the realm of natural language processing, the advent of Large Language Models (LLMs) such as GPT has ushered in a revolution. These remarkable models exhibit unparalleled language comprehension and generation capabilities, but they grapple with certain challenges. Their static knowledge base often poses hurdles, resulting in outdated information and response inaccuracies, particularly in situations requiring domain-specific insights. This gap necessitates innovative strategies to surmount the limitations of LLMs, ensuring their practical utility and dependability across diverse, knowledge-intensive tasks.

Traditionally, addressing these challenges involved fine-tuning LLMs with domain-specific data. While this approach can yield significant improvements, it comes with its own set of drawbacks. It demands substantial resource investments and specialized expertise, constraining its adaptability in the face of the ever-evolving information landscape. Moreover, this approach lacks the capability to dynamically update the model’s knowledge base, a crucial requirement for handling rapidly changing or highly specialized content. These limitations underscore the imperative need for a more flexible and dynamic methodology to enhance LLMs.

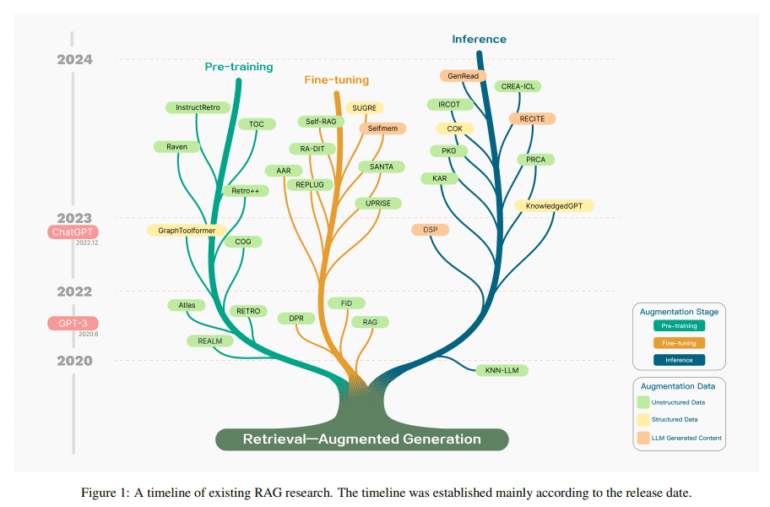

In response to this need, researchers from prestigious institutions such as Tongji University, Fudan University, and Tongji University have introduced a survey on Retrieval-Augmented Generation (RAG). This innovative approach ingeniously merges the model’s parameterized knowledge with dynamically accessible, non-parameterized external data sources. RAG’s workflow begins with the identification and extraction of relevant information from external databases in response to a query. This extracted data serves as the cornerstone for the LLM to generate its responses. This process significantly enriches the model’s responses with current and domain-specific information, effectively reducing the occurrence of hallucinations, a common issue in LLM-generated responses.

Delving deeper into the mechanics of RAG, the process commences with a sophisticated retrieval system that meticulously scours extensive external databases to pinpoint information germane to the query. This system is finely tuned to ensure the relevance and accuracy of the sourced information. Once the relevant data is identified, it seamlessly integrates into the LLM’s response generation process. Consequently, the LLM, armed with this freshly sourced information, becomes better equipped to generate responses that are not only accurate but also up-to-date, addressing the inherent limitations of purely parameterized models.

The impact of RAG-augmented LLMs has been nothing short of remarkable. Notably, a significant reduction in model hallucinations has been observed, thereby enhancing the reliability of responses. Users can now receive answers that not only draw upon the model’s extensive training data but also incorporate the latest information from external sources. This unique feature of RAG, which allows for citing the sources of retrieved information, adds an extra layer of transparency and trustworthiness to the model’s outputs. RAG’s proficiency in dynamically incorporating domain-specific knowledge renders these models versatile and adaptable to a wide range of applications.

Conclusion:

Retrieval-Augmented Generation (RAG) represents a groundbreaking advancement in natural language processing, mitigating the limitations of static knowledge in Large Language Models (LLMs). This innovation enhances the reliability and accuracy of LLM-generated responses, making them more adaptable and versatile. For the market, RAG holds the potential to revolutionize language understanding and generation applications, opening up new possibilities for industries reliant on advanced natural language processing.