TL;DR:

- Synclr introduces a groundbreaking approach to learning visual representations exclusively from synthetic images and captions.

- Current AI models depend on large real-world datasets, which pose challenges in terms of scalability and curation.

- Synclr explores the potential of synthetic data generated by generative models, leveraging their latent variables and hyperparameters.

- This approach offers compactness and the ability to generate endless data samples.

- Synclr redefines granularity in visual classes by utilizing text-to-image diffusion models to align images with specific captions.

- Comparative analysis reveals superior performance in various tasks, including linear probing accuracy and semantic segmentation.

Main AI News:

In the realm of artificial intelligence, the latest breakthroughs are often driven by the data upon which the models are trained. In a recent collaboration between Google and MIT CSAIL, a pioneering approach called Synclr has been introduced. This novel AI methodology focuses exclusively on learning visual representations from synthetic images and captions, completely sidestepping the need for real-world data.

The efficacy of any model’s representation hinges on the quantity, quality, and diversity of the data it is exposed to. This is where Synclr makes its mark, tapping into the vast potential of synthetic data. The premise is simple: the output is directly proportional to the input. The catch, however, is that modern visual representation learning algorithms predominantly rely on colossal real-world datasets. While collecting massive amounts of unfiltered data is indeed feasible, its uncurated nature poses challenges in terms of scaling and utility.

To alleviate the financial burden associated with acquiring real-world data, collaborative research explores the possibility of leveraging synthetic data generated by commercially available generative models. This approach, distinct from traditional data-driven learning, harnesses the latent variables, conditioning variables, and hyperparameters of these models to curate large-scale training sets.

One compelling advantage of using models as data sources is their compactness, making them easier to store and share compared to unwieldy datasets. Additionally, models possess the unique ability to generate an endless stream of data samples, albeit with limited variability.

The research shifts the paradigm of visual representation learning by employing generative models to redefine the granularity of visual classes. Traditional self-supervised methods tend to treat each image independently, disregarding their semantic commonalities. In contrast, supervised learning algorithms can categorize them more efficiently.

While collecting numerous images that align with a specific caption is a challenge in real data, text-to-image diffusion models excel in this regard. They can generate multiple images that precisely match a given caption, providing a level of granularity that is inherently challenging to mine from real data.

The study’s findings reveal that Synclr outperforms SimCLR and supervised training in terms of caption-level granularity. Notably, it offers the advantage of extensibility through online class or data augmentation, enabling scalability to unlimited classes, unlike conventional datasets like ImageNet-1k/21k with fixed class counts.

The proposed Synclr system comprises three stages:

- Synthesizing a substantial collection of picture captions by leveraging large language models.

- Generating synthetic images and captions using a text-to-image diffusion model, resulting in a dataset of 600 million photos.

- Training models for visual representations through masked image modeling and multi-positive contrastive learning.

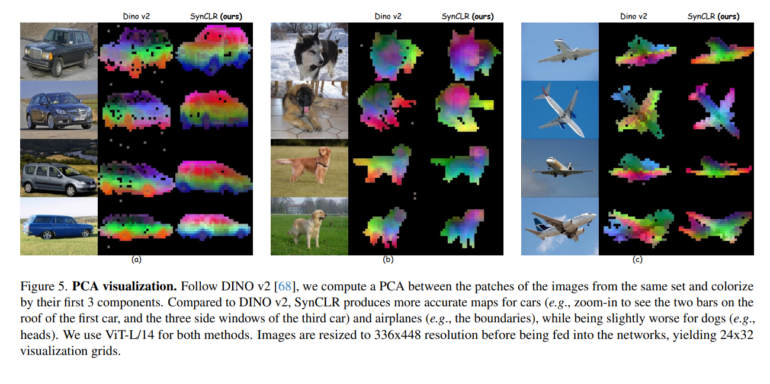

Comparative analysis showcases Synclr’s prowess, with remarkable results in various domains, from linear probing accuracy on ImageNet-1K to fine-grained classification tasks and semantic segmentation on ADE20k.

Conclusion:

Synclr’s innovative approach to visual representation learning offers a promising alternative to the traditional reliance on large real-world datasets. Harnessing the power of synthetic data generated from generative models not only reduces costs but also provides opportunities for improved granularity in visual class descriptions. This shift in methodology has the potential to reshape the AI market by making advanced visual representation learning more accessible and efficient for a wide range of applications.