TL;DR:

- Generative AI is undergoing a transformation, focusing on human-like responses, innovation, and problem-solving.

- Innovations like the Gemini model and OpenAI’s Q* project emphasize MoE, multimodal learning, and the path to AGI.

- The central challenge is creating models that mimic human cognitive abilities and align with ethical standards.

- Researchers highlight advancements in key model architectures, including Transformer Models and MoE models.

- MoE models are efficient, task-specific, and essential for handling diverse data types.

- The Gemini model showcases state-of-the-art performance in multimodal tasks.

- These advancements redefine AI research and applications, promising a future of innovation and ethical AI development.

Main AI News:

Generative Artificial Intelligence is on the brink of a monumental transformation. With a focus on creating AI systems that emulate human-like responses, innovation, and problem-solving, this field is at the forefront of technological advancement. Innovations like the Gemini model and OpenAI’s Q* project have redefined the landscape by emphasizing the integration of Mixture of Experts (MoE), multimodal learning, and the journey towards Artificial General Intelligence (AGI). This evolution signifies a profound departure from traditional AI techniques, ushering in more integrated and dynamic systems.

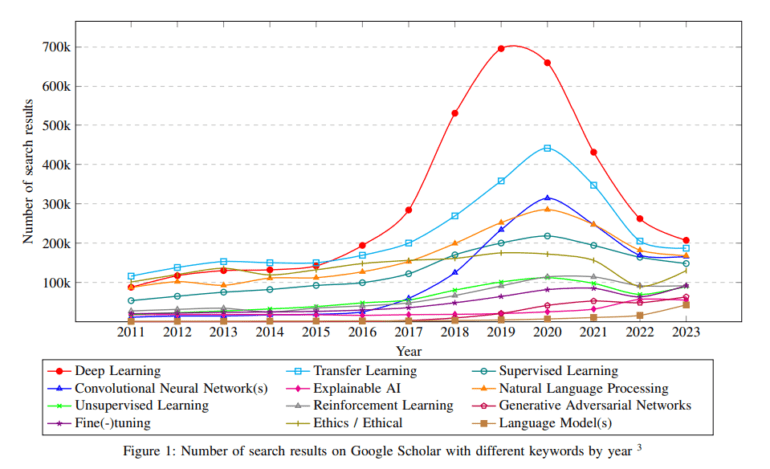

At the core of generative AI lies the challenge of developing models that can effectively replicate intricate human cognitive abilities while handling a wide array of data types, including text, images, and sound. Balancing these technological advancements with ethical standards and societal norms adds another layer of complexity. As the complexity and volume of AI research continue to grow, there is a pressing need for efficient methods to synthesize and evaluate the expanding knowledge landscape.

A collaborative effort by researchers from Academies Australasia Polytechnic, Massey University (Auckland), Cyberstronomy Pty Ltd, and RMIT University has yielded comprehensive insights into advancements in key model architectures. These include Transformer Models, Recurrent Neural Networks, MoE models, and Multimodal Models. The study also delves into the challenges posed by AI-themed preprints, assessing their impact on peer-review processes and scholarly communication. With a strong emphasis on ethical considerations, the study outlines a strategic approach to future AI research. This approach advocates for a balanced and conscientious integration of MoE, multimodality, and Artificial General Intelligence within the realm of generative AI.

Transformer models have long held a central role in AI architectures, but they are now being complemented, and at times replaced, by more dynamic and specialized systems. Recurrent Neural Networks have proven effective for sequence processing, yet they are gradually being overshadowed by newer models because of their inefficiency and their difficulty handling long-range dependencies. To address these evolving needs, researchers are increasingly turning to MoE models and multimodal learning methodologies. MoE models play a pivotal role in handling diverse data types, particularly in multimodal contexts, where text, images, and audio are integrated for specialized tasks. This trend is driving substantial investment in research involving complex data processing and the development of autonomous systems.

The importance of the intricate and nuanced methodology behind MoE models and multimodal learning cannot be overstated. These models are celebrated for their efficiency and task-specific performance, harnessing the power of multiple expert modules. Their contribution to understanding and leveraging complex structures, often found in unstructured datasets, is invaluable. Notably, MoE models have a profound impact on AI’s creative capabilities, enabling technology to actively engage in and contribute to creative endeavors. This redefines the intersection of technology and art.
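To make the idea of "multiple expert modules" concrete, here is a minimal sketch of sparse MoE routing in plain Python. It is an illustration under stated assumptions, not the method used by Gemini or described in the study: the names `moe_forward`, `gate_weights`, and `top_k` are hypothetical, the gate is assumed to be a simple linear scorer, and the experts are toy functions standing in for trained sub-networks.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical type: an expert maps an input vector to an output vector.
Expert = Callable[[Sequence[float]], List[float]]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Sequence[float],
    experts: Sequence[Expert],
    gate_weights: Sequence[Sequence[float]],
    top_k: int = 2,
) -> Tuple[List[float], List[int]]:
    """Route x to the top_k highest-scoring experts and mix their outputs.

    gate_weights holds one row per expert (an assumed linear gate);
    the score of expert i is the dot product of row i with x.
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalize the gate scores over the chosen experts only.
    weights = softmax([logits[i] for i in chosen])
    outputs = [experts[i](x) for i in chosen]
    mixed = [
        sum(w * out[j] for w, out in zip(weights, outputs))
        for j in range(len(outputs[0]))
    ]
    return mixed, chosen


if __name__ == "__main__":
    # Toy experts standing in for trained sub-networks.
    toy_experts = [
        lambda v: [2.0 * a for a in v],   # expert 0: doubles the input
        lambda v: [-a for a in v],        # expert 1: negates the input
        lambda v: [a + 1.0 for a in v],   # expert 2: adds one
    ]
    toy_gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
    result, used = moe_forward([1.0, 2.0], toy_experts, toy_gate, top_k=2)
    print("experts used:", used)
    print("mixed output:", result)
```

The efficiency claim follows from the routing step: only `top_k` experts run for a given input, so compute per input stays roughly constant even as more experts are added. Production systems typically learn the gate jointly with the experts and add load-balancing terms, which this sketch omits.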

The Gemini model stands as a testament to the state-of-the-art performance achievable in various multimodal tasks, including natural image and audio comprehension, video understanding, and mathematical reasoning. These remarkable advancements signal a future where AI systems will significantly expand their logic, contextual knowledge, and creative problem-solving abilities. As a result, they will reshape the landscape of AI research and applications, paving the way for an exciting era of innovation and ethical AI development.

Conclusion:

The evolution of generative AI, with its emphasis on integrated systems, ethical considerations, and advanced models like MoE and the Gemini model, signifies a transformative period in the AI market. As AI systems become more capable of emulating human-like responses and problem-solving, they are poised to revolutionize various industries, offering innovative solutions while adhering to ethical standards. This evolution presents significant opportunities for businesses to leverage these advancements and stay at the forefront of technological innovation.