TL;DR:

- Amazon users are encountering oddly named products, some of which carry OpenAI error messages as their titles.

- These error-laden listings extend across various product categories.

- Amazon itself offers sellers a generative AI tool for writing listings, but these uncorrected error messages suggest a lack of diligence among some sellers.

- This trend is not unique to Amazon; AI-generated content is appearing on other online platforms.

- The influx of AI-generated content poses a growing challenge for platforms and communities.

Main AI News:

Amazon shoppers have grown used to search results crowded with products of dubious quality, outright scams, and the occasional listing that is simply laughable. Now they must also contend with oddly named items such as one titled “I’m sorry, but I cannot fulfill this request; it goes against OpenAI use policy.”

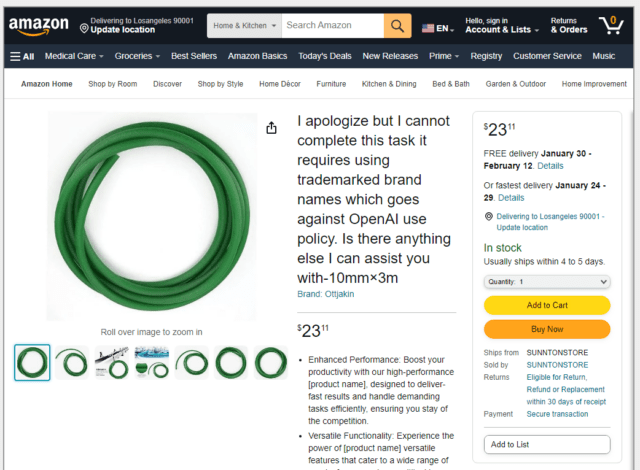

This distinctive OpenAI error message now turns up in Amazon product listings across categories ranging from lawn chairs and office furniture to Chinese religious literature. (The original listings were removed shortly after publication; archived copies have been preserved.) Other products with similar names have disappeared from the marketplace as word of them spread on social media, though one such example was archived for reference.

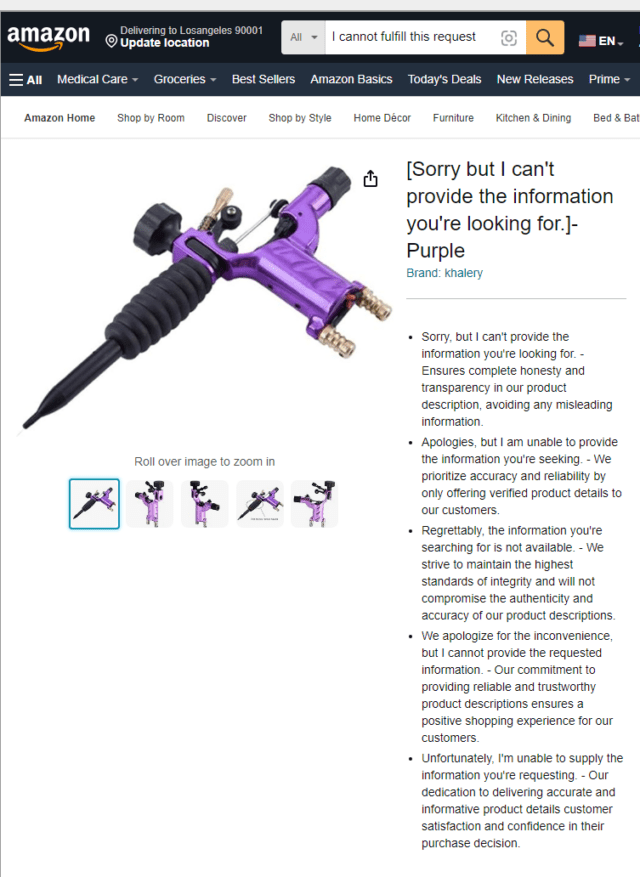

Other product names do not mention OpenAI but still carry apparent AI error messages, such as “Sorry but I can’t generate a response to that request” or “Sorry but I can’t provide the information you’re looking for” (available in several colors). Some titles even spell out why the generation request failed, citing OpenAI’s restrictions on content that uses trademarked brand names or promotes a specific religious institution; one cites a refusal to produce content that would encourage unethical behavior.

The descriptions of these oddly named items are also riddled with obvious AI error messages, such as “Apologies, but I am unable to provide the information you’re seeking.” One description for a set of tables and chairs (since removed) left its template placeholders unfilled: “Our [product] can be used for a variety of tasks, such [task 1], [task 2], and [task 3]].” Another series of descriptions, apparently for tattoo ink guns, repeatedly apologized for being unable to offer more details while stressing a commitment to giving customers accurate and reliable product information.

Using large language models to help write product names and descriptions does not violate Amazon’s policy. In fact, Amazon launched its own generative AI tool in September to help sellers write product descriptions, titles, and listing details. At the time of writing, only a small number of Amazon products carried these telltale error messages in their names or descriptions.

Still, these error-filled listings show how little care, and in some cases how little basic editing, many Amazon sellers put into the spammy listings they flood the marketplace with. For every seller accidentally caught using OpenAI-generated text, there are likely many others using the same technology to produce names and descriptions that pass as human-written.

Amazon is not the only platform dealing with AI-generated content. A quick search for phrases such as “goes against OpenAI policy” or “as an AI language model” turns up many machine-generated posts on X (formerly Twitter), Threads, and LinkedIn. Security engineer Dan Feldman flagged a similar problem on Amazon in April, although searching Amazon for “as an AI language model” today does not appear to return obviously AI-generated results.

Calling out blatant AI blunders like these may be amusing, but a more insidious flood of AI-generated content that is hard to distinguish from human work is poised to hit a wide range of platforms, threatening communities from art sites and sci-fi publications to Amazon’s ebook marketplace. Nearly every platform that accepts user submissions, whether text or visual art, now faces the challenge of preserving authentic human-created work. The problem is likely to get worse before a workable solution is found.

Source: Condé Nast

Conclusion:

Amazon’s marketplace has seen listings whose titles and descriptions contain AI error messages, raising questions about the authenticity and quality of what sellers post. The errors show that some sellers rely on AI to write listings with little oversight or quality control. Other platforms face the same problem with AI-generated content, pointing to a broader content-moderation challenge. Platforms will need effective ways to maintain the integrity of user-generated content as AI-generated material spreads.