TL;DR:

- Large Language Models (LLMs) raise privacy concerns due to their training on web data.

- Unlearning aims to make LLMs more privacy-conscious by selectively ‘forgetting’ sensitive information.

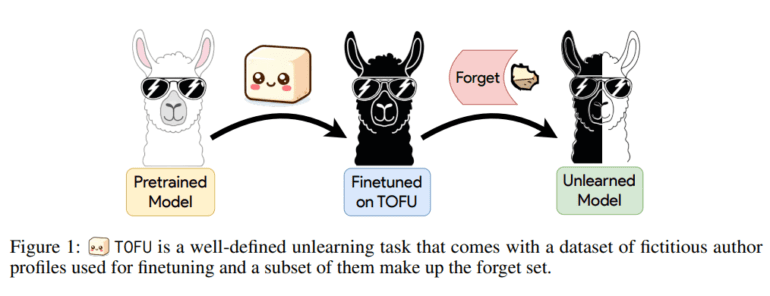

- Researchers from Carnegie Mellon introduced the Task of Fictitious Unlearning (TOFU) to evaluate unlearning in LLMs.

- TOFU assesses forget quality and model utility, revealing the limitations of existing unlearning methods.

- TOFU sets a benchmark, provides a robust dataset, and challenges the research community to improve unlearning techniques.

Main AI News:

Large Language Models (LLMs) have become essential tools in various fields, thanks to their ability to process and generate vast amounts of text. However, their training on extensive web data raises concerns about privacy and the inadvertent exposure of sensitive information. To tackle these ethical and legal challenges, the concept of unlearning has emerged, offering a promising solution.

Unlearning involves the deliberate modification of LLMs after their initial training to selectively ‘forget’ specific elements of their training data. The primary objective is to make these models more privacy-conscious by erasing sensitive information, without the need for costly and impractical retraining from scratch.

The central challenge is to devise methods that remove sensitive data from LLMs while preserving their usefulness. Earlier unlearning work has focused mainly on classification models, so generative models like LLMs remain relatively unexplored. Yet these generative models are widely deployed in real-world applications, and they pose a greater threat to individual privacy because they can reproduce what they learned in the text they generate.

In response to this gap, researchers from Carnegie Mellon University have introduced the Task of Fictitious Unlearning (TOFU) benchmark. TOFU is built on a synthetic dataset of 200 fictitious author profiles, each described by 20 question-answer pairs (4,000 pairs in total). A subset of these profiles, the ‘forget set’, is designated for unlearning. By varying the size of the forget set (1%, 5%, or 10% of the authors), researchers can systematically evaluate unlearning techniques at different levels of difficulty.
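To picture the dataset layout, here is a minimal Python sketch. The `QAPair` record, the placeholder question and answer text, and the helper names `build_placeholder_dataset` and `split_by_author` are hypothetical illustrations, not the released dataset's schema or loader. The one structural point it shows is that the forget set is chosen at the author level: every question about a "forgotten" author goes to the forget set, and all remaining pairs form the retain set.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class QAPair:
    # Hypothetical record layout, for illustration only.
    author_id: int
    question: str
    answer: str


def build_placeholder_dataset(num_authors: int = 200, pairs_per_author: int = 20) -> list[QAPair]:
    """Placeholder QA pairs matching the benchmark's size: 200 authors x 20 pairs."""
    return [
        QAPair(a, f"Question {q} about author {a}?", f"Answer {q} about author {a}.")
        for a in range(num_authors)
        for q in range(pairs_per_author)
    ]


def split_by_author(data: list[QAPair], forget_fraction: float, seed: int = 0):
    """Split at the author level: all pairs of a sampled author go to the forget
    set, everything else goes to the retain set."""
    authors = sorted({pair.author_id for pair in data})
    n_forget = round(len(authors) * forget_fraction)
    forget_authors = set(random.Random(seed).sample(authors, n_forget))
    forget = [p for p in data if p.author_id in forget_authors]
    retain = [p for p in data if p.author_id not in forget_authors]
    return forget, retain


data = build_placeholder_dataset()
forget, retain = split_by_author(data, forget_fraction=0.10)
print(len(data), len(forget), len(retain))  # 4000 400 3600
```

Splitting by author rather than by individual question keeps the forget and retain sets disjoint in subject matter, so what the model should forget is clearly separated from what it should keep.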

TOFU evaluates unlearning along two critical dimensions:

- Forget Quality: This dimension measures how well the model has forgotten the forget set. For each forget-set question, TOFU compares the probability the model assigns to the correct answer with the probability it assigns to false answers. A statistical test then contrasts the unlearned model with gold-standard ‘retain’ models, which were trained only on the data that was never meant to be forgotten. The harder the two are to tell apart, the higher the forget quality.

- Model Utility: This dimension checks that unlearning has not damaged the model's other knowledge. It aggregates several metrics over new evaluation datasets of decreasing relevance to the forget set: the remaining fictitious authors, real-world authors, and general world facts.
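The comparison of correct-versus-false answer probabilities, followed by a statistical test against a retain model, can be sketched in Python. This is a minimal illustration under stated assumptions, not the benchmark's code: it assumes you already have summed log-probabilities and token counts for each answer (obtaining them from a model is out of scope), and `truth_ratio` is a simplified, hypothetical form of the per-question score. The test shown is the two-sample Kolmogorov-Smirnov (KS) statistic, which measures how far apart two distributions of scores are.

```python
import math
from bisect import bisect_right


def truth_ratio(correct_logprob: float, correct_len: int,
                false_logprobs: list[float], false_lens: list[int]) -> float:
    """Simplified per-question score (assumed form).

    Log-probabilities are length-normalized (divided by token count) so long
    and short answers are comparable. The result is the mean normalized
    probability of the false answers divided by that of the correct answer:
    near 0 means the model clearly prefers the correct answer.
    """
    p_correct = math.exp(correct_logprob / correct_len)
    p_false = [math.exp(lp / n) for lp, n in zip(false_logprobs, false_lens)]
    return (sum(p_false) / len(p_false)) / p_correct


def ks_statistic(sample_a: list[float], sample_b: list[float]) -> float:
    """Two-sample KS statistic: the largest gap between the empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    gap = 0.0
    # Both empirical CDFs are step functions, so the maximum gap is reached
    # at one of the observed values.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


# Hypothetical per-question scores on the forget set.
unlearned_scores = [0.20, 0.25, 0.30, 0.35]
retain_scores = [0.80, 0.90, 1.00, 1.10]
print(ks_statistic(unlearned_scores, retain_scores))  # 1.0
```

A large statistic, as in this made-up example, means the unlearned model still behaves very differently from a model that never saw the forget set. If SciPy is available, `scipy.stats.ks_2samp(unlearned_scores, retain_scores)` returns both the statistic and a p-value for the same test.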

TOFU’s findings indicate that existing unlearning methods fall short of achieving effective data erasure. This underscores the necessity for continued research and innovation in developing unlearning techniques that enable models to behave as if they have never encountered sensitive data.

The TOFU framework matters for LLM research and data privacy in several ways:

- Pioneering Benchmark: TOFU establishes a dedicated benchmark for unlearning in LLMs, offering a structured and quantifiable way to compare unlearning techniques.

- Robust Dataset: Because the author profiles are fictitious, the model can only have learned about them during fine-tuning on TOFU. The information to be unlearned is therefore well-defined, and its removal can be rigorously evaluated.

- Comprehensive Evaluation: TOFU measures both forget quality and model utility, so a method cannot score well simply by erasing the forget set at the expense of everything else the model knows.

- Challenging the Status Quo: By exposing the limitations of existing unlearning algorithms, TOFU challenges the research community to develop more effective and privacy-centric solutions.

While TOFU represents a significant step forward in unlearning research, it has limitations. The framework focuses on entity-level forgetting and omits instance-level and behavior-level unlearning, which are also important aspects of this domain. Additionally, TOFU does not address the alignment of LLM behavior with human values, which can be seen as another facet of unlearning in the context of ethical AI.

Conclusion:

TOFU introduces a pioneering benchmark for unlearning in Large Language Models and shows that current methods fall short, highlighting the need for better privacy-centric solutions. It focuses on entity-level forgetting, leaving out instance-level and behavior-level unlearning, and it does not address aligning LLM behavior with human values, which leaves room for further research in ethical AI. For the market, the message is that businesses cannot yet rely on unlearning alone to remove sensitive data from deployed models and should invest in more robust data privacy measures for their AI applications.