TL;DR:

- InfoBatch, a groundbreaking AI framework, offers lossless training acceleration through dynamic data pruning.

- Traditional computer vision training methods strain computational resources, hindering accessibility for researchers.

- Existing solutions, such as dataset distillation and corset selection, often compromise model performance.

- InfoBatch’s dynamic and unbiased data pruning maintains a gradient expectation, achieving unprecedented efficiency without sacrificing accuracy.

- It outperforms state-of-the-art methods by at least tenfold in efficiency across various tasks.

- Practical benefits include substantial cost savings in computational resources and time, making AI more accessible.

Main AI News:

Balancing training efficiency with performance has long been a challenge in the realm of computer vision. Traditional training methods often rely on vast datasets, straining computational resources and presenting a hurdle for researchers lacking access to high-powered computing infrastructures. Unfortunately, many existing solutions aimed at reducing the sample size for training introduce new complexities or compromise the model’s original performance, undermining their effectiveness.

Addressing this challenge is essential for optimizing the training of deep learning models, which is both resource-intensive and critical. The key issue lies in meeting the computational demands of extensive datasets while maintaining the model’s effectiveness—a pressing concern for the field of machine learning, where practical and accessible applications depend on the harmonious coexistence of efficiency and performance.

Existing solutions encompass methods such as dataset distillation and corset selection, both designed to minimize the training sample size. However, they bring their own set of challenges. Static pruning methods, for instance, select samples based on specific metrics before training, often incurring additional computational costs and struggling with generalizability across various architectures or datasets. On the other hand, dynamic data pruning methods aim to reduce training costs by decreasing the number of iterations but face limitations in achieving lossless results and operational efficiency.

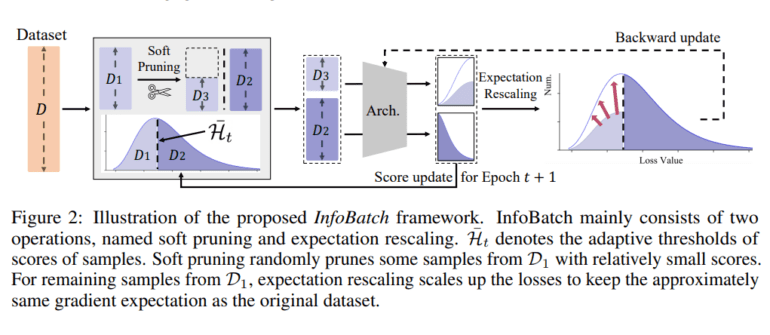

Enter InfoBatch, a revolutionary framework introduced by researchers from the National University of Singapore and Alibaba Group. InfoBatch stands apart from previous methodologies due to its dynamic and unbiased approach to data pruning. It continuously updates a loss-based score for each data sample during training. The framework then selectively prunes less informative samples with low scores while compensating for this pruning by scaling up the gradients of the remaining samples. This ingenious strategy effectively maintains a gradient expectation similar to the original, unpruned dataset, preserving the model’s performance.

InfoBatch has proven its ability to significantly reduce computational overhead, surpassing previous state-of-the-art methods by at least tenfold in efficiency. Remarkably, this efficiency gain does not come at the cost of performance. InfoBatch consistently achieves lossless training results across various tasks, including classification, semantic segmentation, vision pertaining, and fine-tuning language model instruction. In practical terms, this translates to substantial cost savings in computational resources and time. For example, when applied to datasets like CIFAR10/100 and ImageNet1K, InfoBatch has been shown to save up to 40% of the overall cost. Furthermore, for specific models like MAE and diffusion models, the cost savings soar to an impressive 24.8% and 27%, respectively.

Conclusion:

InfoBatch represents a game-changing advancement in AI training, addressing the critical challenge of balancing efficiency and performance in computer vision. This innovative framework has the potential to significantly reduce the computational costs associated with AI training while maintaining optimal model performance. As a result, it opens up new possibilities for researchers and businesses by making machine learning more accessible and cost-effective, paving the way for broader adoption and innovation in the AI market.