TL;DR:

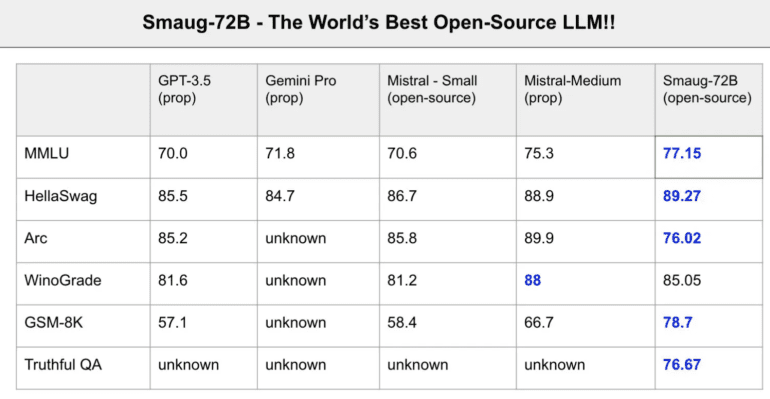

- Smaug-72B, a new open-source language model, outperforms proprietary models like GPT-3.5 and Mistral Medium.

- Developed by Abacus AI, Smaug-72B builds upon Qwen-72B, surpassing it in various benchmarks.

- It is the first open-source model to reach an average score above 80 on the Hugging Face Open LLM leaderboard.

- Excels in reasoning and math tasks due to advanced fine-tuning techniques.

- Qwen has also released Qwen 1.5, a suite of powerful language models, and Qwen-VL-Max, a competitive vision language model.

- The emergence of Smaug-72B and Qwen 1.5 marks a major leap in open-source AI innovation, challenging Big Tech’s dominance.

Main AI News:

In a notable development, a new open-source language model has taken the top spot in the latest rankings published by Hugging Face, a leading platform for natural language processing (NLP) research and applications.

Named “Smaug-72B,” the model was publicly released today by Abacus AI, a startup that focuses on solving difficult problems in artificial intelligence and machine learning. Smaug-72B is a fine-tuned version of “Qwen-72B,” a powerful language model released just a few months ago by Qwen, a research team at Alibaba Group.

What sets today’s launch apart is Smaug-72B’s superior performance over GPT-3.5 and Mistral Medium, two of the foremost proprietary large language models crafted by OpenAI and Mistral, respectively, across numerous popular benchmarks. Moreover, Smaug-72B outshines its precursor, Qwen-72B, by a substantial margin in various evaluations.

According to the Hugging Face Open LLM leaderboard, which measures the performance of open-source language models across a range of natural language understanding and generation tasks, Smaug-72B is now the first and only open-source model with an average score above 80 across all major LLM evaluations.

While that is still short of the 90-100 point range indicative of human-level proficiency, the arrival of Smaug-72B suggests that open-source AI could soon rival the capabilities of Big Tech, whose models have long been developed behind closed doors. In short, Smaug-72B’s launch could reshape the trajectory of AI development by drawing on the creativity of organizations beyond a small group of wealthy corporations.
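To make the "average score" concrete, here is a minimal Python sketch of how such a leaderboard average can be computed. It assumes the average is a plain unweighted mean over six benchmarks (ARC, HellaSwag, MMLU, TruthfulQA, Winogrande, and GSM8K); that list is not given in this article and reflects how the leaderboard was publicly described at the time. The function names and the scores are hypothetical placeholders, not Smaug-72B's reported results.

```python
from statistics import mean

# Assumption: the leaderboard average is an unweighted mean over these six
# benchmarks. The list is not stated in the article itself.
BENCHMARKS = ("ARC", "HellaSwag", "MMLU", "TruthfulQA", "Winogrande", "GSM8K")


def leaderboard_average(scores: dict[str, float]) -> float:
    """Return the unweighted mean of the per-benchmark scores (0-100 scale)."""
    missing = [name for name in BENCHMARKS if name not in scores]
    if missing:
        raise ValueError(f"missing benchmark scores: {missing}")
    return mean(scores[name] for name in BENCHMARKS)


def clears_threshold(scores: dict[str, float], threshold: float = 80.0) -> bool:
    """Check whether the average strictly exceeds the given threshold."""
    return leaderboard_average(scores) > threshold


# Placeholder values for illustration only; these are NOT real results.
example_scores = {
    "ARC": 75.0,
    "HellaSwag": 90.0,
    "MMLU": 77.0,
    "TruthfulQA": 76.0,
    "Winogrande": 85.0,
    "GSM8K": 80.0,
}

if __name__ == "__main__":
    avg = leaderboard_average(example_scores)
    print(f"average = {avg:.2f}, above 80: {clears_threshold(example_scores)}")
```

Because the mean is unweighted, a strong score on one benchmark (for example GSM8K, which the article links to the model's math-focused fine-tuning) lifts the overall average as much as an equal gain on any other benchmark.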

Unleashing the Power of Open Source

“Smaug-72B from Abacus AI is now accessible on Hugging Face, leading the LLM leaderboard, and represents the premier model with an 80+ average score!! Essentially, it stands as the globe’s paramount open-source foundational model,” proclaimed Bindu Reddy, CEO of Abacus AI, in an X.com announcement.

“Our subsequent objective involves disseminating these methodologies via a research paper and integrating them into several premier Mistral Models, including miqu (a 70B fine-tune of LLama-2),” she elaborated. “The methodologies we’ve employed specifically target reasoning and mathematical competencies, elucidating the elevated GSM8K scores! Further insights will be expounded upon in our forthcoming paper.”

With its debut, Smaug-72B becomes the first open-source model to exceed an average score of 80 on the Hugging Face Open LLM leaderboard, a notable milestone for natural language processing and open-source AI.

Smaug-72B does particularly well on reasoning and math tasks, a strength attributed to the techniques Abacus AI applied during fine-tuning. These techniques, to be detailed in an upcoming research paper, target weaknesses of large language models while improving their capabilities.

Smaug-72B is only the latest example of the recent rise of open-source language models. Qwen, the team behind Qwen-72B, recently released Qwen 1.5, a suite of language models ranging from 0.5B to 72B parameters.

Qwen 1.5 outperforms established proprietary models such as Mistral-Medium and GPT-3.5, supports a 32k context length, and integrates with various tools and platforms for fast, local inference. Qwen also unveiled Qwen-VL-Max, a large vision language model that competes with Gemini Ultra and GPT-4V, the top proprietary vision language models from Google and OpenAI, respectively.

Implications for the Future of AI

The arrival of Smaug-72B and Qwen 1.5 has generated considerable discussion and anticipation in the AI community and beyond. Experts and commentators have praised Abacus AI and Qwen for their contributions to open-source AI.

“It’s astounding to contemplate that merely a year ago, models like Dolly captured our attention,” remarked Sahar Mor, an influential AI analyst, via a LinkedIn post, marveling at the strides made by open-source models over the past year.

Smaug-72B and Qwen 1.5 are now available on Hugging Face for anyone to download, use, and customize. Abacus AI and Qwen have also announced plans to submit their models to the LMSYS human evaluation leaderboard, a new benchmark that evaluates language models on human-like tasks and scenarios. Both have also hinted at future projects aimed at spreading open-source models and deploying them across a range of domains and applications.

Smaug-72B and Qwen 1.5 are the latest chapter in the rapid evolution of open-source AI. They are part of a growing wave of AI innovation and democratization that challenges the dominance of major tech companies and opens new possibilities for everyone. Only time will tell how long Smaug-72B stays atop the Hugging Face leaderboard, but open-source AI is clearly off to a strong start this year.

Conclusion:

The emergence of Smaug-72B and Qwen 1.5 signals a profound shift in the landscape of open-source AI. With these models surpassing proprietary counterparts and achieving remarkable performance milestones, the market can anticipate increased competition and innovation. This trend underscores the growing democratization of AI, offering new opportunities for diverse stakeholders while challenging the longstanding dominance of major tech corporations.