TL;DR:

- AWS AI Labs unveils CodeSage, a cutting-edge bidirectional encoder representation model for source code.

- Traditional approaches in code representation learning face limitations in scalability and data comprehensiveness.

- CodeSage pioneers a two-stage training scheme, surpassing conventional methodologies in capturing semantic and structural nuances.

- The model strategically blends randomness in masking with the structured framework of programming languages, enhancing performance across diverse tasks.

- Comprehensive evaluation demonstrates CodeSage’s superiority in code generation, classification, and semantic search tasks.

- CodeSage signifies a leap forward in leveraging vast data sets and advanced pretraining strategies for precise representation of programming languages.

Main AI News:

Code representation learning, which trains models to encode source code in a form that serves both human and machine understanding of programming languages, remains an active area of AI research. Traditional methods laid the groundwork, but they are constrained in model scalability and in the comprehensiveness of their training data, which limits the nuanced understanding needed for advanced code manipulation tasks.

The core difficulty is training models that comprehend and generate programming code efficiently. Current approaches predominantly rely on large language models optimized with masked language modeling objectives. These methods often struggle to capture the blend of syntax and semantics specific to programming languages, including the natural language elements embedded in code.

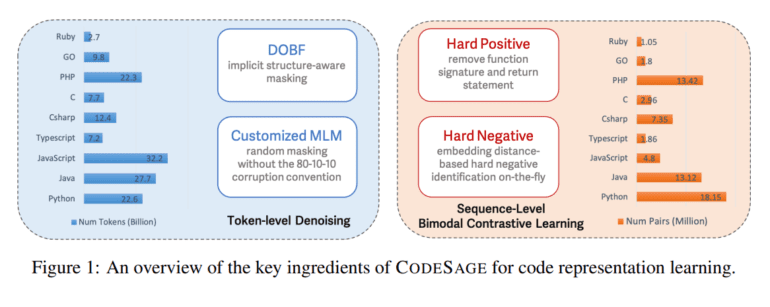

Researchers at AWS AI Labs recently unveiled CodeSage, a bidirectional encoder representation model built specifically for source code. The model follows a two-stage training regimen and is trained on a dataset larger than those conventionally used in this domain. The training combines identifier deobfuscation with an enhanced variant of masked language modeling that goes beyond traditional masking techniques. The aim is to capture the semantic and structural subtleties of programming languages more effectively.
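
To make the two token-level objectives named above more concrete, here is a minimal sketch of how training inputs for identifier deobfuscation and random masking could be built. It is an illustration only, not CodeSage's actual preprocessing: the function names, the `VAR_i` placeholders, the `[MASK]` string, and the masking rate are all assumptions chosen for the example, and it works on Python source only.

```python
import io
import keyword
import random
import tokenize

MASK = "[MASK]"  # hypothetical mask token, chosen for this sketch


def obfuscate_identifiers(source: str):
    """Replace each distinct identifier with a placeholder (VAR_0, VAR_1, ...).

    Returns the obfuscated token list and the placeholder -> original name
    mapping, which is what a deobfuscation objective would ask a model to recover.
    Note: this simple version also obfuscates builtins such as `print`.
    """
    mapping = {}
    tokens = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.NAME and not keyword.iskeyword(tok.string):
            tokens.append(mapping.setdefault(tok.string, f"VAR_{len(mapping)}"))
        elif tok.string.strip():  # skip newline/indent/dedent/end markers
            tokens.append(tok.string)
    return tokens, {placeholder: name for name, placeholder in mapping.items()}


def random_mask(tokens, rate=0.15, seed=0):
    """Independently replace each token with MASK with probability `rate`.

    Returns the masked sequence and a position -> original token mapping
    that serves as the prediction target.
    """
    rng = random.Random(seed)
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < rate:
            masked.append(MASK)
            targets[i] = tok
        else:
            masked.append(tok)
    return masked, targets


if __name__ == "__main__":
    code = "def add(a, b):\n    return a + b\n"
    obfuscated, names = obfuscate_identifiers(code)
    print(obfuscated)  # identifiers replaced by VAR_0, VAR_1, VAR_2
    print(names)       # targets for deobfuscation
    print(random_mask(obfuscated, rate=0.3, seed=1))
```

The contrast between the two functions is the point of the sketch: deobfuscation hides tokens selected by the structure of the language (identifiers), while random masking hides tokens regardless of their role.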

At the core of CodeSage’s methodology is a combination of random masking with the structured nature of programming languages, further enriched through contrastive learning. The contrastive step constructs challenging negative and positive examples, and the resulting model significantly outperforms existing models across a diverse range of downstream tasks. The researchers’ examination of the components that matter for effective code representation learning highlights the importance of token-level denoising and the crucial role of challenging examples in improving model performance.
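
Why challenging negatives matter can be seen in a small numerical sketch of a contrastive objective. The code below uses a standard InfoNCE-style loss over cosine similarities as a stand-in; the article does not specify CodeSage's exact loss, so the formulation, the temperature value, and the toy two-dimensional vectors are all assumptions made for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.05):
    """InfoNCE-style loss: -log softmax score of the positive among all candidates."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    # Numerically stable log-sum-exp over all candidates.
    peak = max(logits)
    log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_denominator - logits[0]


if __name__ == "__main__":
    anchor = [1.0, 0.0]
    positive = [0.9, 0.1]
    easy_negative = [-1.0, 0.0]  # points away from the anchor
    hard_negative = [0.8, 0.3]   # nearly as close to the anchor as the positive

    print("easy negative loss:", info_nce(anchor, positive, [easy_negative]))
    print("hard negative loss:", info_nce(anchor, positive, [hard_negative]))
```

With an easy negative the loss is already close to zero, so it provides almost no training signal. A hard negative that sits near the anchor yields a noticeably larger loss, which is the intuition behind constructing challenging examples.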

A comprehensive evaluation shows CodeSage leading across multiple metrics. The model performs strongly on code generation and classification tasks, surpassing its predecessors by a considerable margin. Particularly noteworthy is its performance on semantic search tasks, both within a single language and across languages, which reflects how vast datasets and sophisticated pretraining strategies help the model capture the multifaceted nature of programming languages.

Conclusion:

The introduction of CodeSage by AWS AI Labs marks a significant advance in code representation learning. Its training approach, strong performance, and ability to capture semantic and structural nuances point to more efficient and accurate interaction between machines and programming languages. The development also underscores the growing importance of AI models tailored to specific domains, signaling potential opportunities for businesses to improve productivity and capabilities in software development and related fields.