TL;DR:

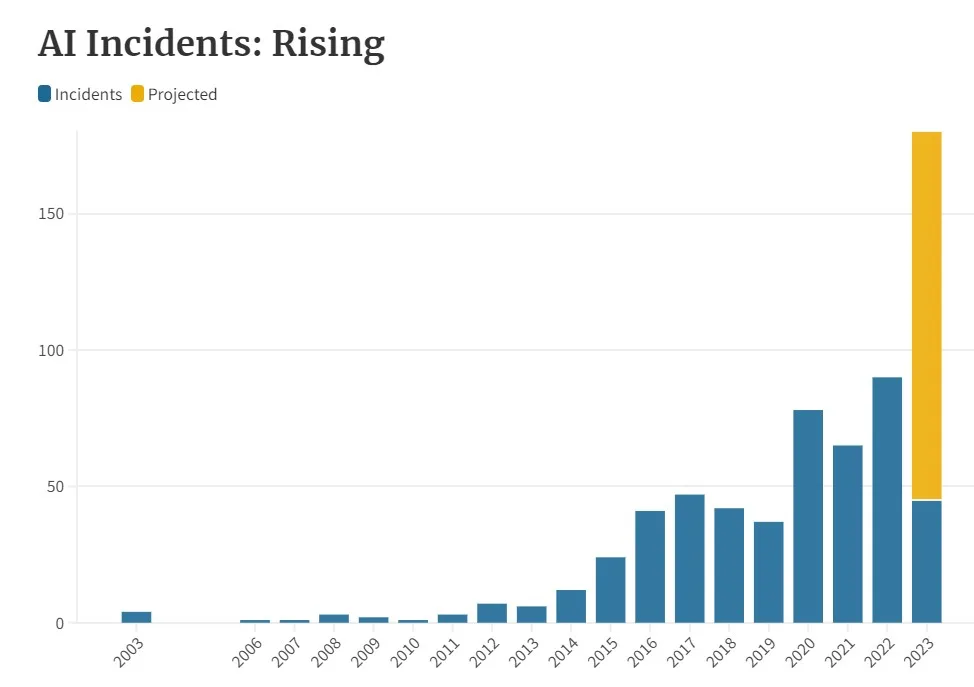

- The frequency of AI-related accidents and near-misses is increasing rapidly and is expected to only intensify in the coming years.

- The number of AI incidents is expected to more than double by the end of 2023, following a similar trajectory to Moore’s law.

- The widespread use of AI presents new challenges for measuring safety, as it is difficult to measure the “distance” AI is traveling.

- Rapid advances in AI technology have brought a significant increase in AI-related incidents, ranging from the socially unacceptable to the fatal.

- One of the most concerning incidents recorded in the AI Incident Database involved the closest the world has ever come to a full-scale nuclear war.

- The AI Incident Database is run by the Responsible AI Collaborative, a not-for-profit organization established to promote AI safety. The database is open-source and relies on a global network of volunteers for support.

- It is crucial for policymakers, commercial entities, and society as a whole to take proactive measures to minimize the frequency of AI-related incidents and ensure the safe and responsible use of AI technology.

Main AI News:

As AI systems continue to permeate various industries, the frequency of AI-related accidents and near-misses has skyrocketed. This rising trend of incidents, ranging from self-driving car accidents to AI systems producing offensive content, is expected to only intensify in the coming years, according to AI experts. In 2023, the rollout of AI systems has reached new heights, with numerous organizations racing to develop learning models in fields such as image generation, finance, and automated tasks.

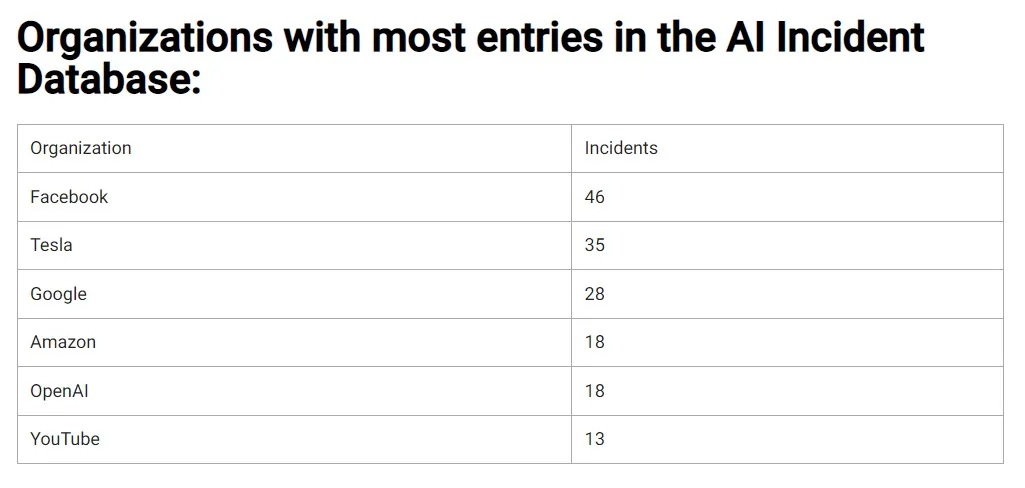

However, with the exponential growth of AI deployment comes a growing number of problematic incidents, some of which have had devastating consequences. The AI Incident Database, which tracks AI-related mistakes and incidents, paints a concerning picture. Currently, the database has documented over 500 incidents, with a sharp increase in the number of incidents reported in 2023 alone. In 2022, the database recorded 90 incidents, while in the first three months of 2023, 45 incidents have already been reported.

At this rate, the number of AI incidents recorded in 2023 would be double the 2022 total, and the project's founder expects more. According to Sean McGregor, who founded the AI Incident Database project and holds a Ph.D. in machine learning, “We expect AI incidents to follow a similar trajectory to Moore’s law, with incidents far more than doubling in 2023.”
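The projection rests on simple arithmetic, sketched below using only the two counts quoted above. The helper name `annualize` is hypothetical, and the calculation assumes incidents keep arriving at the first-quarter pace for the rest of the year, which is a simplification rather than the database's own forecasting method.

```python
def annualize(count_so_far: int, months_elapsed: int) -> float:
    """Linearly extrapolate a partial-year count to a full 12 months.

    Assumes a constant monthly rate, which is an illustrative simplification.
    """
    if not 0 < months_elapsed <= 12:
        raise ValueError("months_elapsed must be between 1 and 12")
    return count_so_far * 12 / months_elapsed


# Figures quoted in the article.
incidents_2022 = 90
incidents_2023_q1 = 45  # first three months of 2023

projected_2023 = annualize(incidents_2023_q1, months_elapsed=3)
growth_factor = projected_2023 / incidents_2022

print(f"Projected 2023 incidents: {projected_2023:.0f}")  # 180
print(f"Growth over 2022: {growth_factor:.1f}x")          # 2.0x
```

A constant rate yields a doubling, so the "far more than doubling" that McGregor expects would require the reporting pace to accelerate during the year.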

Moore’s law, a prediction first made by Intel co-founder Gordon Moore in 1965 and revised in 1975, holds that the number of transistors on an integrated circuit doubles approximately every two years, leading to a corresponding increase in computing speed and capability. However, the widespread use of AI presents new challenges for measuring safety. As McGregor explains: “It’s difficult to measure the safety of AI systems because we only observe the failures, not the successes. There’s no way to measure the ‘distance’ AI is traveling, unlike in other business sectors such as aviation.”

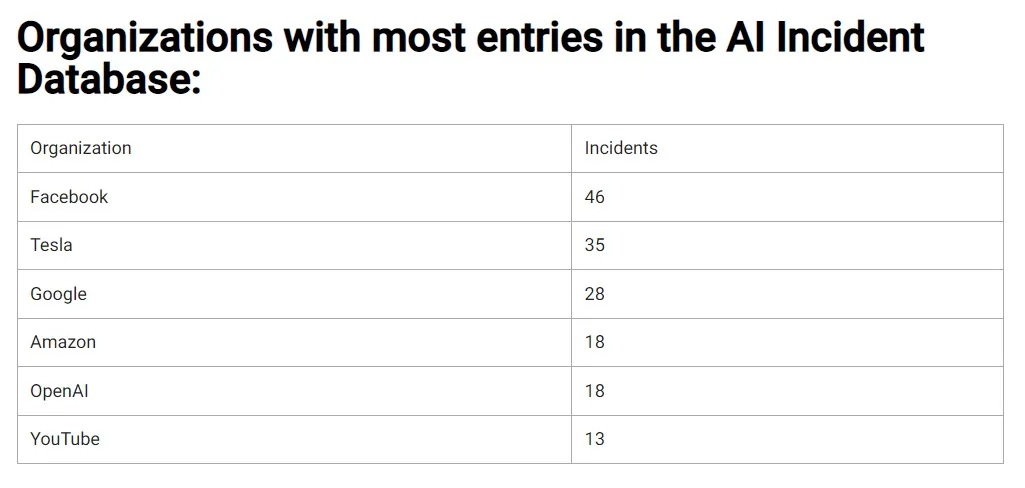

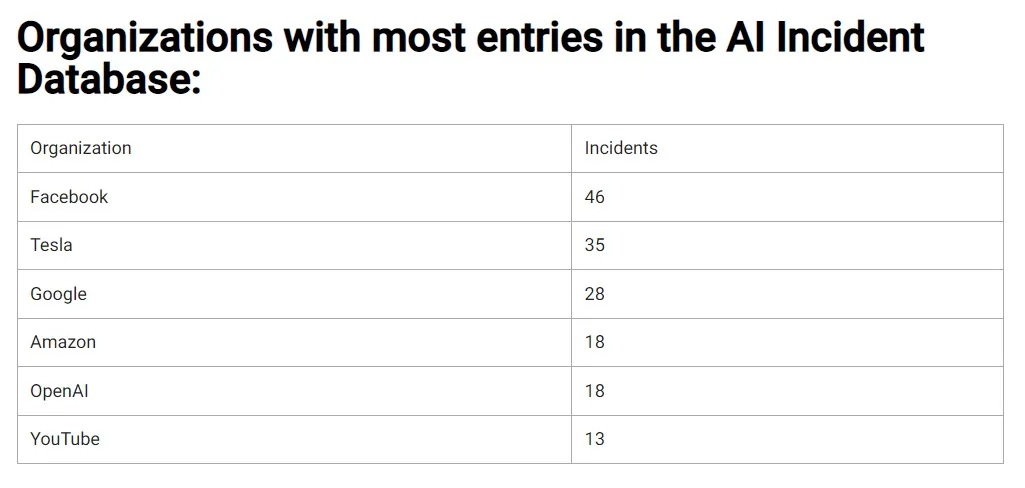

Source: AI Incident Database

Rapid advances in AI technology have brought a significant increase in the number of AI-related accidents and incidents, ranging from the socially unacceptable to the fatal. From Google Photos labeling Black individuals as “gorillas” to a fatal crash involving a Tesla driver relying on Autopilot, the consequences of AI gone wrong are far-reaching.

One of the most concerning incidents recorded in the AI Incident Database marks the closest the world has ever come to a full-scale nuclear war. In 1983, a Soviet lieutenant colonel made the critical decision to ignore a false alarm of a missile attack from the U.S., potentially saving millions of lives and the future of civilization.

The cause of the false alarm was later determined to be sunlight reflecting off clouds.

The AI Incident Database is run by the Responsible AI Collaborative, a not-for-profit organization established to promote AI safety. The database is open-source and relies on a global network of volunteers for support. With AI systems becoming increasingly prevalent, it is vital for policymakers, commercial entities, and society as a whole to take proactive measures to minimize the frequency of AI-related incidents.

According to Sean McGregor, founder of the database, “As AI continues to play a larger role in our daily lives, it is imperative that we take the necessary steps to ensure its safe and responsible use. This includes building the social infrastructure necessary to minimize the occurrence of AI-related incidents and ensuring that policymakers and commercial actors are equipped to handle the challenges posed by AI technology.”

Source: NEWSWEEK DIGITAL LLC

Conclusion:

The increasing frequency of AI-related incidents presents both a challenge and an opportunity for the market. On the one hand, the rising number of AI incidents highlights the need for increased investment in AI safety and responsible use of AI technology. On the other hand, the growing concern about AI safety presents a significant business opportunity for companies offering solutions to minimize the occurrence of AI-related incidents.

Companies that can demonstrate their ability to provide safe and responsible AI technology will likely enjoy a competitive advantage in the market, and there may be a growing demand for their services. As AI continues to play a larger role in various industries, it is crucial for businesses to stay ahead of the curve and be proactive in addressing the challenges posed by AI technology.