- GNNBench addresses the lack of a standardized benchmark for Graph Neural Network (GNN) systems, a gap that has hidden flaws in system design and evaluation.

- Developed by William & Mary researchers, GNNBench streamlines tensor data exchange, supports custom classes in system APIs, and integrates with PyTorch and TensorFlow.

- By integrating several existing GNN systems, it exposes critical measurement issues and enables fairer comparisons.

- It tackles graph format disparities and framework plugin limitations by providing stable APIs for consistent evaluation.

- GNNBench introduces a producer-only DLPack protocol that lets it use framework tensors without transferring ownership, improving system flexibility.

- It integrates with PyTorch, TensorFlow, and MXNet, so researchers can run experiments across frameworks.

- Overall, GNNBench fosters reproducibility, comprehensive evaluation, and innovation in GNN systems.

Main AI News:

In the realm of Graph Neural Networks (GNNs), the absence of a standardized benchmark has been a quiet bottleneck, masking pitfalls in system design and evaluation. Established graph benchmarks such as Graph500 and LDBC fall short when applied to GNNs because GNN workloads differ fundamentally in computation and storage and rely heavily on deep learning frameworks. GNN systems aim to optimize runtime and memory efficiency while preserving model semantics. However, design flaws and inconsistent evaluation methodologies have impeded progress.

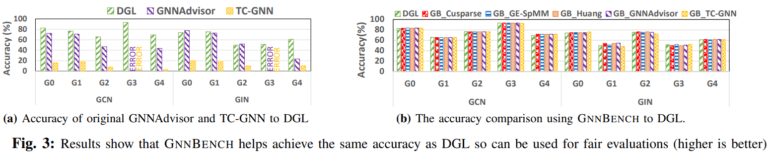

Enter GNNBench, developed by researchers at William & Mary. The platform is built to streamline the exchange of tensor data, support custom classes in system APIs, and integrate with leading frameworks such as PyTorch and TensorFlow. By integrating several existing GNN systems into the platform, the researchers uncovered critical measurement discrepancies. GNNBench aims to relieve researchers of integration complexity and evaluation ambiguity: its stable APIs, productivity gains, and framework-agnostic design allow faster prototyping and fair comparisons across systems.

GNNBench targets the main obstacles to fair and efficient benchmarking of existing GNN systems. It provides stable APIs for integration and evaluation, addressing the instability caused by differing graph formats and kernel variants across systems. It also works around two framework limitations: PyTorch and TensorFlow plugins cannot readily accept custom graph objects, and GNN operations in system APIs often need additional metadata, which leads to inconsistencies between systems. The framework overhead and involved integration procedures of DGL (Deep Graph Library) further underline the need for a standardized, extensible benchmarking framework like GNNBench.
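To make the graph format problem concrete, here is a minimal sketch of converting between two common sparse graph layouts: a COO edge list and CSR arrays. The function name `coo_to_csr` and the toy graph are illustrative assumptions, not part of GNNBench; the point is only that two systems expecting different layouts cannot share a graph object without a conversion step like this one.

```python
from typing import List, Tuple


def coo_to_csr(num_nodes: int, edges: List[Tuple[int, int]]) -> Tuple[List[int], List[int]]:
    """Convert a COO edge list of (src, dst) pairs into CSR (row_ptr, col_idx).

    Hypothetical helper for illustration; not a GNNBench API.
    """
    # Count outgoing edges per source node, shifted by one slot.
    row_ptr = [0] * (num_nodes + 1)
    for src, _ in edges:
        row_ptr[src + 1] += 1

    # A prefix sum turns per-node counts into row start offsets.
    for i in range(num_nodes):
        row_ptr[i + 1] += row_ptr[i]

    # Write each destination into the next free slot of its source row.
    col_idx = [0] * len(edges)
    next_slot = row_ptr[:-1]  # slicing copies, so row_ptr is not modified
    for src, dst in edges:
        col_idx[next_slot[src]] = dst
        next_slot[src] += 1

    return row_ptr, col_idx


# Toy directed graph with 3 nodes and 4 edges.
edges = [(0, 1), (0, 2), (1, 2), (2, 0)]
row_ptr, col_idx = coo_to_csr(3, edges)
print(row_ptr, col_idx)
```

The neighbors of node `v` are then `col_idx[row_ptr[v]:row_ptr[v + 1]]`, which is the access pattern many GNN kernels rely on.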

GNNBench introduces a producer-only DLPack protocol for exchanging tensors between DL frameworks and third-party libraries. Unlike the conventional approach, this protocol lets GNNBench use DL framework tensors without transferring ownership of them, which improves system flexibility and reusability. Integration code is generated automatically, so the platform can be adapted to different DL frameworks with little manual effort. A domain-specific language (DSL) drives this code generation for system integration, giving researchers a streamlined way to prototype and implement kernel fusion or other system innovations.
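The idea of using a framework's tensor without taking ownership can be sketched with Python's built-in buffer protocol. This is an analogy only: it does not use DLPack or any GNNBench API, and `scale_in_place` is a hypothetical stand-in for a system kernel. It shows the ownership pattern the article describes, in which the producer (the framework) keeps the memory and the consumer borrows a zero-copy view.

```python
from array import array


def scale_in_place(view: memoryview, factor: float) -> None:
    """Hypothetical 'system kernel' that writes through a borrowed view."""
    for i in range(len(view)):
        view[i] = view[i] * factor


# The "framework" allocates the tensor storage and remains its owner.
framework_tensor = array("f", [1.0, 2.0, 3.0])

# Producer side: export a zero-copy view. The kernel never owns the memory,
# and the view is released when the block exits.
with memoryview(framework_tensor) as borrowed:
    scale_in_place(borrowed, 2.0)

# The framework's own tensor reflects the kernel's writes; nothing was copied.
result = framework_tensor.tolist()
print(result)
```

Because the kernel only borrows the buffer, the framework stays responsible for freeing it, and the same kernel can be reused with any producer that exposes a compatible view.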

GNNBench integrates with popular deep learning frameworks including PyTorch, TensorFlow, and MXNet, which makes it a convenient platform for cross-framework experimentation. Its evaluation focuses primarily on PyTorch, but its TensorFlow integration, demonstrated with GCN (Graph Convolutional Network), suggests it can be adapted to any mainstream DL framework. This lets researchers compare GNN performance across environments on equal terms, improving reproducibility and supporting more comprehensive evaluation.

Conclusion:

GNNBench represents a significant advance for Graph Neural Network systems. By addressing longstanding challenges in benchmarking and evaluation, it streamlines development, fosters fair comparisons, and accelerates innovation. Its producer-only DLPack protocol and framework integration raise the bar for efficiency and adaptability in the field. GNNBench is poised to spur further growth and competition in the market for GNN systems, benefiting both research and industry applications.