- Google Research introduces ScreenAI, an advanced AI model for comprehending UIs and infographics.

- ScreenAI, based on the PaLI architecture, achieves top-tier performance across various tasks.

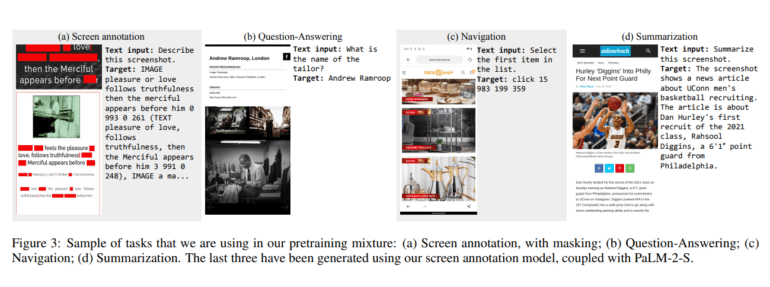

- Pre-trained on a diverse dataset, ScreenAI excels in answering questions, summarizing content, and navigating screens.

- Sets new performance records on benchmarks like WebSRC and MoTIF, surpassing other models in tasks such as Chart QA and InfographicVQA.

- Google releases three new evaluation datasets to foster further research and development in the field.

- Key modifications to the PaLI architecture enable ScreenAI to adapt to diverse resolutions and aspect ratios.

- Meticulously curated pretraining data, annotated using an automated pipeline, forms the foundation of ScreenAI’s capabilities.

- Rigorous fine-tuning across multiple datasets confirms ScreenAI’s status as a frontrunner in AI-driven understanding of UIs and infographics.

Main AI News:

In a recent breakthrough, Google Research unveiled ScreenAI, a cutting-edge multimodal AI model designed to comprehend infographics and user interfaces (UIs) with unprecedented precision. Built upon the robust PaLI architecture, ScreenAI showcases state-of-the-art performance across various tasks, marking a significant leap in AI technology’s capabilities.

ScreenAI’s development began with a pre-training phase on a large dataset of screenshots gathered through web crawling and automated app interactions. Using a suite of off-the-shelf AI models, including Optical Character Recognition (OCR) to annotate the screenshots and a Large Language Model (LLM) to generate user queries about them, Google researchers assembled the training data. The result is a five-billion-parameter model that can answer questions, summarize content, and navigate UI screens.

Notably, ScreenAI set new state-of-the-art results on the WebSRC and MoTIF benchmarks and outperformed comparably sized models on Chart QA, DocVQA, and InfographicVQA. To support further work in the field, Google has released three new evaluation datasets for screen-based question-answering (QA) models, giving researchers a common way to benchmark and refine their own models.

Despite these results, Google acknowledges that ScreenAI still trails much larger models such as GPT-4 and Gemini. The newly released evaluation datasets are meant to help close that gap by supporting further research on AI models for screen-related tasks.

At the heart of ScreenAI lies the Pathways Language and Image model (PaLI) architecture, which combines a Vision Transformer (ViT) with an encoder-decoder LLM. To handle the diverse resolutions and aspect ratios found in UIs and infographics, Google engineers made one key modification: they replaced the fixed image patching grid with the flexible patching strategy from the Pix2Struct model, which chooses the grid dimensions so that they preserve the aspect ratio of the input image. This lets ScreenAI process input images of varying dimensions without distorting them.
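To make the patching idea concrete, here is a minimal Python sketch of aspect-ratio-preserving grid selection in the style of Pix2Struct. It is an illustration only, not ScreenAI's actual code: the function name `flexible_patch_grid` and the default values for `patch_size` and `max_patches` are assumptions chosen for the example.

```python
import math


def flexible_patch_grid(image_h, image_w, patch_size=16, max_patches=1024):
    """Pick a (rows, cols) patch grid that roughly preserves the aspect ratio.

    Hypothetical helper: the name and defaults are illustrative assumptions,
    not values taken from ScreenAI.
    """
    if image_h <= 0 or image_w <= 0:
        raise ValueError("image dimensions must be positive")

    # Find the resize factor at which the image, cut into square patches,
    # yields about max_patches patches in total.
    scale = math.sqrt(
        max_patches * (patch_size / image_h) * (patch_size / image_w)
    )

    # Round down so the patch budget is never exceeded, and keep at least
    # one patch along each axis for very thin images.
    rows = max(min(math.floor(scale * image_h / patch_size), max_patches), 1)
    cols = max(min(math.floor(scale * image_w / patch_size), max_patches), 1)
    return rows, cols


# A tall phone screenshot gets more rows than columns; a square image
# gets a square grid.
portrait = flexible_patch_grid(1920, 1080)
square = flexible_patch_grid(512, 512)
```

With a fixed square grid, the tall screenshot would be squashed before patching. Here the grid itself takes the shape of the image, and the image is resized to `rows * patch_size` by `cols * patch_size` pixels before being cut into patches.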

Underpinning ScreenAI’s capabilities is a carefully curated corpus of pretraining data, annotated by an automated pipeline. By detecting and classifying UI and infographic elements such as images, text, and buttons, Google’s system generates a screen schema annotation for each screenshot, laying the groundwork for subsequent training phases.
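As a rough illustration of what a screen schema annotation might contain, the Python sketch below turns a list of detected elements into a plain-text description. Everything in it is hypothetical: the `UIElement` fields, the `to_screen_schema` function, and the output format are assumptions made for this example and do not reproduce Google's actual schema.

```python
from dataclasses import dataclass


@dataclass
class UIElement:
    """One detected element. Field names are illustrative assumptions."""
    kind: str                        # e.g. "TEXT", "IMAGE", "BUTTON"
    box: tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max) in pixels
    text: str = ""                   # OCR text, if the element has any


def to_screen_schema(elements):
    """Serialize elements top-to-bottom, left-to-right, one per line.

    Hypothetical format: "<KIND> <x_min> <y_min> <x_max> <y_max> [text]".
    """
    ordered = sorted(elements, key=lambda e: (e.box[1], e.box[0]))
    lines = []
    for element in ordered:
        x_min, y_min, x_max, y_max = element.box
        line = f"{element.kind} {x_min} {y_min} {x_max} {y_max}"
        if element.text:
            line += f" {element.text}"
        lines.append(line)
    return "\n".join(lines)


detected = [
    UIElement("BUTTON", (40, 300, 200, 340), "Sign in"),
    UIElement("IMAGE", (0, 0, 360, 120)),
    UIElement("TEXT", (40, 150, 320, 180), "Welcome back"),
]
schema = to_screen_schema(detected)
```

A text description like this can then be handed to an LLM to generate questions, summaries, or navigation instructions about the screen, which is the role the article describes for the LLM in the data pipeline.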

To evaluate ScreenAI, researchers fine-tuned it on an array of publicly available datasets spanning navigation, summarization, and QA. ScreenAI set new state-of-the-art results on several of these benchmarks and remained competitive on the others, reaffirming its status as a frontrunner in AI-driven understanding of UIs and infographics.

As excitement mounts within the tech community, users eagerly anticipate Google’s next moves with ScreenAI. Speculation abounds about its potential integration into search result ranking algorithms. While Google has yet to release the model’s code or weights, its release of the evaluation datasets on GitHub signals a commitment to collaborative progress in AI research and development.

Conclusion:

Google’s unveiling of ScreenAI marks a significant milestone in the AI landscape, particularly in the realm of UI and infographic comprehension. With its stellar performance and contributions to research through dataset releases, ScreenAI not only demonstrates Google’s prowess in AI innovation but also signals a promising direction for the market, fostering collaboration and competition among industry players to push the boundaries of AI technology further.