- MicroGraph is a 3-billion-parameter model that takes a highly efficient approach to graph comprehension.

- It combines efficient visual encoding with a Program-of-Thoughts (PoT) learning strategy to improve performance.

- The model outperforms larger models in both performance and inference speed across a range of benchmarks.

- By learning to generate Python programs that work through numerical computations step by step, MicroGraph produces precise answers efficiently.

- The GraphQA-PoT dataset supports this learning paradigm, enriching available resources for training and evaluation.

- Beyond the model itself, MicroGraph sets a precedent for future research in graph comprehension.

- Visual Token Consolidation lets the model encode high-resolution graph images efficiently while preserving visual detail.

Main AI News:

Graphs are essential data-visualization tools for communicating information, making business decisions, and conducting academic research. As the volume of multimodal data grows, automated graph comprehension has become a pressing need and an increasingly active research area. Recent Multimodal Large Language Models (MLLMs) have shown strong abilities in understanding images and following instructions. However, existing graph comprehension models still face several challenges: large parameter counts, susceptibility to numerical computation errors, and inefficient encoding of high-resolution graphs.

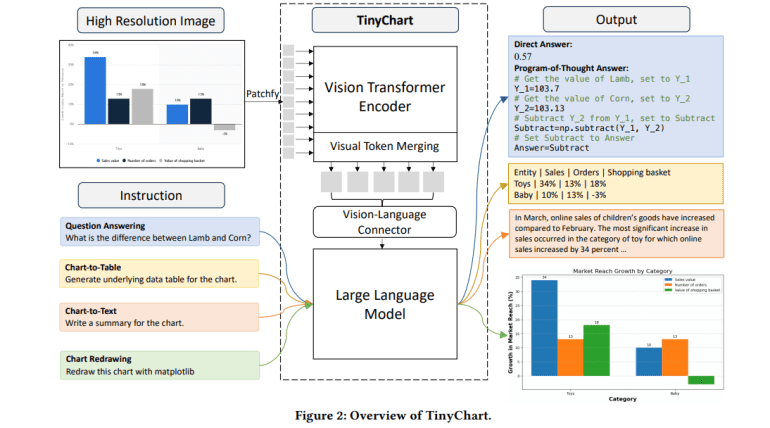

To address these limitations, a team of researchers from China has proposed MicroGraph. Despite having only 3 billion parameters, MicroGraph achieves state-of-the-art performance on a range of graph comprehension benchmarks while also delivering faster inference. Its efficiency rests on two techniques: efficient visual encoding and Program-of-Thoughts (PoT) learning. Building on prior work, Visual Token Consolidation shortens the sequence of visual features by merging similar tokens, which allows high-resolution graph images to be encoded without excessive computational cost.
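The article does not spell out how similar tokens are found or combined. As a rough illustration only, the sketch below implements bipartite token merging in the style of Token Merging (ToMe), a published technique of this kind. The alternating split, cosine similarity, size-weighted averaging, and the merge count `r` are all assumptions, not MicroGraph’s documented implementation.

```python
import math
from typing import List, Tuple

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def merge_tokens(tokens: List[Vector], sizes: List[int], r: int) -> Tuple[List[Vector], List[int]]:
    """Remove up to r tokens by merging each into its most similar partner.

    Illustrative ToMe-style sketch (assumed, not MicroGraph's code).
    tokens: visual feature vectors in sequence order.
    sizes:  how many original patches each token already represents.
    """
    if r <= 0 or len(tokens) < 2:
        return [list(t) for t in tokens], list(sizes)

    # Split the sequence into two alternating sets: A (even positions) and B (odd positions).
    a_idx = list(range(0, len(tokens), 2))
    b_idx = list(range(1, len(tokens), 2))

    # For every token in A, find its most similar token in B.
    edges = []
    for i in a_idx:
        j = max(b_idx, key=lambda k: cosine(tokens[i], tokens[k]))
        edges.append((cosine(tokens[i], tokens[j]), i, j))

    # Merge only the r most similar pairs; A tokens are never merge targets.
    edges.sort(reverse=True)
    merged = [list(t) for t in tokens]
    new_sizes = list(sizes)
    removed = set()
    for _, i, j in edges[:r]:
        total = new_sizes[i] + new_sizes[j]
        # Size-weighted average, so a token that absorbs several others stays a true mean.
        merged[j] = [(new_sizes[i] * x + new_sizes[j] * y) / total
                     for x, y in zip(merged[i], merged[j])]
        new_sizes[j] = total
        removed.add(i)

    keep = [k for k in range(len(tokens)) if k not in removed]
    return [merged[k] for k in keep], [new_sizes[k] for k in keep]


if __name__ == "__main__":
    patches = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
    out, counts = merge_tokens(patches, [1, 1, 1, 1], r=2)
    print(len(patches), "->", len(out), counts)
```

Because each token carries a `sizes` count, the size-weighted sum of all features is unchanged by merging; the sequence simply gets shorter, which is where the computational savings come from.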

In addition, MicroGraph’s Program-of-Thoughts (PoT) learning strategy substantially improves the model’s ability to handle numerical computations, a frequent weakness of existing graph comprehension models. The model is trained to generate Python programs that work through computational questions step by step, and running these programs yields precise answers efficiently. To support this learning paradigm, the researchers built the GraphQA-PoT dataset, using both template-based and GPT-based methods to generate question-answer pairs.
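The article does not give GraphQA-PoT’s templates or program format. The sketch below assumes a bar chart whose values have already been read into a label-to-value dictionary, and shows how two hypothetical question templates can each emit a question together with a Python program whose execution produces the answer. The function names, templates, and chart values are illustrative only.

```python
from typing import Any, Dict, Tuple

# Hypothetical values read off a bar chart (label -> value).
ChartData = Dict[str, float]


def difference_template(data: ChartData, a: str, b: str) -> Tuple[str, str]:
    """Hypothetical template: question text plus a program that computes the answer."""
    question = f"How much larger is {a} than {b}?"
    program = "\n".join([
        f"value_a = {data[a]!r}  # value of {a}",
        f"value_b = {data[b]!r}  # value of {b}",
        "answer = value_a - value_b",
    ])
    return question, program


def average_template(data: ChartData) -> Tuple[str, str]:
    """Hypothetical template: average over every bar in the chart."""
    question = "What is the average value across all bars?"
    program = "\n".join([
        f"values = {list(data.values())!r}",
        "answer = sum(values) / len(values)",
    ])
    return question, program


def run_program(program: str) -> float:
    """Execute a generated program and return the value it assigns to `answer`.

    NOTE: exec() is not a sandbox; model-generated code should be isolated in practice.
    """
    namespace: Dict[str, Any] = {}
    exec(program, namespace)
    return float(namespace["answer"])


if __name__ == "__main__":
    chart = {"2021": 12.5, "2022": 18.0, "2023": 15.5}
    for question, program in (difference_template(chart, "2022", "2021"),
                              average_template(chart)):
        print(question)
        print(program)
        print("->", run_program(program))
```

The design point of PoT is visible here: the model only has to write the steps correctly, while the arithmetic itself is done by the Python interpreter rather than in the model’s generated text.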

The release of MicroGraph marks a significant step forward in multimodal graph understanding. It outperforms larger MLLMs in both performance and speed, making it a practical option for real-world scenarios where computational resources are limited. By combining Visual Token Consolidation with Program-of-Thoughts learning, MicroGraph shows how targeted techniques can overcome the limitations of existing graph comprehension models, paving the way for more efficient and accurate data analysis and decision-making.

Beyond its technical innovations, MicroGraph contributes to the broader field of graph comprehension. Its approach to numerical computation, in which the model reasons through generated programs, improves its own performance and sets a precedent for future research in this area. The GraphQA-PoT dataset also adds to the resources available for training and evaluating graph comprehension models, giving researchers and practitioners a valuable asset.

Adopting Visual Token Consolidation in MicroGraph is an important step toward efficiently encoding high-resolution graph images. The method reduces computational cost while aiming to preserve the fidelity of the visual data, so that important details survive the encoding process. As a result, MicroGraph can handle complex graph structures accurately, helping users extract meaningful insights from a wide range of datasets.
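To see why fewer visual tokens matter at high resolution, consider an illustrative calculation with assumed numbers: a 768×768 input image (height $H$, width $W$) split into $p = 16$ pixel patches, as in a generic ViT-style encoder with hidden size $d$. These are not MicroGraph’s reported settings. The encoder produces one token per patch, and the self-attention cost of each layer grows roughly with the square of the token count $N$:

```latex
\begin{aligned}
N &= \frac{H}{p}\cdot\frac{W}{p} = \frac{768}{16}\cdot\frac{768}{16} = 48 \cdot 48 = 2304 \\
\text{attention cost per layer} &\propto N^{2} d, \qquad N^{2} = 2304^{2} = 5{,}308{,}416 \\
\left(\tfrac{N}{2}\right)^{2} &= 1152^{2} = 1{,}327{,}104 = \tfrac{1}{4}\,N^{2}
\end{aligned}
```

Under these assumptions, merging away half of the visual tokens cuts the attention term by roughly a factor of four, and the savings grow as input resolution increases.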

Conclusion:

MicroGraph represents a significant advance in graph analysis, offering a highly efficient solution for businesses, researchers, and decision-makers. Its combination of techniques improves both performance and speed and lays the groundwork for further developments in the field. By addressing key challenges in graph comprehension, MicroGraph paves the way for more effective data analysis and better-informed decisions, which could in turn strengthen competitiveness and productivity.