- The PEBOL algorithm combines LLMs with Bayesian optimization for efficient preference elicitation.

- It models user preferences with Beta distributions and uses decision-theoretic strategies to choose natural-language queries.

- PEBOL updates probabilistic beliefs iteratively, facilitating systematic exploration of user preferences.

- Evaluations on three datasets show significant MAP@10 improvements over a monolithic LLM baseline.

- PEBOL’s incremental belief updates make it resilient to the performance drops seen in the monolithic baseline, notably in noisy settings.

Main AI News:

In today’s digital landscape, optimizing user experiences is a top priority for businesses across many sectors. Picture this: you want to help a friend pick a movie for the evening, but their preferences are vague. Listing random titles rarely works; it is like navigating a maze blindfolded. Developers of conversational recommender systems face a similar challenge: quickly discovering what a user wants without any prior data about them.

Traditionally, users would rate or compare items directly, but this approach breaks down when the options are unfamiliar. Large Language Models (LLMs) such as GPT-3, known for understanding and generating human-like text, could in principle hold a dialogue that uncovers preferences organically. However, the naive approach of feeding an LLM item descriptions and expecting it to run a preference-eliciting conversation has clear drawbacks. First, supplying detailed descriptions for every item is computationally prohibitive, since they all have to fit into the prompt. Second, a monolithic LLM has no strategy for steering the conversation and often drifts into irrelevant tangents.

PEBOL (Preference Elicitation with Bayesian Optimization Augmented LLMs) addresses these problems by combining the language understanding of LLMs with a principled Bayesian optimization framework. Here is a concise overview of how it works:

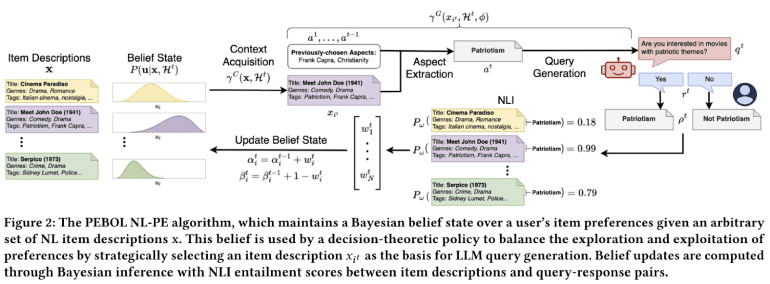

- Modeling User Preferences: PEBOL assumes that a latent “utility function” governs how much the user likes each item, and it represents its uncertainty about each item’s utility with a Beta distribution.

- Natural Language Queries: Using decision-theoretic policies such as Thompson Sampling, PEBOL selects an item and prompts the LLM to generate an aspect-based query from that item’s description, which keeps the conversation focused.

- Inferring Preferences via NLI: Rather than taking user responses at face value, PEBOL uses Natural Language Inference (NLI) to estimate how likely a response implies that the user would like each item.

- Bayesian Belief Updates: Treating these predicted preferences as evidence, PEBOL updates its probabilistic beliefs, which lets it systematically balance exploring and exploiting user preferences.

- Iterative Process: PEBOL repeats this cycle, refining its queries and homing in on uncertain preferences until it identifies the user’s most-preferred items.
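The cycle in the list above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors’ implementation: each item starts from a uniform Beta(1, 1) prior, the NLI score for every item is treated as a fractional “like” observation (alpha += p, beta += 1 − p), and both the LLM question-generation step and the NLI model are replaced by hypothetical stand-ins (`simulated_user`, `keyword_nli`).

```python
import random
from dataclasses import dataclass


@dataclass
class BetaBelief:
    """Beta(alpha, beta) belief over the probability that the user likes an item."""
    alpha: float = 1.0  # assumed uniform Beta(1, 1) prior
    beta: float = 1.0

    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

    def sample(self, rng: random.Random) -> float:
        return rng.betavariate(self.alpha, self.beta)

    def update(self, p_like: float) -> None:
        # Assumed soft update: p_like counts as a fractional "like"
        # and (1 - p_like) as a fractional "dislike".
        self.alpha += p_like
        self.beta += 1.0 - p_like


def thompson_select(beliefs, rng):
    """Thompson Sampling: draw one utility sample per item, ask about the best draw."""
    return max(beliefs, key=lambda item: beliefs[item].sample(rng))


def elicit(items, ask_user, nli_like_prob, turns=10, seed=0):
    """Run the select -> ask -> NLI -> update loop; return items ranked by posterior mean."""
    rng = random.Random(seed)
    beliefs = {item: BetaBelief() for item in items}
    for _ in range(turns):
        item = thompson_select(beliefs, rng)
        # In PEBOL an LLM would turn this item's description into an
        # aspect-based question; ask_user stands in for question + answer.
        response = ask_user(item)
        # Assumption: the response is scored against every item, not just the queried one.
        for other in items:
            beliefs[other].update(nli_like_prob(response, other))
    return sorted(items, key=lambda i: beliefs[i].mean(), reverse=True)


# --- Toy usage with hypothetical stand-ins for the user and the NLI model ---
ITEMS = [
    "sci-fi thriller about time travel",
    "romantic comedy set in Paris",
    "sci-fi epic on a desert planet",
    "documentary about jazz",
]


def simulated_user(item):
    # Hypothetical user who only enjoys sci-fi.
    return "Yes, I love sci-fi." if "sci-fi" in item else "Not really, I prefer sci-fi."


def keyword_nli(response, item):
    # Crude keyword stand-in for an NLI entailment probability.
    return 0.9 if "sci-fi" in response and "sci-fi" in item else 0.1


ranking = elicit(ITEMS, simulated_user, keyword_nli, turns=10)
print(ranking[:2])
```

Thompson Sampling draws one sample per item and asks about the highest draw, so items with wide (uncertain) Beta posteriors still get queried occasionally while items with high posterior means get queried most often. That is one standard way to balance exploration and exploitation; the PEBOL paper may also evaluate other acquisition policies.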

The core of PEBOL’s innovation is its combination of LLMs for natural query generation with Bayesian optimization for steering the conversation strategically. Because each prompt only needs the description of the item being asked about, rather than the whole catalog, this design keeps the context of every LLM prompt small while handling the exploration-exploitation trade-off.
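To make the context-size point concrete, here is a small Python comparison of two hypothetical prompt builders. Neither template comes from the paper: `monolithic_prompt` mimics a baseline that pastes the whole catalog into every prompt, while `pebol_style_prompt` sketches the idea of asking the LLM about a single selected item, with the dialogue history assumed to live in the Bayesian beliefs rather than in the prompt.

```python
def monolithic_prompt(catalog, history):
    """Hypothetical baseline prompt: every item description plus the dialogue so far."""
    items = "\n".join(f"- {d}" for d in catalog)
    dialogue = "\n".join(history)
    return (
        "You are a recommender. Here is every available item:\n"
        f"{items}\n"
        f"Conversation so far:\n{dialogue}\n"
        "Ask the user one question to learn their preferences."
    )


def pebol_style_prompt(item_description):
    """Hypothetical per-turn prompt: only the item chosen by the acquisition policy."""
    return (
        "Write one short question asking whether the user would enjoy "
        f"an item with these aspects:\n{item_description}"
    )


small_catalog = [f"Movie {i}: a short plot description." for i in range(10)]
large_catalog = [f"Movie {i}: a short plot description." for i in range(1000)]

for catalog in (small_catalog, large_catalog):
    print(
        len(catalog),
        len(monolithic_prompt(catalog, [])),  # grows with catalog size
        len(pebol_style_prompt(catalog[0])),  # independent of catalog size
    )
```

Character counts are only a rough proxy for tokens, but the trend is the point: the monolithic prompt scales with the catalog, while the per-item prompt does not.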

In evaluations on the MovieLens25M, Yelp, and Recipe-MPR datasets, PEBOL performed strongly. Compared with a monolithic GPT-3.5 baseline (MonoLLM), it achieved substantial improvements in Mean Average Precision at 10 (MAP@10) after only 10 conversational turns. PEBOL’s incremental belief updates also made it resilient to the performance drops MonoLLM exhibited, especially in simulated noisy environments.
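For readers unfamiliar with the metric, here is one common definition of MAP@10 in Python: for each user, sum precision@i over the ranks i ≤ 10 where a relevant item appears, normalize by min(number of relevant items, 10), then average across users. Normalization conventions vary between papers, so this sketch may differ in detail from the exact variant used in the PEBOL evaluation.

```python
def average_precision_at_k(ranked, relevant, k=10):
    """AP@k for one user: precision@i summed over hit ranks i <= k, then normalized."""
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for i, item in enumerate(ranked[:k], start=1):
        if item in relevant:
            hits += 1
            precision_sum += hits / i  # precision@i at this hit
    return precision_sum / min(len(relevant), k)


def map_at_k(runs, k=10):
    """MAP@k: AP@k averaged over (ranked_list, relevant_set) pairs, one per user."""
    return sum(average_precision_at_k(r, rel, k) for r, rel in runs) / len(runs)


# Worked example with two toy users.
runs = [
    (["a", "b", "c", "d"], {"a", "c"}),  # hits at ranks 1 and 3: (1/1 + 2/3) / 2 = 5/6
    (["x", "y"], {"y"}),                 # hit at rank 2: (1/2) / 1 = 1/2
]
print(map_at_k(runs))  # ≈ 0.667, i.e. (5/6 + 1/2) / 2 = 2/3
```

Under this definition, when each user has exactly one relevant item, AP@10 reduces to 1/rank if that item appears in the top 10 and 0 otherwise, so MAP@10 rewards ranking the target item near the top.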

Although PEBOL is a notable advance, the researchers acknowledge that it needs further refinement. Future work could explore generating contrastive multi-item queries or integrating preference elicitation into broader conversational recommendation frameworks. Still, by combining the strengths of LLMs with Bayesian optimization, PEBOL points toward AI systems that engage users in natural-language dialogue, uncover their preferences, and deliver personalized recommendations.

Conclusion:

Integrating PEBOL’s approach into conversational AI systems is a significant step forward in discovering user preferences. Businesses stand to benefit from better user engagement, more personalized recommendations, and improved overall user experiences, which can increase customer satisfaction and loyalty in competitive markets.