- OpenAI introduces new tool for detecting images created by its DALL-E AI image generator.

- The company also unveils enhanced watermarking methods to improve content identification.

- According to OpenAI, the image detection classifier identifies DALL-E 3 generated images with approximately 98% accuracy.

- OpenAI extends watermarking capabilities to audio clips generated by Voice Engine.

- Collaboration with the Coalition for Content Provenance and Authenticity (C2PA) strengthens content authenticity standards.

Main AI News:

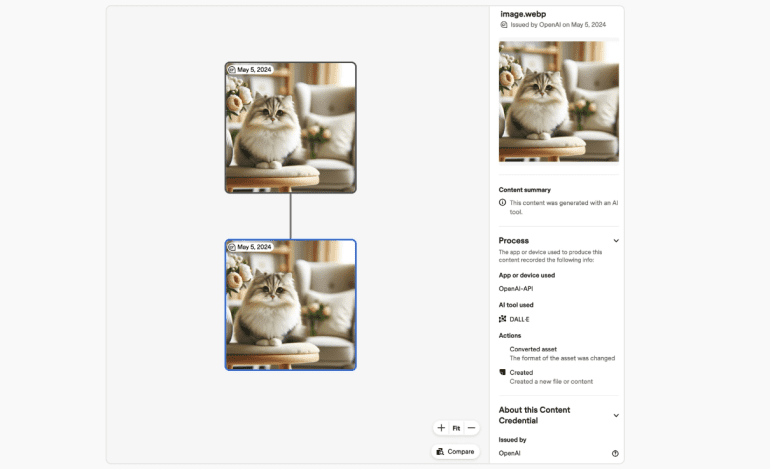

In a recent announcement, OpenAI disclosed its latest work on image detection and watermarking, aimed at addressing growing concerns about AI-generated content. The company introduced a tool designed to identify images created with its DALL-E AI image generator. It also unveiled new watermarking methods intended to make content generated through its platform easier to identify.

The centerpiece of these developments is an AI-based image detection classifier. The system predicts whether an image was produced by the DALL-E 3 model, and OpenAI says it continues to work after common alterations such as cropping, compression, or changes in saturation. The company reports an accuracy rate of approximately 98% in detecting images generated by its own model.
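A single accuracy figure such as the roughly 98% quoted above is easier to interpret when split into two numbers: how often generated images are caught, and how often ordinary images are wrongly flagged. The following is a minimal sketch of that calculation. The `Result` type, the `detection_rates` function, and the sample labels are all hypothetical and made up for illustration; they do not reflect OpenAI's classifier, its API, or its evaluation data.

```python
from dataclasses import dataclass


@dataclass
class Result:
    """One evaluated image (hypothetical structure for illustration)."""
    is_generated: bool  # ground truth: the image came from the generator
    flagged: bool       # the detector's verdict


def detection_rates(results):
    """Return (detection_rate, false_positive_rate) for a list of results."""
    generated = [r for r in results if r.is_generated]
    ordinary = [r for r in results if not r.is_generated]
    # Share of generated images the detector caught.
    detection_rate = (
        sum(r.flagged for r in generated) / len(generated) if generated else 0.0
    )
    # Share of ordinary images the detector wrongly flagged.
    false_positive_rate = (
        sum(r.flagged for r in ordinary) / len(ordinary) if ordinary else 0.0
    )
    return detection_rate, false_positive_rate


# Made-up labels: 50 generated images (49 caught), 50 ordinary images (2 flagged).
sample = (
    [Result(True, True)] * 49
    + [Result(True, False)] * 1
    + [Result(False, False)] * 48
    + [Result(False, True)] * 2
)

detection_rate, false_positive_rate = detection_rates(sample)
print(f"detection rate: {detection_rate:.0%}")
print(f"false positive rate: {false_positive_rate:.0%}")
```

In this toy sample the detector catches 49 of 50 generated images while also flagging 2 of 50 ordinary ones, which shows why a detection rate alone does not describe how a detector behaves on real-world images.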

OpenAI has also added tamper-resistant watermarking to its platform, which tags generated content with signals that are imperceptible to users. According to the company, these watermarks act as markers of authenticity, carrying information about ownership and how the content was created. The work is part of OpenAI’s broader effort to strengthen content provenance and combat misinformation online.

OpenAI acknowledges that both the classifier and the watermarking features are still being refined. The company is soliciting user feedback, which it considers essential to making the tools effective and reliable in real-world use.

Beyond images, OpenAI has extended its watermarking technology to audio clips generated by Voice Engine, the company’s text-to-speech platform. The expansion reflects an effort to protect the integrity of digital content across multiple media formats.

OpenAI is also working with industry peers through initiatives such as the Coalition for Content Provenance and Authenticity (C2PA). By embedding content credentials in image metadata, the company contributes to a broader ecosystem aimed at making content creation and distribution more transparent and accountable.

Looking ahead, OpenAI says it remains committed to improving its ability to detect AI-generated content, building on years of research and development in this area. Challenges persist, but the company presents this work as part of its effort to foster trust and integrity in an increasingly complex digital landscape.

Conclusion:

These advancements from OpenAI mark a notable step forward in content authentication and the detection of AI-generated material. Businesses involved in digital content creation and distribution may benefit from better tools to verify authenticity and combat misinformation, which in turn can foster trust among consumers and stakeholders.