- MARKLLM, an open-source toolkit for LLM watermarking, helps identify AI-generated text and counter misinformation.

- Developed by researchers from six universities, MARKLLM provides a unified framework that implements nine watermarking algorithms.

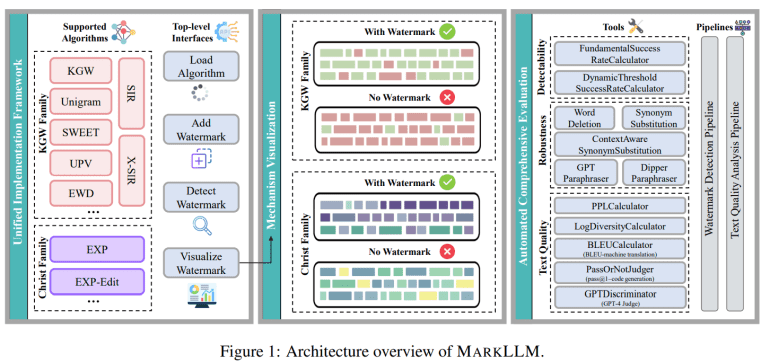

- It offers user-friendly interfaces for loading algorithms, generating watermarked text, detecting watermarks, and visualizing the results.

- MARKLLM's modular design tackles the fragmentation of LLM watermarking research by streamlining how algorithms are invoked and evaluated.

- The toolkit covers the two main algorithm families, KGW and Christ, and supports systematic assessment of watermark detectability and robustness.

- Comprehensive evaluations using MARKLLM highlight high detection accuracy and algorithm-specific strengths across various metrics and attack scenarios.


As AI-generated text becomes more common, distinguishing human from machine authorship is increasingly important. LLM watermarking addresses this by embedding subtle but statistically detectable signals in generated text so that its origin can later be verified. The technique holds significant promise against impersonation, ghostwriting, and the spread of fake news. The field nonetheless faces hurdles, chiefly the number and complexity of watermarking algorithms and the inconsistent ways they are evaluated. Shared, well-supported tooling is needed to move LLM watermarking forward, so that AI-generated content can be identified reliably and the integrity of digital discourse upheld.

MARKLLM: Revolutionizing LLM Watermarking Through Open-Source Innovation

MARKLLM is an open-source toolkit developed by researchers from Tsinghua University, Shanghai Jiao Tong University, The University of Sydney, UC Santa Barbara, The Chinese University of Hong Kong (CUHK), and The Hong Kong University of Science and Technology (HKUST). It provides a unified and extensible framework for implementing LLM watermarking algorithms. Supporting nine distinct methods across two major algorithm families, it gives researchers and the wider community user-friendly interfaces for loading algorithms, watermarking text, detecting watermarks, and visualizing data. Its modular design keeps the toolkit flexible and easy to extend, making it a useful catalyst for advancing LLM watermarking technology.
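To make the idea of a unified, extensible framework concrete, here is a minimal Python sketch of the name-based registry pattern that toolkits of this kind commonly use. It is not MARKLLM's actual API: `register`, `load_watermark`, `WatermarkAlgorithm`, and the toy `ToyFixedGreenList` detector (a single green list derived from a key, scored with a z-test) are hypothetical names chosen for illustration.

```python
import hashlib
import math
import random
from abc import ABC, abstractmethod

_REGISTRY: dict[str, type] = {}  # algorithm name -> implementing class (hypothetical)


def register(name: str):
    """Class decorator that makes an algorithm loadable by name."""
    def wrap(cls):
        _REGISTRY[name] = cls
        return cls
    return wrap


class WatermarkAlgorithm(ABC):
    """Common interface every algorithm implements (hypothetical)."""

    def __init__(self, config: dict):
        self.config = config

    @abstractmethod
    def detect(self, tokens: list[int]) -> dict:
        """Return {'is_watermarked': bool, 'score': float}."""


@register("ToyFixedGreenList")
class ToyFixedGreenList(WatermarkAlgorithm):
    """Toy detector with a single green list derived from the key."""

    def __init__(self, config: dict):
        super().__init__(config)
        digest = hashlib.sha256(config["key"].encode()).digest()
        rng = random.Random(int.from_bytes(digest[:8], "big"))
        vocab = config["vocab_size"]
        self.green = set(rng.sample(range(vocab), int(config["gamma"] * vocab)))

    def detect(self, tokens: list[int]) -> dict:
        if not tokens:
            raise ValueError("cannot score an empty token sequence")
        gamma, t = self.config["gamma"], len(tokens)
        hits = sum(tok in self.green for tok in tokens)
        # z-test: how far the green-token count sits above the gamma * t expected by chance
        z = (hits - gamma * t) / math.sqrt(t * gamma * (1 - gamma))
        return {"is_watermarked": z > self.config["z_threshold"], "score": z}


def load_watermark(name: str, config: dict) -> WatermarkAlgorithm:
    """Single entry point: switching algorithms only changes `name` and `config`."""
    if name not in _REGISTRY:
        raise ValueError(f"unknown algorithm {name!r}; available: {sorted(_REGISTRY)}")
    return _REGISTRY[name](config)
```

Under this design, adding an algorithm means writing one subclass and decorating it, while callers switch algorithms by changing only the name and configuration passed to `load_watermark`.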

The Two Families of LLM Watermarking: KGW and Christ

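Central to LLM watermarking are two main algorithm families: the KGW Family and the Christ Family. The KGW Family modifies the LLM's logits during generation. In the original KGW method, a secret key and the preceding token determine a "green list" covering part of the vocabulary, and a small bias is added to the logits of green tokens; watermarked text therefore contains more green tokens than chance would predict, and detection flags text whose green-token count exceeds a statistical threshold. Other members of the family vary how the lists are formed and scored. The Christ Family instead leaves the logits unchanged and uses pseudo-random sequences to guide token sampling; in EXP-sampling, for example, detection measures how strongly the text correlates with the pseudo-random sequence. Watermarking algorithms are evaluated along three axes: detectability, robustness against tampering, and impact on text quality, the last measured with metrics such as perplexity and diversity.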
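The two families can be contrasted in a small, self-contained toy. The sketch below assumes a 50-token vocabulary and stands in for the LLM with random Gaussian logits; the key, `GAMMA`, `DELTA`, and all function names are hypothetical. The KGW half follows the original green-list scheme (add `DELTA` to green logits, detect with a z-score on the green-token count). The Christ half uses one common formulation of exponential (EXP) sampling: pick the token maximizing r_i^(1/p_i) for pseudo-random r, and score text by the sum of -ln(1 - r) over the chosen tokens. Real implementations work on model logits and tokenizer vocabularies and differ in many details.

```python
import hashlib
import math
import random

VOCAB_SIZE = 50
GAMMA = 0.5        # KGW: fraction of the vocabulary on each green list
DELTA = 4.0        # KGW: bias added to green-token logits
KEY = b"demo-key"  # hypothetical secret key shared by generator and detector


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def keyed_rng(tag: bytes, prev_token: int) -> random.Random:
    """PRNG seeded from a hash of the key, a scheme tag, and the previous token."""
    digest = hashlib.sha256(KEY + tag + prev_token.to_bytes(4, "big")).digest()
    return random.Random(int.from_bytes(digest[:8], "big"))


def toy_logits(model_rng: random.Random) -> list[float]:
    """Stand-in for an LLM forward pass: random Gaussian logits."""
    return [model_rng.gauss(0.0, 1.0) for _ in range(VOCAB_SIZE)]


# --- KGW family: bias the logits toward a context-dependent green list ---
def green_list(prev_token: int) -> set[int]:
    rng = keyed_rng(b"kgw", prev_token)
    return set(rng.sample(range(VOCAB_SIZE), int(GAMMA * VOCAB_SIZE)))


def kgw_generate(prompt_token: int, length: int, model_rng: random.Random) -> list[int]:
    tokens = [prompt_token]
    for _ in range(length):
        greens = green_list(tokens[-1])
        logits = [x + (DELTA if t in greens else 0.0)
                  for t, x in enumerate(toy_logits(model_rng))]
        tokens.append(model_rng.choices(range(VOCAB_SIZE), weights=softmax(logits))[0])
    return tokens


def kgw_z_score(tokens: list[int]) -> float:
    """z = (green hits - gamma*T) / sqrt(T*gamma*(1-gamma)); the first token is context only."""
    t = len(tokens) - 1
    hits = sum(cur in green_list(prev) for prev, cur in zip(tokens, tokens[1:]))
    return (hits - GAMMA * t) / math.sqrt(t * GAMMA * (1 - GAMMA))


# --- Christ family (EXP sampling): logits untouched, pseudo-random numbers pick the token ---
def exp_uniforms(prev_token: int) -> list[float]:
    rng = keyed_rng(b"exp", prev_token)
    # clamp away from 0 and 1 so the logarithms below stay finite
    return [min(max(rng.random(), 1e-12), 1 - 1e-12) for _ in range(VOCAB_SIZE)]


def exp_generate(prompt_token: int, length: int, model_rng: random.Random) -> list[int]:
    tokens = [prompt_token]
    for _ in range(length):
        probs = softmax(toy_logits(model_rng))
        r = exp_uniforms(tokens[-1])
        # argmax_i r_i ** (1 / p_i), computed as log(r_i) / p_i for numerical stability
        tokens.append(max(range(VOCAB_SIZE), key=lambda i: math.log(r[i]) / probs[i]))
    return tokens


def exp_z_score(tokens: list[int]) -> float:
    """Sum of -ln(1 - r) over chosen tokens; each term is Exp(1) without a watermark."""
    t = len(tokens) - 1
    score = sum(-math.log(1 - exp_uniforms(prev)[cur]) for prev, cur in zip(tokens, tokens[1:]))
    return (score - t) / math.sqrt(t)  # Gamma(t, 1) has mean t and variance t
```

With a couple of hundred tokens, both watermarked sequences typically score well above a threshold such as z = 4, while uniformly random token sequences stay near zero. The design difference is visible in the code: KGW reshapes the sampling distribution, whereas the argmax of r_i^(1/p_i) over uniform r_i is itself a sample from the model's distribution p, so EXP sampling leaves each token's distribution unchanged and instead relies on the text having enough entropy to be detectable.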

MARKLLM: Addressing Challenges Through Unified Frameworks

MARKLLM targets recurring problems with existing LLM watermarking implementations: a lack of standardization, inconsistent interfaces, and uneven code quality. Its unified framework standardizes how algorithms are invoked and makes switching between methods straightforward. It also includes visualization modules for both the KGW and Christ families, which highlight the token preferences and correlations each algorithm relies on. In addition, MARKLLM provides 12 evaluation tools and two automated evaluation pipelines for assessing watermark detectability, robustness, and impact on text quality. Flexible configuration supports thorough evaluation across diverse metrics and attack scenarios, making the toolkit a valuable resource for researchers and practitioners alike.
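An automated detection pipeline can be written against any detector that maps a token sequence to a score, which is what makes switching algorithms cheap. The sketch below is hypothetical and does not reproduce MARKLLM's pipeline classes: `evaluate_detection` optionally applies an attack, such as the illustrative `random_deletion` or `random_substitution` edits, to the watermarked texts only, then reports the true-positive rate, false-positive rate, and F1 at a fixed threshold.

```python
import random
from dataclasses import dataclass
from typing import Callable, Optional

Tokens = list[int]
Detector = Callable[[Tokens], float]               # higher score = more likely watermarked
Attack = Callable[[Tokens, random.Random], Tokens]


def random_deletion(tokens: Tokens, rng: random.Random, ratio: float = 0.3) -> Tokens:
    """Drop each token independently with probability `ratio` (keeps at least one token)."""
    kept = [t for t in tokens if rng.random() >= ratio]
    return kept or tokens[:1]


def random_substitution(tokens: Tokens, rng: random.Random,
                        ratio: float = 0.3, vocab_size: int = 1000) -> Tokens:
    """Replace each token with a uniformly random one with probability `ratio`."""
    return [rng.randrange(vocab_size) if rng.random() < ratio else t for t in tokens]


@dataclass
class DetectionReport:
    tpr: float  # share of watermarked texts flagged
    fpr: float  # share of unwatermarked texts wrongly flagged
    f1: float


def evaluate_detection(detect: Detector, watermarked: list[Tokens],
                       unwatermarked: list[Tokens], threshold: float,
                       attack: Optional[Attack] = None, seed: int = 0) -> DetectionReport:
    rng = random.Random(seed)
    # Robustness setting: only the watermarked texts are attacked before detection.
    wm_flags = [detect(attack(t, rng) if attack else t) > threshold for t in watermarked]
    nw_flags = [detect(t) > threshold for t in unwatermarked]
    tp = sum(wm_flags)
    fn = len(wm_flags) - tp
    fp = sum(nw_flags)
    tpr = tp / len(wm_flags) if wm_flags else 0.0
    fpr = fp / len(nw_flags) if nw_flags else 0.0
    denom = 2 * tp + fp + fn
    return DetectionReport(tpr, fpr, 2 * tp / denom if denom else 0.0)
```

Plugging in a real detector only requires a function with the `Detector` signature; comparing the report with and without an attack gives a simple measure of robustness.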

Unveiling Insights Through Comprehensive Evaluation

Using MARKLLM, the researchers evaluated nine watermarking algorithms on detectability, robustness, and impact on text quality. They used C4 for general text generation, WMT16 for machine translation, and HumanEval for code generation, with metrics including perplexity (PPL), log diversity, BLEU, pass@1, and GPT-4 Judge. The results showed high detection accuracy overall, with each algorithm's relative strengths depending on the metric and the attack method. MARKLLM's design made these evaluations straightforward to run, and the resulting insights can guide future research.
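Two of the quality metrics named above have compact standard definitions. Perplexity is the exponentiated average negative log-likelihood of a text under a scoring model, and pass@1 is the share of generated programs that pass their unit tests, the k = 1 case of the unbiased pass@k estimator introduced alongside HumanEval. The sketch below assumes per-token natural-log probabilities are already available from some scoring model; the function names are illustrative, and log diversity, BLEU, and GPT-4 Judge are not covered.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """PPL = exp(-(1/T) * sum_t log p(x_t | x_<t)), from natural-log token probabilities."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k: 1 - C(n-c, k) / C(n, k) for n samples, c of which pass."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:  # every size-k subset contains at least one passing sample
        return 1.0
    return 1.0 - math.comb(n - c, k) / math.comb(n, k)
```

For k = 1 the estimator reduces to c / n. Computing both metrics for watermarked and unwatermarked generations of the same prompts gives one view of a watermark's cost in text quality.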

Conclusion:

The MARKLLM toolkit marks a significant advance in LLM watermarking, offering a comprehensive solution to the challenges of identifying AI-generated content. Its user-friendly design and robust evaluation capabilities make it a valuable resource for researchers and practitioners, supporting further innovation and greater trust in digital communication. It is also likely to spur market growth in industries that rely on AI-generated content, strengthening security measures and reducing the risks associated with misinformation.