- The UK’s AI Safety Institute (AISI) publishes its first AI testing results, covering five leading models.

- Testing assesses cyber, chemical, and biological capabilities, as well as the effectiveness of safeguards.

- Partial results show expert-level knowledge of chemistry and biology in several LLMs.

- Some models struggle with university-level cyber security challenges.

- Some LLMs are unable to plan and execute sequences of actions for complex tasks.

- All tested models remain highly vulnerable to basic jailbreaks.

- The UK government hints at legislation informed by these findings.

- Questions remain over which model versions were tested, with doubts that GPT-4o or Google’s Project Astra were included.

- Results to be discussed at the Seoul Summit co-hosted by the UK and South Korea.

- AISI expands with a new base in San Francisco, aiming to deepen international collaboration in AI safety research.

Main AI News:

In its first release of AI testing results, the UK Government’s AI Safety Institute (AISI) has published findings on five prominent AI models. The assessments examined the models’ cyber, chemical, and biological capabilities, as well as how effective their safeguards are in practice.

AISI has so far disclosed only partial findings. The tested models, identified by color-coded pseudonyms (red, purple, green, blue, and yellow), come from leading AI labs, but their identities, and whether AISI had access to their latest versions, have not been disclosed.

AISI summarized its approach and findings as follows:

“The Institute assessed AI models against four key risk areas, including how effective the safeguards that developers have installed actually are in practice. Key findings from our tests include:

- Several LLMs demonstrated expert-level knowledge of chemistry and biology, answering expert-level questions at a level comparable to people with PhD-level training in these fields.

- Several LLMs completed simple cyber security challenges aimed at high-school students but struggled with more advanced challenges aimed at university students.

- Two LLMs completed short-horizon agent tasks, such as simple software engineering problems, but were unable to plan and perform sequences of actions for more complex tasks.

- All tested LLMs remain highly vulnerable to basic jailbreaks, and some produce harmful outputs even without deliberate attempts to circumvent their safeguards.”

The assessments focus mainly on how these models could be misused to threaten national security; the results disclosed so far do not address more immediate concerns such as bias or misinformation.

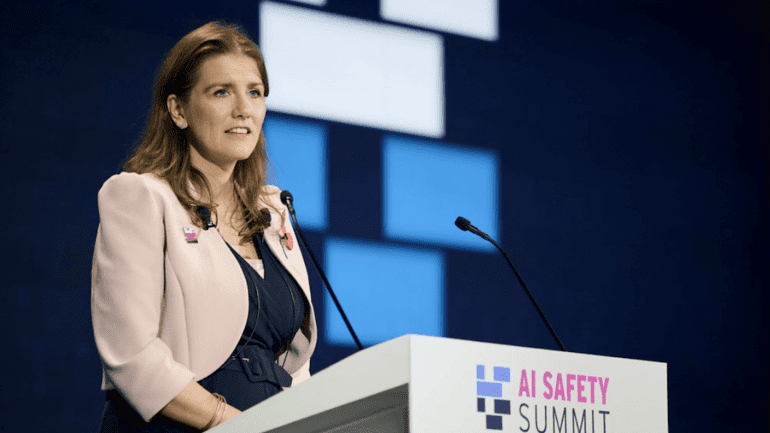

Saqib Bhatti MP, Parliamentary Under-Secretary of State at the Department for Science, Innovation and Technology (DSIT), hinted at forthcoming legislation shaped by these findings. Describing the UK’s stance as “pro-innovation, pro-regulatory,” Bhatti suggested a regulatory approach distinct from that of the EU.

Amid speculation over which model versions were tested, BBC Technology Editor Zoe Kleinman questioned whether GPT-4o or Google’s Project Astra were included in the evaluations.

The findings are due to be discussed at the upcoming Seoul Summit, co-hosted by the UK and the Republic of Korea.

The Institute also announced that it will open a base in San Francisco, close to Silicon Valley. This expansion, together with a new partnership with its Canadian counterpart, aims to strengthen international cooperation on systemic safety research.

Commenting on the expansion, DSIT stressed the importance of closer ties with the US, collaborative partnerships, and the exchange of insights needed to shape global AI safety policy.

Conclusion:

The first published findings from the UK’s AI Safety Institute (AISI) signal closer scrutiny and regulation of the AI market. With vulnerabilities in leading models now exposed and legislation on the horizon, businesses that develop and deploy AI should prepare for stronger regulatory oversight and prioritize better safeguards to reduce risk and ensure the responsible deployment of AI technologies.