- Major tech companies, including Microsoft, Amazon, and OpenAI, have reached an international agreement on AI safety at the Seoul AI Safety Summit.

- They commit to voluntary measures to ensure safe development of advanced AI models, including publishing safety frameworks and implementing a “kill switch” for extreme circumstances.

- These commitments build upon prior agreements and involve input from trusted actors, with a focus on frontier AI models like OpenAI’s GPT series.

- Concerns over AI risks have led to regulatory actions such as the EU’s AI Act, while the UK adopts a more flexible approach to AI regulation.

Main AI News:

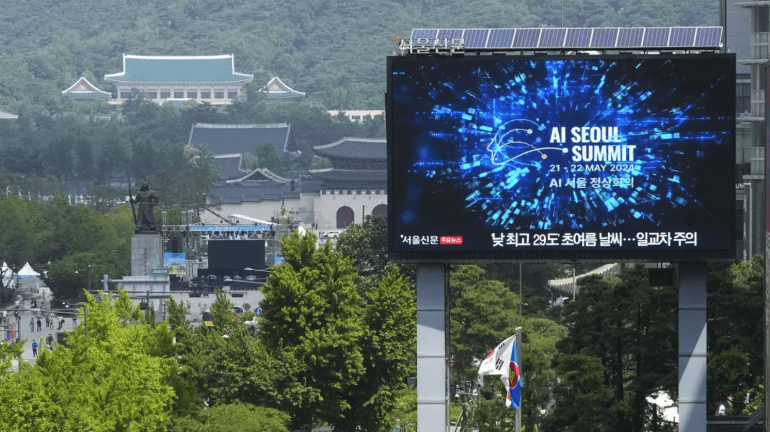

Major technology companies, including Microsoft, Amazon, and OpenAI, have signed an international agreement on artificial intelligence safety at the Seoul AI Safety Summit. Under the agreement, companies from countries including the US, China, Canada, the UK, France, South Korea, and the United Arab Emirates voluntarily pledge to ensure the safe development of their most advanced AI models.

Among the commitments, AI developers have agreed to publish safety frameworks that set out how they will address the challenges posed by frontier AI models. The frameworks are intended to prevent misuse of the technology by malicious actors. They include “red lines” that define the risks considered unacceptable for frontier AI systems, such as automated cyberattacks and the threat of bioweapons.

For such extreme circumstances, companies have proposed a fail-safe mechanism, a “kill switch” that would halt development of an AI model if they cannot guarantee that these risks are mitigated. The measure underscores the industry’s stated commitment to prioritizing safety in AI development.

“This collective commitment represents a watershed moment, with leading AI companies worldwide aligning on crucial safety measures,” remarked Rishi Sunak, Prime Minister of the UK, in a statement on Tuesday. “These commitments are pivotal in ensuring transparency and accountability as we forge ahead with AI innovation.”

The pact builds on commitments made last November by companies developing generative AI software. The companies have also agreed to seek input on safety thresholds from “trusted actors,” including relevant government bodies, before releasing those thresholds ahead of the AI Action Summit planned for early 2025 in France.

However, the commitments made on Tuesday apply only to frontier models. This designation covers the technology underpinning generative AI systems such as OpenAI’s GPT series, which powers the widely used ChatGPT chatbot.

Since ChatGPT’s debut in November 2022, regulators and industry leaders have grown increasingly concerned about the risks of advanced AI systems that can produce text and visual content comparable to, or surpassing, human output. The European Union has moved to regulate AI development, with the EU Council approving the AI Act, while the UK has taken a more hands-off approach, opting to apply existing laws to AI technology.

While the UK government has hinted at the possibility of legislating for frontier models in the future, concrete timelines for the introduction of formal laws have yet to be established.

Conclusion:

The collaboration among leading tech companies to prioritize AI safety through voluntary commitments and fail-safe measures signals a proactive stance toward addressing concerns about AI risks. The effort is intended to improve transparency and accountability, and it underscores the industry’s commitment to responsible AI development. For the market, this points to a potential shift toward more regulated and secure AI technologies, which could foster greater trust and adoption among consumers and businesses alike.