- Large Language Models (LLMs) are aligned with human intent through Reinforcement Learning from Human Feedback (RLHF).

- RLHF optimizes reward functions using human preference data for prompt-response pairs.

- Online alignment gathers preference data iteratively, but its passive exploration can leave responses clustered around local optima.

- Self-Exploring Language Models (SELM) add proactive exploration, making alignment more efficient.

- Experiments show SELM improving performance on instruction-following benchmarks such as MT-Bench and AlpacaEval 2.0.

Main AI News:

In recent years, Large Language Models (LLMs) have advanced markedly, largely because they have become better at following human instructions. Central to this progress is Reinforcement Learning from Human Feedback (RLHF), a key technique for aligning LLMs with human intent. RLHF works by optimizing a reward function, which can either be expressed through the LLM’s own policy or be trained as an independent model.

This reward function is learned from data reflecting human preferences over prompt-response pairs. The diversity of that preference data largely determines how well alignment works: diverse data produces more versatile and robust language models and helps them avoid local optima.
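As a rough illustration of how a reward function can be fit to preference pairs, the sketch below uses the Bradley-Terry loss. This is a common choice in RLHF work, but the article does not name a specific loss, so treat it as an assumption. The function name and the scalar rewards are hypothetical.

```python
import math

def bradley_terry_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Negative log-likelihood that the chosen response beats the rejected one.

    Assumes P(chosen > rejected) = sigmoid(reward_chosen - reward_rejected).
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(margin)) equals softplus(-margin); this form avoids overflow.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))

# The loss shrinks as the reward model ranks the preferred response higher.
loss_agree = bradley_terry_loss(2.0, 0.0)     # reward model agrees with the label
loss_disagree = bradley_terry_loss(0.0, 2.0)  # reward model disagrees with the label
```

Minimizing this loss over many labeled pairs pushes the reward of preferred responses above that of rejected ones.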

Alignment procedures fall broadly into offline and online approaches. Offline alignment relies on manually generating diverse responses to a fixed set of prompts, which often fails to cover the full range of natural language possibilities. Online alignment, in contrast, is iterative: responses are sampled from the LLM, feedback on them is collected, and the resulting preference data is used to train the reward model.
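The data-collection half of one online round can be sketched as follows. This is a toy outline under stated assumptions, not the procedure of any particular system: `collect_preferences`, `toy_policy`, and `toy_annotator` are hypothetical names, the "policy" just picks a canned reply, and the training step that would follow each round is omitted.

```python
import random

def collect_preferences(prompts, sample_response, prefer, dataset):
    """One round of online data collection: sample, get feedback, store."""
    for prompt in prompts:
        # Sample two candidate responses from the current policy.
        first = sample_response(prompt)
        second = sample_response(prompt)
        # Ask the (human or AI) annotator which response is better.
        if prefer(prompt, first, second):
            chosen, rejected = first, second
        else:
            chosen, rejected = second, first
        dataset.append((prompt, chosen, rejected))
    return dataset

# Toy stand-ins: the annotator simply prefers the longer reply.
rng = random.Random(0)
replies = ["ok", "sure, here is a detailed answer", "no"]
toy_policy = lambda prompt: rng.choice(replies)
toy_annotator = lambda prompt, a, b: len(a) >= len(b)

data = collect_preferences(["How do I sort a list?"], toy_policy, toy_annotator, [])
```

In a real pipeline the policy would be retrained on `data` before the next round, so each round samples from an updated model.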

Although random sampling lets online alignment reach out-of-distribution (OOD) regions, the LLM is trained only to maximize expected reward on the data already gathered. This passive exploration often leaves responses clustered around local optima, risking overfitting and premature convergence while potentially high-reward regions go unexplored.

Preference optimization has proven effective at aligning LLMs with human objectives, particularly in combination with RLHF. Collecting feedback online and iteratively, whether from humans or from AI, yields more capable reward models and better-aligned LLMs than offline alignment, which relies on fixed datasets. However, a globally accurate reward model requires systematic exploration of the vast space of natural language, which random sampling from a conventional reward-maximizing LLM cannot achieve on its own.

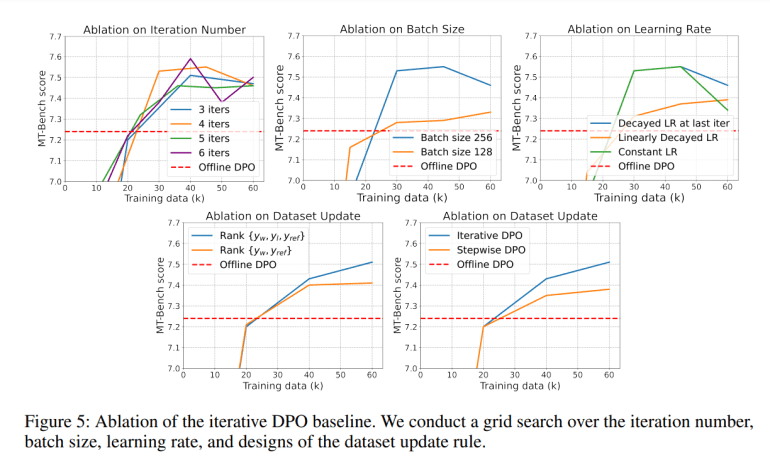

To tackle this challenge, Self-Exploring Language Models (SELM) use a bilevel objective that is optimistically biased toward potentially high-reward responses. This lets the model actively explore OOD regions while reducing the indiscriminate favoring of unseen extrapolations observed in Direct Preference Optimization (DPO). Experiments support the approach: fine-tuning Zephyr-7B-SFT and Llama-3-8B-Instruct with SELM yields notable gains on instruction-following benchmarks such as MT-Bench and AlpacaEval 2.0. SELM also performs well on a range of academic benchmarks across different settings.
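One way to write such an optimism-biased bilevel objective is sketched below. The notation is an assumption for illustration and does not come from this article: $r$ is the reward model, $\mathcal{L}_{\text{pref}}$ is a preference-fitting loss on the data $\mathcal{D}_t$ collected so far, $\pi$ is the policy, $\pi_{\text{ref}}$ is a reference policy, and $\alpha \ge 0$ and $\beta > 0$ are hypothetical weights.

```latex
\begin{aligned}
r_{t+1} &= \arg\max_{r}\; \Big[ -\mathcal{L}_{\text{pref}}(r;\, \mathcal{D}_t)
           \;+\; \alpha \max_{\pi} J(\pi;\, r) \Big], \\
J(\pi;\, r) &= \mathbb{E}_{x \sim \mathcal{D}_t}\Big[
           \mathbb{E}_{y \sim \pi(\cdot \mid x)}\big[ r(x, y) \big]
           \;-\; \beta\, \mathrm{KL}\big( \pi(\cdot \mid x) \,\|\, \pi_{\text{ref}}(\cdot \mid x) \big) \Big].
\end{aligned}
```

With $\alpha = 0$ the outer problem reduces to ordinary reward fitting. A larger $\alpha$ tilts the fitted reward toward functions under which some policy can score highly, which is the source of the proactive exploration.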

Conclusion:

Microsoft’s active preference elicitation method, SELM, marks a significant step in refining language model alignment. By proactively exploring out-of-distribution regions, SELM addresses the limitations of traditional online alignment methods and improves model performance. The result highlights the value of continued innovation in language model development and holds promise for industries that rely on natural language processing.