- AI’s expanding adoption is increasing demand for memory and storage to handle its data processing needs.

- DRAM, NAND flash, HDD, and emerging non-volatile memories benefit from this trend.

- Price increases in solid-state memory and storage result from growing demand and manufacturing cutbacks.

- Companies like Samsung, SK hynix, Micron, and Western Digital (WDC) are actively innovating to meet AI storage demands.

- WDC has introduced a range of products supporting AI workflows, including high-capacity HDDs and a variety of SSDs.

Main AI News:

The spread of artificial intelligence (AI) continues to drive a surge in demand for advanced memory and storage. AI applications are data-intensive: they require storage for training datasets as well as for the AI-generated results used by inference engines.

Suppliers of DRAM, NAND flash, HDDs, and even magnetic tape stand to benefit from this growing demand. Emerging non-volatile memory technologies also stand to gain, particularly in end-point AI inference applications.

This growing demand, combined with the manufacturing cutbacks in memory and storage in the fall of 2023, has pushed prices up, most notably for solid-state memory and storage. Within volatile memory, DRAM built for high-bandwidth memory (HBM) in AI applications has become a focal point.

At the 2024 Computex event in Taiwan, Dinesh Bahal, General Manager of Micron Consumer and Components Group, highlighted the efforts of Samsung, SK hynix, and Micron to step up development of HBM products to meet the demand fueled by AI.

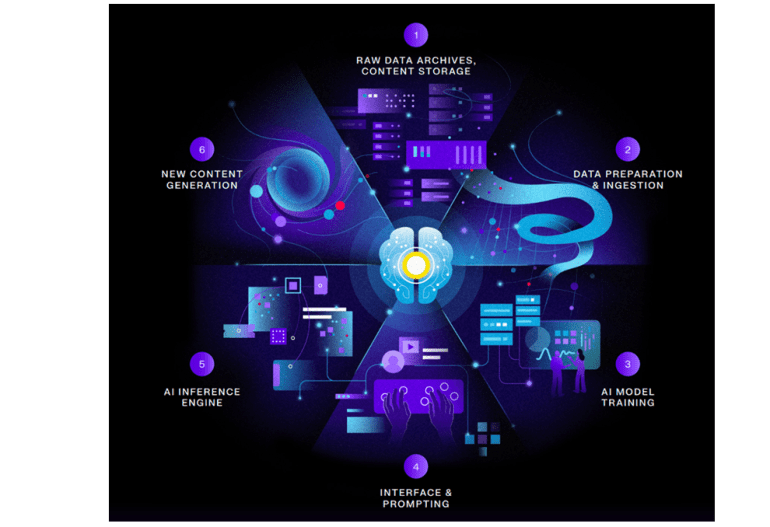

Western Digital (WDC) recently announced a set of products aimed at AI workloads, along with a diagram of the data workflow for AI applications. WDC calls this the “six-stage AI Data Cycle framework.” It is intended to define storage infrastructure that maximizes AI investments while improving efficiency and lowering the total cost of ownership of AI workflows.

Processing and storing AI data effectively and cost-efficiently requires a range of storage and memory products: HBM for AI training, plus various NAND-based solid-state storage products that move data to and from the HBM. These include both high-performance NAND SSDs and higher-capacity, lower-performance SSDs, which together make up primary storage.

Alongside SSDs, hard disk drives (HDDs) provide more cost-effective secondary storage. Archival media hold data that is no longer needed for training, as well as some results of the training process. Archiving is particularly relevant for datasets, such as scientific or engineering data, that may be reused in later training runs or analyzed further to uncover new insights and correlations.

WDC’s new digital storage products address several parts of the storage supply chain for AI training and inference workflows. Among them is a 32TB ePMR enterprise HDD, now sampling with select customers. This drive, the Ultrastar DC HC690 UltraSMR HDD, is WDC’s highest-capacity HDD. Seagate and Toshiba have also announced 32TB HDDs based on HAMR, but those drives do not use shingling.

WDC also sells NAND flash and SSDs, and plans to establish a separate NAND and SSD entity in the near future. Its SSD portfolio covers a range of AI workflows, from high-performance PCIe Gen5 SSDs for both training and inference to high-capacity SSDs of up to 64TB intended to speed up AI data processing in fast data lakes.

Conclusion:

The surge in AI-driven memory and storage demand is a significant market opportunity for suppliers. Companies that invest in R&D to meet evolving AI storage needs stand to benefit from this growing market and to drive innovation in the sector. Meeting the demand requires a mix of storage products, each suited to a different part of the AI workflow, to keep operations efficient.