- Georgia Tech introduces LARS-VSA, a Vector Symbolic Architecture for abstract rule learning

- LARS-VSA targets abstract reasoning, a gap in machine learning distinct from the semantic and procedural knowledge that current models already acquire well

- It addresses the relational bottleneck problem by segregating relational information from object-level features

- LARS-VSA’s context-based self-attention mechanism operates in a bipolar high-dimensional space, eliminating the need for prior knowledge of abstract rules

- By reducing attention-score computation to binary operations, LARS-VSA offers a lightweight alternative to conventional attention mechanisms

- Outperforms standard transformer architectures and state-of-the-art methods in discriminative relational tasks

- Demonstrates high accuracy and cost efficiency across various datasets and problem-solving scenarios

Main AI News:

Analogical reasoning is a pillar of human abstraction and creative thinking. Understanding the relationships between objects drives problem-solving, a skill set that still sets humans apart from machines. Contemporary connectionist models such as deep neural networks (DNNs) excel at acquiring semantic and procedural knowledge but often struggle with abstract reasoning, so closing this cognitive gap remains a challenge.

Enter LARS-VSA (Learning with Abstract RuleS), developed at the Georgia Institute of Technology. This Vector Symbolic Architecture (VSA) is designed for abstract rule learning: by isolating abstract relational rules from object representations, LARS-VSA aims to overcome key limitations of traditional connectionist approaches.

The crux of LARS-VSA is its approach to the relational bottleneck problem, a longstanding challenge in machine learning. The bottleneck stems from interference between object-level and abstract-level features, which often leads to poor generalization and higher processing demands. LARS-VSA addresses it by using high-dimensional vector representations to perform explicit bindings, segregating relational information from object-level features.
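To make "explicit binding" more concrete, here is a minimal sketch of generic bipolar VSA operations in the style of multiply-add-permute (MAP) architectures (only multiply and add are shown). It is not LARS-VSA's own code. Random ±1 hypervectors are nearly orthogonal; binding by elementwise multiplication ties a role to a filler and is its own inverse, while bundling superposes several bindings into one vector. The dimensionality, the tie-breaking rule, and the role and object names (`role_first`, `obj_a`, and so on) are illustrative assumptions.

```python
import random

DIM = 10_000  # hypervector dimensionality (illustrative assumption)


def random_hv(rng: random.Random, dim: int = DIM) -> list[int]:
    """Draw a random bipolar hypervector with entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(dim)]


def bind(a: list[int], b: list[int]) -> list[int]:
    """Bind two vectors by elementwise multiplication; bind(bind(a, b), b) == a."""
    return [x * y for x, y in zip(a, b)]


def bundle(*vs: list[int]) -> list[int]:
    """Superpose vectors by elementwise sign of the sum (ties broken toward +1)."""
    return [1 if sum(col) >= 0 else -1 for col in zip(*vs)]


def similarity(a: list[int], b: list[int]) -> float:
    """Normalized dot product: about 0 for unrelated vectors, 1.0 for identical ones."""
    return sum(x * y for x, y in zip(a, b)) / len(a)


# Hypothetical roles (relational information) and objects (object-level features).
rng = random.Random(0)
role_first, role_second = random_hv(rng), random_hv(rng)
obj_a, obj_b = random_hv(rng), random_hv(rng)

# Encode "obj_a is first, obj_b is second" as a single vector.
pair = bundle(bind(role_first, obj_a), bind(role_second, obj_b))

# Unbinding with a role yields a noisy copy of the object bound to that role.
recovered = bind(role_first, pair)
print(round(similarity(recovered, obj_a), 2))  # roughly 0.5: clearly related
print(round(similarity(recovered, obj_b), 2))  # close to 0: unrelated
```

Because the role vectors and the object vectors come from separate, nearly orthogonal codes, the relational structure ("which object is first?") can be queried without object features bleeding into the roles, which is the separation described above in its simplest form.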

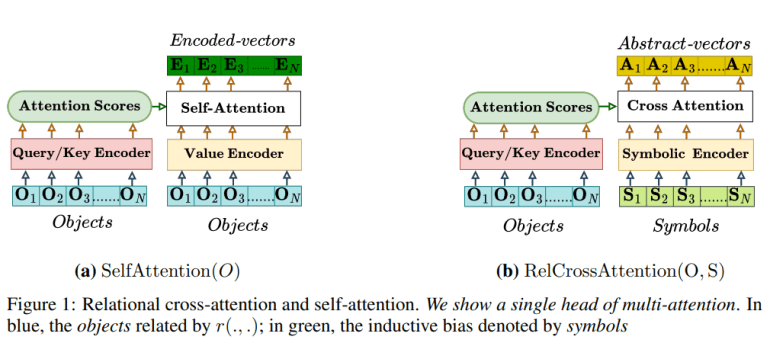

One of the key innovations of LARS-VSA is its context-based self-attention mechanism, which operates in a bipolar high-dimensional space. The mechanism learns, on its own, vectors that encode the relationships between symbols, so no prior knowledge of the abstract rules is required. In addition, by reducing the matrix multiplications used to compute attention scores to binary operations, LARS-VSA significantly lowers computational overhead, offering a lightweight alternative to conventional attention mechanisms.
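Reducing attention-score computation to binary operations rests on a standard identity for bipolar vectors: if each ±1 entry is stored as a bit, the dot product of two D-dimensional vectors equals D - 2 * (Hamming distance), which an XOR plus a population count computes. The toy sketch below illustrates that identity inside a simple self-attention loop. It is a generic illustration, not LARS-VSA's published mechanism; the function names (`pack`, `binary_score`, `binary_attention`), the 1/sqrt(D) scaling, the softmax weighting, and the sign-based output are all assumptions.

```python
import math
import random

DIM = 1024  # hypervector dimensionality (illustrative assumption)


def pack(v: list[int]) -> int:
    """Pack a bipolar vector into an int bitmask: bit i is set where v[i] == -1."""
    bits = 0
    for i, x in enumerate(v):
        if x == -1:
            bits |= 1 << i
    return bits


def binary_score(a_bits: int, b_bits: int, dim: int = DIM) -> int:
    """Bipolar dot product from bitmasks: dim - 2 * popcount(a XOR b)."""
    return dim - 2 * bin(a_bits ^ b_bits).count("1")


def binary_attention(
    queries: list[list[int]], keys: list[list[int]], values: list[list[int]]
) -> list[list[int]]:
    """Toy self-attention over bipolar vectors.

    Scores use XOR + popcount instead of multiply-accumulate; the softmax
    weighting and the final sign() that returns to bipolar space are
    illustrative choices, not a published specification.
    """
    key_bits = [pack(k) for k in keys]
    outputs = []
    for q in queries:
        q_bits = pack(q)
        scores = [binary_score(q_bits, kb) / math.sqrt(DIM) for kb in key_bits]
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]  # numerically stable softmax
        total = sum(exps)
        weights = [e / total for e in exps]
        mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(DIM)]
        outputs.append([1 if x >= 0 else -1 for x in mixed])  # back to bipolar
    return outputs


# Usage: hypothetical symbol vectors attending over one another.
rng = random.Random(0)
symbols = [[rng.choice((-1, 1)) for _ in range(DIM)] for _ in range(4)]
context = binary_attention(symbols, symbols, symbols)
```

The XOR/popcount identity is exact, so the scores match a floating-point dot product of the same ±1 vectors; in this sketch only the softmax and the weighted mix still use floating point, and how LARS-VSA handles those steps is not covered here.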

In extensive evaluations, LARS-VSA outperformed a standard transformer architecture, the Abstractor, and other state-of-the-art methods on discriminative relational tasks. Across synthetic sequence-to-sequence datasets and complex mathematical problem-solving tasks, it achieved high accuracy at low computational cost, underscoring its potential for real-world applications.

Conclusion:

The emergence of LARS-VSA marks a significant advance in machine learning, particularly in abstract rule learning. Its ability to separate abstract relational information from object-level features, while delivering high accuracy at low computational cost, signals a promising trajectory for the market. As businesses seek more efficient and robust machine learning solutions, LARS-VSA’s capabilities position it as a frontrunner in the evolving landscape of artificial intelligence technologies.