- Princeton University study highlights predominant focus on AI accuracy over deployment costs.

- Traditional evaluation methods prioritize precision, neglecting practical operational costs.

- Study identifies gaps: real-world applicability and developer misalignment on cost priorities.

- Proposed solution: new evaluation framework balancing accuracy and cost-effectiveness.

- Techniques include hyperparameter optimization, model trimming, and hardware acceleration.

- Joint optimization with the DSPy framework delivered cost savings on the HotPotQA benchmark.

Main AI News:

Recent advancements in AI have predominantly focused on achieving high accuracy, often while overlooking practical deployment costs, according to a new study from Princeton University. The study underscores the need for a paradigm shift in evaluating AI agents, moving beyond accuracy metrics to include cost-effectiveness in agent development.

Traditionally, the evaluation of AI agents has revolved around enhancing precision through increasingly complex models. However, the computational demands of these models can render them impractical for real-world applications, despite their benchmark performance.

The research identifies two critical gaps in current evaluation methods:

- Real-World Applicability: Highly accurate AI agents developed without considering operational costs may not be viable in resource-constrained environments.

- Developer Misalignment: Discrepancies between model developers prioritizing accuracy and downstream developers concerned with operational costs can lead to high-cost, high-accuracy agents unsuitable for practical use.

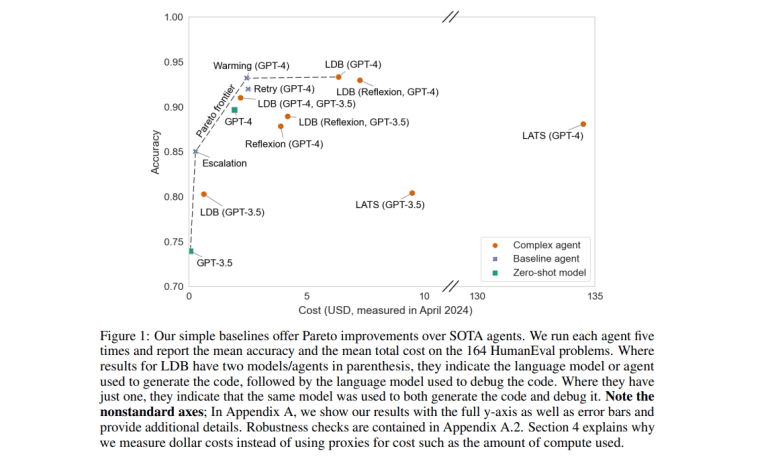

To address these challenges, the study proposes a new evaluation framework that balances accuracy and cost as dual optimization objectives. By mapping the cost-accuracy trade-offs using a Pareto frontier approach, researchers suggest a path to designing AI agents that are both cost-effective and accurate across various deployment scenarios, including latency-sensitive applications.
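As a rough illustration of the Pareto frontier idea, the sketch below keeps only the agents that no other agent beats on both cost and accuracy. The agent names, costs, and accuracies are invented for the example and are not figures from the study.

```python
from typing import NamedTuple


class AgentResult(NamedTuple):
    name: str        # hypothetical agent label
    cost: float      # hypothetical dollars per run
    accuracy: float  # fraction of tasks solved


def pareto_frontier(results):
    """Return the agents that are not dominated on (cost, accuracy).

    An agent is dominated when another agent is at least as cheap and at
    least as accurate, and strictly better on one of the two. Exact
    duplicates are collapsed to a single entry.
    """
    frontier = []
    best_accuracy = float("-inf")
    # Walk from cheapest to most expensive; on equal cost, try the more
    # accurate agent first so the weaker one is filtered out.
    for r in sorted(results, key=lambda r: (r.cost, -r.accuracy)):
        if r.accuracy > best_accuracy:
            frontier.append(r)
            best_accuracy = r.accuracy
    return frontier


# Made-up measurements for four hypothetical agents.
agents = [
    AgentResult("simple", 0.10, 0.60),
    AgentResult("retry", 0.50, 0.72),
    AgentResult("complex", 2.00, 0.70),
    AgentResult("large", 3.00, 0.80),
]

for agent in pareto_frontier(agents):
    print(agent.name, agent.cost, agent.accuracy)
```

In this made-up data the "complex" agent drops out because "retry" is both cheaper and more accurate. The three agents that remain are the real trade-off choices for a given budget.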

The study advocates for optimizing AI agent design parameters, such as hyperparameters and computational resources, to minimize both the fixed costs of agent operation (one-time spending on design and optimization) and the variable costs (incurred each time the agent runs). Techniques like model trimming and hardware acceleration are highlighted as effective strategies to reduce operational costs without compromising accuracy.
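The split between fixed and variable costs can be made concrete with a small break-even calculation. This is a minimal sketch that assumes a linear cost model (one-time fixed cost plus a constant cost per query). All figures are hypothetical and given in whole cents.

```python
def total_cost(fixed_cents, variable_cents_per_query, num_queries):
    """Total spend under an assumed linear model: fixed + variable * queries."""
    return fixed_cents + variable_cents_per_query * num_queries


def break_even_queries(fixed_a, var_a, fixed_b, var_b):
    """Query count at which agents A and B cost the same.

    Returns None when the per-query costs are equal or the lines only
    cross at a non-positive query count.
    """
    if var_a == var_b:
        return None
    n = (fixed_b - fixed_a) / (var_a - var_b)
    return n if n > 0 else None


# Hypothetical agents: A needs no up-front optimization but costs 2 cents
# per query; B costs 5000 cents to optimize once, then 1 cent per query.
n = break_even_queries(fixed_a=0, var_a=2, fixed_b=5000, var_b=1)
print("break-even queries:", n)
print("cost at 1,000 queries:", total_cost(0, 2, 1000), "vs", total_cost(5000, 1, 1000))
print("cost at 10,000 queries:", total_cost(0, 2, 10000), "vs", total_cost(5000, 1, 10000))
```

Under these assumed numbers, the up-front optimization only pays off once the agent serves more than 5,000 queries. Expected usage volume therefore belongs in the evaluation alongside accuracy.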

Moreover, the researchers apply DSPy, an existing framework for building language-model pipelines, to the HotPotQA multi-hop question-answering benchmark to demonstrate that joint optimization can improve AI agent performance. By optimizing the few-shot examples included in the prompt, the study reports significant cost savings while maintaining competitive accuracy levels.
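One simple way to picture joint optimization over few-shot examples is as a constrained choice: among candidate prompt configurations, pick the cheapest one whose accuracy stays close to the best observed. The sketch below is plain Python, not DSPy's API and not the study's procedure. The candidate labels, token counts, accuracies, and the `tolerance` parameter are all hypothetical.

```python
def cheapest_within_tolerance(candidates, tolerance=0.02):
    """Pick the lowest-token candidate whose accuracy is near the best.

    candidates: list of (label, prompt_tokens, accuracy) tuples.
    tolerance: how much accuracy we are willing to give up (assumed knob).
    """
    best_accuracy = max(acc for _, _, acc in candidates)
    eligible = [c for c in candidates if c[2] >= best_accuracy - tolerance]
    # Prompt tokens stand in for variable cost in this sketch.
    return min(eligible, key=lambda c: c[1])


# Made-up measurements for three hypothetical few-shot configurations.
candidates = [
    ("8 long examples", 3200, 0.62),
    ("4 short examples", 1100, 0.61),
    ("no examples", 300, 0.50),
]

label, tokens, accuracy = cheapest_within_tolerance(candidates)
print(label, tokens, accuracy)
```

With these invented numbers the four-example prompt is selected: it gives up one point of accuracy while using roughly a third of the tokens.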

In conclusion, the study calls for a holistic reassessment of current AI benchmarking practices to ensure that benchmarks reflect real-world deployment challenges. By incorporating cost considerations into AI agent evaluation frameworks, researchers can foster the development of more practical and economically viable AI solutions.

The team also emphasizes the importance of safety evaluations as AI agents become more sophisticated, stressing that the frameworks used to regulate AI deployment must be applied responsibly. While the study focuses primarily on cost optimization, it underscores the broader implications for AI safety and deployment ethics.

By empowering stakeholders to evaluate cost-effectiveness alongside capability risks, the research aims to preemptively identify and mitigate potential safety issues. The inclusion of cost assessments in AI safety benchmarks is proposed as a crucial step towards developing responsible and effective AI solutions.

Ultimately, the study advocates for a paradigm shift in how AI agents are evaluated and deployed. By prioritizing both accuracy and cost-effectiveness, researchers can design AI solutions that are not only technically robust but also economically feasible for real-world implementation.

Conclusion:

The Princeton University study underscores a critical need for the AI market to weigh cost-effectiveness alongside traditional accuracy benchmarks. By advocating a dual optimization approach that considers both accuracy and deployment costs, the study suggests a pathway for developing AI agents that are not only technically proficient but also economically viable. As AI technologies evolve, integrating cost evaluations into standard benchmarks becomes essential to ensuring responsible and practical deployment.