- Introduction to Natural Language Processing (NLP) and its reliance on large language models (LLMs) for tasks like translation and sentiment analysis.

- Challenges in evaluating LLMs due to resource-intensive nature, requiring significant time and computational investment.

- Traditional methods involve exhaustive testing across entire datasets, leading to high costs and time consumption.

- UCB-E and UCB-E-LRF algorithms introduced by Cornell and UCSD researchers leverage multi-armed bandit frameworks and low-rank factorization.

- These algorithms strategically allocate evaluation resources by prioritizing method-example pairs based on past performance.

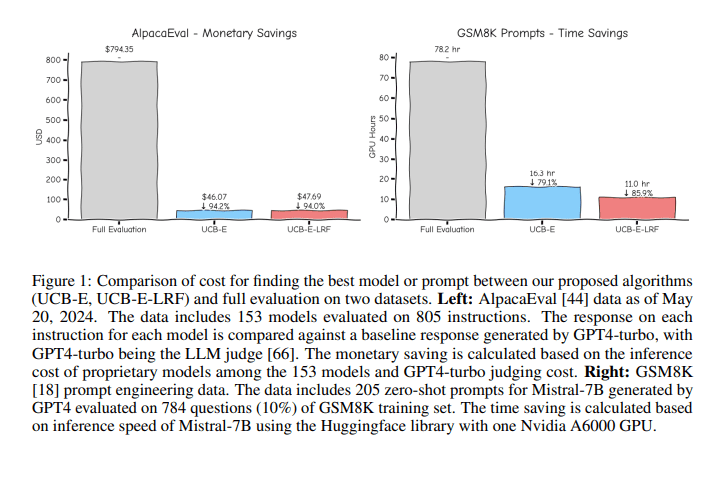

- Experimental results show 85-95% cost reduction compared to traditional methods, while maintaining high evaluation precision.

- UCB-E-LRF excels in scenarios with large method sets or minimal performance differences.

Main AI News:

Researchers from Cornell University and the University of California, San Diego, have recently introduced two novel algorithms, UCB-E and UCB-E-LRF, designed to revolutionize the evaluation of large language models (LLMs). This advancement addresses the substantial resource requirements associated with traditional evaluation methods, where selecting the optimal model or configuration involves exhaustive testing across entire datasets.

The field of Natural Language Processing (NLP) focuses on the interaction between computers and humans through natural language. It encompasses tasks such as translation, sentiment analysis, and question answering, leveraging large language models (LLMs) to achieve high accuracy and performance. LLMs find application in various domains, from automated customer support to content generation, demonstrating impressive proficiency across diverse tasks.

Evaluating LLMs is inherently resource-intensive, demanding significant computational power, time, and financial investment. The challenge lies in efficiently identifying top-performing models or methods from a plethora of options without exhausting resources on full-scale evaluations. Practitioners often face the daunting task of selecting the optimal model, prompt, or hyperparameters from hundreds of available choices tailored to their specific needs.

Traditional evaluation methods typically involve exhaustive testing of multiple candidates on entire test sets, which can be both costly and time-consuming. Techniques such as prompt engineering and hyperparameter tuning necessitate extensive testing across multiple configurations to identify the best-performing setup, leading to high resource consumption. For example, projects like AlpacaEval benchmark over 200 models against a diverse set of 805 questions, requiring significant investments in time and computing resources. Similarly, evaluating 153 models in the Chatbot Arena demands substantial computational power, highlighting the inefficiency of current evaluation methodologies.

In response to these challenges, researchers from Cornell University and the University of California, San Diego, have introduced the UCB-E and UCB-E-LRF algorithms. These methods leverage multi-armed bandit frameworks combined with low-rank factorization to dynamically allocate evaluation resources. By strategically prioritizing method-example pairs for evaluation based on past performance, these algorithms minimize the number of evaluations needed and reduce associated costs.

UCB-E extends classical multi-armed bandit principles by estimating upper confidence bounds for method performance at each step. This approach ensures efficient resource allocation by directing attention towards methods with the highest estimated potential for performance improvement. UCB-E-LRF takes this a step further by incorporating low-rank factorization to predict unobserved scores, optimizing evaluation efficiency even in scenarios with large method sets or minimal performance gaps.

Experimental results illustrate significant cost reductions of 85-95% compared to traditional exhaustive evaluations. For example, evaluating 205 zero-shot prompts on 784 GSM8K questions using Mistral-7B required only 78.2 Nvidia A6000 GPU hours, demonstrating substantial resource savings without compromising evaluation precision. UCB-E and UCB-E-LRF excel particularly in scenarios where evaluating large sets of methods or identifying subtle performance differences are critical, showcasing their adaptability and efficacy in modern LLM evaluation practices.

Conclusion:

The introduction of UCB-E and UCB-E-LRF algorithms signifies a significant advancement in the efficiency of evaluating large language models. By drastically reducing costs and computational resources required for evaluation, these methods not only streamline the research and development process but also democratize access to advanced NLP technologies. This innovation is poised to accelerate the pace of innovation in the market, enabling faster iterations and improvements in LLM performance across various applications.