- Google introduces GenC, focusing on enhancing privacy in generative AI.

- GenC combines Confidential Computing with Gemma open models for secure data processing.

- Applications include personal assistants and confidential business tasks.

- On-device processing with Gemma 2B for speed and cost-efficiency.

- Cloud-hosted Gemma 7B in Trusted Execution Environments for complex tasks.

- TEEs protect data with encrypted memory and encrypted communication channels.

- Developers benefit from a portable Intermediate Representation (IR) for cross-platform deployment.

- Future plans include support for Intel TDX and Nvidia H100 GPUs in confidential mode.

Main AI News:

Google has introduced GenC, an initiative aimed at strengthening privacy and confidentiality in generative AI (gen AI) data processing. As gen AI spreads across applications, Google wants developers to be able to use it while safeguarding sensitive information.

At the core of Google’s approach is the GenC open-source project, which combines Confidential Computing with the Gemma open models. The combination lets developers build and experiment with gen AI applications that require strict privacy protections.

“Generative AI presents immense opportunities, but ensuring data privacy remains paramount, especially in contexts such as personal assistants and confidential business operations,” emphasized a Google spokesperson. By leveraging GenC, developers can now build applications that process sensitive data while upholding privacy, transparency, and verifiability standards.

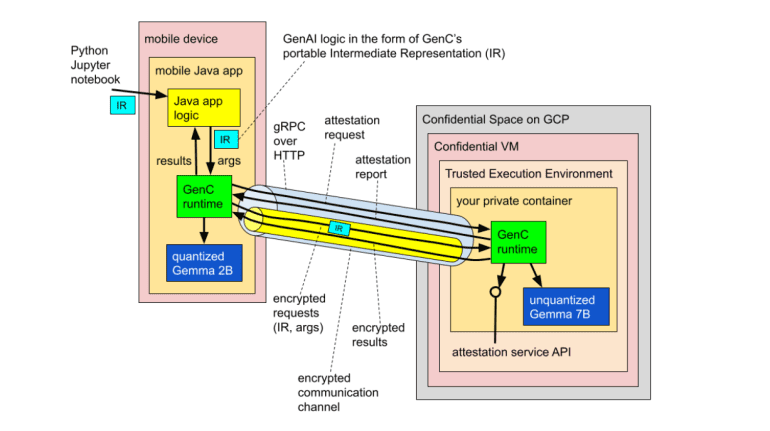

The deployment architecture is a hybrid that combines on-device and cloud-based processing to balance performance and security. For instance, a mobile app can run lightweight tasks locally using a quantized Gemma 2B model. Local execution gives fast responses at low cost and reduces dependence on the network, which suits scenarios where connectivity is unreliable.

For more complex tasks that need more resources, the architecture relies on a cloud-hosted, unquantized Gemma 7B model running inside Trusted Execution Environments (TEEs). These TEEs act as secure extensions of end-user devices, with encrypted memory and encrypted communication channels. Before any interaction, a device verifies the TEE’s integrity through attestation, so sensitive data is sent only to an environment the device has checked.
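The hybrid flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not GenC’s actual API: the function names, the word-count routing rule, and the hash-based "measurement" are all hypothetical stand-ins. Real TEE attestation relies on hardware-signed evidence rather than a bare hash comparison.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical: the measurement the device expects from a trusted TEE workload.
EXPECTED_MEASUREMENT = hashlib.sha256(b"trusted-gemma-7b-runtime").hexdigest()


@dataclass
class AttestationReport:
    """Stand-in for TEE evidence; real reports are signed by the hardware."""
    measurement: str


def verify_attestation(report: AttestationReport) -> bool:
    # Constant-time comparison of the reported and expected measurements.
    return hmac.compare_digest(report.measurement, EXPECTED_MEASUREMENT)


def run_on_device(prompt: str) -> str:
    # Placeholder for a call to a local, quantized Gemma 2B model.
    return f"[on-device gemma-2b] {prompt}"


def run_in_tee(prompt: str) -> str:
    # Placeholder for a call to a cloud-hosted Gemma 7B model inside a TEE.
    return f"[tee gemma-7b] {prompt}"


def route(prompt: str, report: AttestationReport, max_local_words: int = 20) -> str:
    """Send short prompts to the device model, longer ones to an attested TEE."""
    if len(prompt.split()) <= max_local_words:
        return run_on_device(prompt)
    if not verify_attestation(report):
        # Refuse to send sensitive data to an environment that failed the check.
        raise PermissionError("TEE attestation failed; refusing to send data")
    return run_in_tee(prompt)
```

The key design point is the ordering: the attestation check happens before any prompt leaves the device, and a failed check raises an error instead of silently falling back to an unverified backend.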

“The integration of GenC with Gemma models represents a significant leap forward in empowering developers to create gen AI applications that adhere to rigorous privacy and confidentiality standards,” affirmed the spokesperson. GenC also provides a portable, language-independent Intermediate Representation (IR). This lets developers carry a gen AI solution across platforms, from an initial prototype in a Jupyter notebook to deployment in production-grade Java or C++ environments.
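To illustrate why a language-independent IR helps portability, here is a toy sketch in which a pipeline is described as plain data, serialized, and then interpreted by a separate runtime. It is not GenC’s IR format or API; the operator names, the JSON encoding, and the `/device/gemma-2b` model URI are assumptions made for this example only.

```python
import json


# Builders that describe a computation as plain data (a toy IR).
def prompt_template(template: str) -> dict:
    return {"op": "prompt_template", "template": template}


def model_call(model_uri: str) -> dict:
    return {"op": "model_call", "model_uri": model_uri}


def chain(*steps: dict) -> dict:
    return {"op": "chain", "steps": list(steps)}


def execute(node: dict, value: str, models: dict) -> str:
    """Tiny interpreter; a Java or C++ runtime could implement the same ops."""
    op = node["op"]
    if op == "prompt_template":
        return node["template"].replace("{input}", value)
    if op == "model_call":
        return models[node["model_uri"]](value)
    if op == "chain":
        for step in node["steps"]:
            value = execute(step, value, models)
        return value
    raise ValueError(f"unknown op: {op}")


# Authoring side (for example, a notebook): build and serialize the IR.
ir = chain(prompt_template("Summarize: {input}"), model_call("/device/gemma-2b"))
wire = json.dumps(ir)

# Runtime side: load the IR and bind model URIs to concrete backends.
restored = json.loads(wire)
models = {"/device/gemma-2b": lambda text: f"<echo {text}>"}  # fake model
result = execute(restored, "meeting notes", models)
```

Because the pipeline is data rather than code, the authoring environment and the production runtime only need to agree on the serialized format, not on a programming language.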

Looking ahead, Google anticipates extending GenC to additional confidential computing hardware, such as Intel TDX and Nvidia H100 GPUs in confidential mode. This support is expected to improve performance and allow a broader range of models to be used.

For developers and enterprises building gen AI applications that require stronger privacy assurances, GenC and the Gemma models offer a foundation to start from. Together they reflect Google’s aim of making secure gen AI capabilities more widely accessible.

Conclusion:

Google’s GenC initiative is a step toward balancing generative AI’s capabilities with strict privacy requirements. By combining Confidential Computing with Gemma models that run both on-device and in the cloud, it strengthens data security and lets developers build across different computing environments. The initiative signals Google’s intent to lead in responsible AI and positions GenC as a reference point for privacy-centric AI solutions.