- HuggingFace has introduced Docmatix, a new dataset for Document Visual Question Answering (DocVQA).

- The dataset includes 2.4 million images and 9.5 million question-answer pairs from 1.3 million PDF documents.

- Docmatix is 240 times larger than previous datasets.

- It was built using the PDFA collection of over two million PDFs and a Phi-3-small model for Q/A pair generation.

- The dataset underwent rigorous quality checks that removed the 15% of generated Q/A pairs identified as inaccurate.

- Images are processed at 150 dpi and are available on the Hugging Face Hub.

- Generation was tuned to produce approximately four Q/A pairs per page, with a focus on natural, human-like answers.

- In performance tests, a Florence-2 model trained on a subset of Docmatix showed a relative improvement of about 20%.

Main AI News:

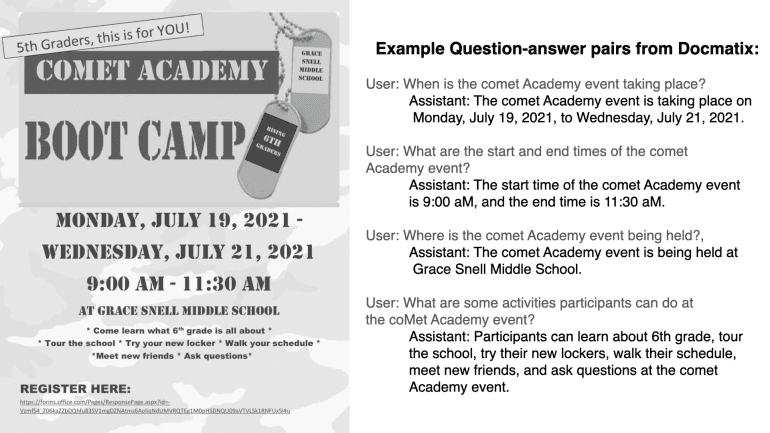

Document Visual Question Answering (DocVQA) is a sophisticated branch of visual question answering that focuses on answering questions about the content of documents. These documents can take many forms, including scanned photographs, PDFs, and digital files that combine text with visual elements. Building robust DocVQA datasets has been notably difficult because collecting and annotating such data is complex: annotators must understand the context, structure, and layout of many different document formats, which demands significant manual effort. Many documents also contain sensitive information, and the resulting privacy concerns make them hard to share or use. Finally, the lack of uniformity across document structures, domain-specific differences, and the challenges of multi-modal fusion and optical character recognition (OCR) accuracy all make comprehensive datasets harder to build.

Acknowledging the critical need for expansive DocVQA datasets, HuggingFace has introduced Docmatix, a groundbreaking dataset designed to overcome these obstacles. With an impressive scale of 2.4 million images and 9.5 million question-answer pairs derived from 1.3 million PDF documents, Docmatix represents a 240-fold increase in size compared to previous datasets. This substantial expansion underscores the dataset’s potential to significantly enhance model performance and document accessibility across various applications.
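The headline figures can be cross-checked with simple arithmetic. This is a minimal sketch that assumes each image corresponds to one rendered PDF page and uses the rounded totals quoted above, so the resulting averages are approximate.

```python
# Rounded headline totals reported for Docmatix.
TOTAL_PDFS = 1_300_000
TOTAL_IMAGES = 2_400_000
TOTAL_QA_PAIRS = 9_500_000

# Average number of Q/A pairs attached to each image (assumed: one image per page).
pairs_per_image = TOTAL_QA_PAIRS / TOTAL_IMAGES

# Average number of images contributed by each source PDF.
images_per_pdf = TOTAL_IMAGES / TOTAL_PDFS

print(f"Q/A pairs per image: {pairs_per_image:.2f}")
print(f"Images per PDF:      {images_per_pdf:.2f}")
```

This prints roughly 3.96 pairs per image and 1.85 images per PDF. Just under four pairs per image is consistent with the target of about four Q/A pairs per page that the article mentions.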

Docmatix originates from the PDFA collection, which contains over two million PDFs. Researchers used a Phi-3-small model to generate question-answer pairs from the PDFA transcriptions, then applied a rigorous filtering process that removed the 15% of pairs identified as inaccurate. This filtering used regular expressions to detect answers containing code and discarded answers containing the keyword “unanswerable”. Every PDF in the dataset is represented by its processed images, saved at 150 dpi and available on the Hugging Face Hub.
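The filtering step described above can be sketched in Python. This is a minimal illustration, not the Docmatix pipeline: the article does not publish its regular expressions, so the code-detection patterns below are hypothetical guesses, and the function names `keep_pair` and `filter_pairs` are our own.

```python
import re

# Hypothetical code-detection patterns; the actual Docmatix regexes are not published.
CODE_PATTERNS = [
    re.compile(r"`{3}"),                                          # Markdown code fences
    re.compile(r"^\s*(?:def|class)\s+\w+\s*[(:]", re.MULTILINE),  # Python-style definitions
    re.compile(r"^\s*import\s+\w+", re.MULTILINE),                # Python-style imports
]

# Answers containing this keyword are discarded, per the article's description.
UNANSWERABLE = re.compile(r"\bunanswerable\b", re.IGNORECASE)


def keep_pair(question: str, answer: str) -> bool:
    """Return True if a generated Q/A pair passes both filters."""
    if UNANSWERABLE.search(answer):
        return False
    return not any(pattern.search(answer) for pattern in CODE_PATTERNS)


def filter_pairs(pairs: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Keep only the (question, answer) pairs that pass keep_pair."""
    return [(q, a) for q, a in pairs if keep_pair(q, a)]


# Usage with made-up example pairs (not taken from Docmatix).
sample_pairs = [
    ("What is the invoice total?", "$1,250.00"),
    ("Who signed the letter?", "Unanswerable from this page."),
    ("How is the total computed?", "def total(items):\n    return sum(items)"),
    ("What year was the report published?", "2019"),
]
kept = filter_pairs(sample_pairs)
removed_fraction = 1 - len(kept) / len(sample_pairs)
print(f"kept {len(kept)} of {len(sample_pairs)} pairs; removed {removed_fraction:.0%}")
```

Keyword and regex heuristics like these are cheap enough to run over millions of pairs, but they only catch failure modes that can be expressed as text patterns.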

To ensure the dataset’s effectiveness, HuggingFace researchers conducted extensive fine-tuning and ablation experiments. They aimed for approximately four question-answer pairs per page, a balance that avoids both excessive overlap and insufficient detail. The team also worked to make answers resemble human speech, favoring a natural and concise style, and diversified the questions to reduce repetition and cover a broad range of queries. For performance evaluation, the team used the Florence-2 model, which achieved a relative improvement of about 20% when trained on a subset of Docmatix. This result suggests the dataset could help narrow the gap between open-source and proprietary Vision-Language Models (VLMs).
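The article reports the Florence-2 gain as a relative improvement but does not name the baseline or the evaluation metric. For reference, with $s_{\text{base}}$ the score of the comparison model and $s_{\text{Docmatix}}$ the score after training on the Docmatix subset (both symbols are our own notation), a relative improvement is:

```latex
\Delta_{\text{rel}} = \frac{s_{\text{Docmatix}} - s_{\text{base}}}{s_{\text{base}}} \approx 0.20
```

For a purely hypothetical baseline score of 0.50, a 20% relative improvement corresponds to a score of 0.60, which is 10 points in absolute terms.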

HuggingFace’s release of Docmatix marks a significant advancement in the field of DocVQA, giving the open-source community a valuable resource for advancing model development and making documents more accessible. By enabling more accurate and comprehensive training, Docmatix could have a substantial impact on the future of document visual question answering and related technologies.

Conclusion:

The introduction of Docmatix represents a significant advancement in the field of Document Visual Question Answering. Its extensive scale and rigorous quality controls address the longstanding challenges of dataset development in this domain. For the market, this means enhanced model training capabilities and improved benchmarking for VLMs, potentially narrowing the performance gap between open-source and proprietary models. The dataset’s availability could lead to increased innovation and accessibility in document-based AI applications, setting a new standard for future developments in this area.