- MIRAGE introduces a new framework for Multi-Image Visual Question Answering (MIQA), addressing challenges in analyzing extensive image collections.

- The model builds on LLaVA with innovations including a compressive image encoder, a query-aware relevance filter, and augmented training with synthetic and real MIQA data.

- MIRAGE achieves up to an 11% accuracy improvement over closed-source models like GPT-4o and up to 3.4x improvements in efficiency compared to traditional multi-stage methods.

- The compressive image encoder reduces token intensity per image from 576 to 32, allowing the model to handle more images within the same context constraints.

- The query-aware relevance filter selects relevant images for analysis, enhancing accuracy.

- The model’s training includes both MIQA datasets and synthetic data, improving robustness across diverse scenarios.

- The Visual Haystacks (VHs) dataset used for benchmarking includes 880 single-needle and 1000 multi-needle question-answer pairs.

Main AI News:

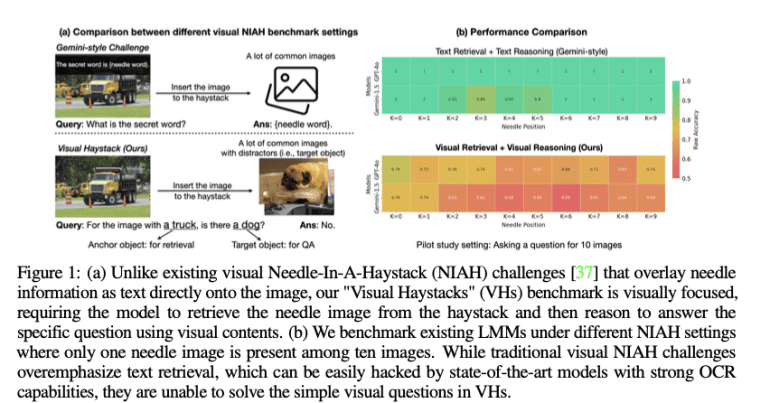

The task of Multi-Image Visual Question Answering (MIQA) poses a notable challenge in the visual question answering (VQA) domain, demanding accurate and contextually grounded responses from extensive image collections. While Large Multimodal Models (LMMs) like Gemini 1.5-pro and GPT-4V excel in analyzing individual images, they struggle significantly with large-scale queries spanning numerous images. This limitation impacts practical applications such as searching through large image databases, retrieving specific details from online content, and analyzing satellite imagery for environmental monitoring.

Current visual question answering approaches primarily concentrate on single-image contexts, which restricts their effectiveness for complex queries involving multiple images. Existing models face difficulties in efficiently retrieving and synthesizing relevant visual information from extensive datasets, often resulting in computational inefficiencies and diminished accuracy. These challenges are compounded by issues such as positional bias and difficulties in integrating disparate visual inputs.

In response, researchers from the University of California have introduced MIRAGE (Multi-Image Retrieval Augmented Generation), a groundbreaking framework designed specifically for MIQA. MIRAGE builds upon the LLaVA model, incorporating a series of advancements: a compressive image encoder, a query-aware relevance filter, and augmented training with both synthetic and real MIQA data. These enhancements enable MIRAGE to manage larger image contexts with improved efficiency and accuracy. The framework achieves up to an 11% improvement in accuracy over closed-source models like GPT-4o on the Visual Haystacks (VHs) benchmark, alongside a notable 3.4x increase in efficiency compared to conventional text-focused multi-stage methods.

MIRAGE’s compressive image encoding, utilizing a Q-former to reduce token intensity from 576 to 32 tokens per image, allows for processing a greater number of images within the same context constraints. The query-aware relevance filter, a single-layer MLP, assesses image relevance to the query, facilitating the selection of pertinent images for in-depth analysis. The model’s training incorporates both existing MIQA datasets and synthetic data from single-image QA sources, enhancing robustness across diverse MIQA scenarios. The VHs dataset, consisting of 880 single-needle and 1000 multi-needle question-answer pairs, offers a comprehensive evaluation framework for MIQA models.

Evaluation results indicate that MIRAGE significantly outperforms current models on the Visual Haystacks benchmark, with up to 11% greater accuracy for single-needle questions compared to closed-source alternatives like GPT-4o, and demonstrates remarkable improvements in efficiency. MIRAGE maintains superior performance as image set sizes increase, underscoring its capability to manage extensive visual contexts effectively.

Conclusion:

MIRAGE’s advancements in Multi-Image Visual Question Answering represent a significant leap forward in handling complex visual queries. By addressing key limitations of existing models, MIRAGE offers enhanced accuracy and efficiency, setting a new standard for large-scale visual retrieval and reasoning. This positions MIRAGE as a pivotal tool for industries requiring advanced image analysis capabilities, such as digital content management, environmental monitoring, and comprehensive data search applications. Its performance improvements highlight a trend towards more sophisticated and efficient multimodal AI solutions, potentially influencing future developments and investments in the field.