- The rapid advancement of AI systems heightens the need for effective governance to manage risks such as misuse and ethical harms.

- Current governance relies on training-compute thresholds, measured in floating-point operations (FLOP), to regulate AI systems, on the assumption that more compute correlates with greater risk.

- Cohere AI researchers argue that these compute thresholds are outdated and inadequate for managing the evolving risks of AI.

- The paper proposes a dynamic evaluation system that includes refining FLOP metrics, considering additional risk factors, and implementing adaptive thresholds.

- Greater transparency and standardization in reporting AI risks are recommended, focusing on real-world performance and potential hazards.

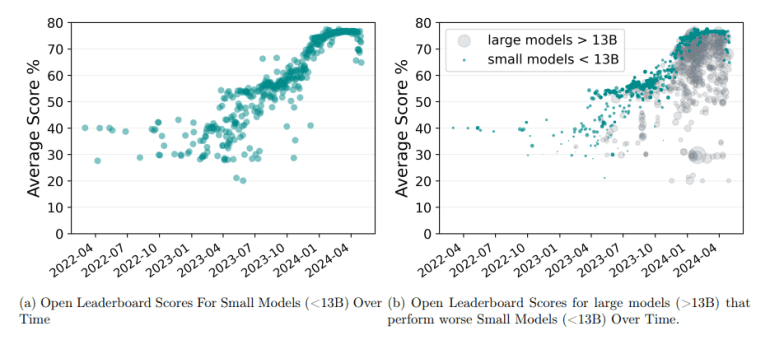

- Fixed compute thresholds often miss risks associated with smaller, highly optimized AI models, which can perform as well as larger models.

- Recent studies show that optimized smaller models can outperform larger ones on certain tasks, indicating the need for revised governance frameworks.

Main AI News:

As artificial intelligence systems advance at an unprecedented pace, ensuring their safe and ethical deployment has become a pressing concern for researchers and policymakers alike. Growing capabilities bring a range of risks, including potential misuse, ethical dilemmas, and unforeseen consequences. Addressing these challenges requires governance strategies that can anticipate and manage potential harms as AI technology scales and evolves.

Current governance strategies often rely on thresholds of training compute, measured in floating-point operations (FLOP), to regulate AI systems. The premise is that more compute correlates with greater risk, and this premise underpins policies such as the White House Executive Order on AI, which sets a reporting threshold of 10^26 operations, and the EU AI Act, which presumes systemic risk for general-purpose models trained with more than 10^25 FLOP. Both frameworks use compute thresholds to identify and monitor AI systems whose training exceeds a given level of computational intensity.
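To make the threshold mechanism concrete, here is a minimal Python sketch. It assumes the widely used rule of thumb that training a dense transformer costs roughly 6 × parameters × training tokens FLOP; actual regulatory accounting may differ. The 10^26 and 10^25 values are the training-compute thresholds in US Executive Order 14110 and the EU AI Act. The helper names and both model configurations are hypothetical, chosen only for illustration. The sketch also shows the concern raised in the paper: a fixed threshold only sees compute, so a small model lands orders of magnitude below both lines regardless of how capable it is.

```python
# Assumption: training FLOP ~= 6 * parameters * tokens (a common heuristic for
# dense transformers covering forward and backward passes), not a legal definition.
US_EO_THRESHOLD_FLOP = 1e26      # Executive Order 14110 reporting threshold
EU_AI_ACT_THRESHOLD_FLOP = 1e25  # EU AI Act presumption of systemic risk


def estimate_training_flop(n_params: float, n_tokens: float) -> float:
    """Rough dense-transformer training compute estimate: 6 * N * D."""
    return 6.0 * n_params * n_tokens


def thresholds_exceeded(training_flop: float) -> dict[str, bool]:
    """Report which fixed compute thresholds a training run crosses."""
    return {
        "US EO 14110 (1e26)": training_flop > US_EO_THRESHOLD_FLOP,
        "EU AI Act (1e25)": training_flop > EU_AI_ACT_THRESHOLD_FLOP,
    }


if __name__ == "__main__":
    # Hypothetical configurations, for illustration only.
    models = {
        "small (7B params, 2T tokens)": (7e9, 2e12),
        "large (1T params, 20T tokens)": (1e12, 2e13),
    }
    for name, (params, tokens) in models.items():
        flop = estimate_training_flop(params, tokens)
        print(f"{name}: {flop:.1e} FLOP -> {thresholds_exceeded(flop)}")
```

Under these assumptions the small configuration comes to about 8.4e22 FLOP and crosses neither threshold, while the large one comes to about 1.2e26 FLOP and crosses both. Nothing in the check looks at what either model can actually do, which is the gap the paper's proposed dynamic evaluation is meant to close.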

However, a recent paper from researchers at Cohere AI critically examines the efficacy of these compute thresholds as a governance tool. They argue that the current approach is too narrow and fails to address the full spectrum of risks associated with AI advancements. The researchers emphasize that the relationship between compute power and risk is highly uncertain and rapidly changing, making fixed thresholds an inadequate measure of potential harm.

To address these limitations, the Cohere AI paper proposes a more nuanced approach to AI governance. Instead of relying solely on static compute thresholds, the researchers advocate for a dynamic evaluation system that takes into account multiple factors influencing AI’s risk profile. This includes refining the use of FLOP as a metric, incorporating additional dimensions of AI performance and risk, and establishing adaptive thresholds that evolve in response to advancements in AI technology.

The proposed approach calls for greater transparency and standardization in reporting AI risks, ensuring that governance practices are aligned with the actual performance and potential hazards of AI systems. This comprehensive evaluation involves examining factors such as the quality of training data, optimization techniques, and specific applications of AI models. By doing so, the researchers aim to provide a more accurate assessment of potential risks, moving beyond the limitations of fixed compute thresholds.

The paper highlights that fixed compute thresholds often miss significant risks posed by smaller, highly optimized AI models. Empirical evidence suggests that advances in optimization techniques can make smaller models as capable, and potentially as risky, as larger ones. For instance, recent studies show that smaller models can outperform larger models on certain tasks, achieving scores of up to 77.15% on benchmark tests, roughly double the 38.59% average observed just two years earlier. This trend underscores the need for a revised approach to governance that better captures the nuances of model performance and risk.

In conclusion, the Cohere AI research underscores the inadequacies of current compute threshold-based governance and advocates for a more sophisticated framework. By considering a broader range of factors and implementing adaptive thresholds, policymakers can better manage the risks associated with advanced AI systems, ensuring that governance practices remain relevant and effective in an ever-evolving technological landscape.

Conclusion:

The research from Cohere AI highlights the limitations of traditional governance approaches that rely solely on fixed compute thresholds. As AI technology advances, a more dynamic and nuanced approach is necessary to address emerging risks effectively. For the market, this suggests a shift towards governance frameworks that adapt to evolving AI capabilities and incorporate a broader range of risk factors. Policymakers and industry leaders will need to embrace more sophisticated methods of evaluating AI systems to ensure effective risk management and maintain regulatory relevance in an increasingly complex technological landscape.