- Meta introduces SAM 2, the latest version of its Segment Anything Model.

- SAM 2 integrates video data for real-time object segmentation and tracking across frames.

- The model cuts interaction time to roughly one-third and improves accuracy over its predecessor.

- SAM 2 is versatile, applicable in creative industries, data annotation, scientific research, and medical diagnostics.

- The model’s code and weights are open-source under an Apache 2.0 license, promoting community collaboration.

- Meta has released the SA-V dataset, with about 51,000 videos and over 600,000 masks, for training and evaluating segmentation models.

- Key technical innovations include a memory mechanism and a promptable visual segmentation task.

Main AI News:

Meta has officially launched SAM 2, the latest evolution of its Segment Anything Model, promising a leap forward in real-time object segmentation across both images and videos. SAM 2 builds upon the success of its predecessor by introducing a unified approach that integrates video data, allowing for seamless and real-time object tracking and segmentation across frames. This advancement is achieved without the need for custom adaptations, thanks to SAM 2’s robust zero-shot generalization capabilities, making it adept at handling any object in diverse visual contexts.

One of SAM 2’s standout features is its enhanced efficiency. The model reduces interaction time by a factor of three compared to its predecessor, while delivering superior accuracy in both image and video segmentation. This efficiency is particularly valuable in applications where speed and precision are critical.

SAM 2’s applications span various industries. In the creative sector, it can facilitate new video effects, offering advanced capabilities for generative video models and expanding creative possibilities. For data annotation, SAM 2 accelerates visual data labeling, benefiting fields that depend on extensive datasets for training, such as autonomous driving and robotics.

The model also shows promise in scientific and medical research. It can segment moving cells in microscopic videos, aiding in research and diagnostics. Additionally, SAM 2’s ability to track objects in drone footage is useful for wildlife monitoring and environmental studies.

Reflecting Meta’s dedication to open science, SAM 2’s code and weights are released under an Apache 2.0 license, fostering collaboration and innovation within the AI community. Additionally, Meta has provided the SA-V dataset, a substantial collection of about 51,000 real-world videos and over 600,000 spatio-temporal masks, under a CC BY 4.0 license. This dataset significantly expands the available resources for training and evaluating segmentation models.

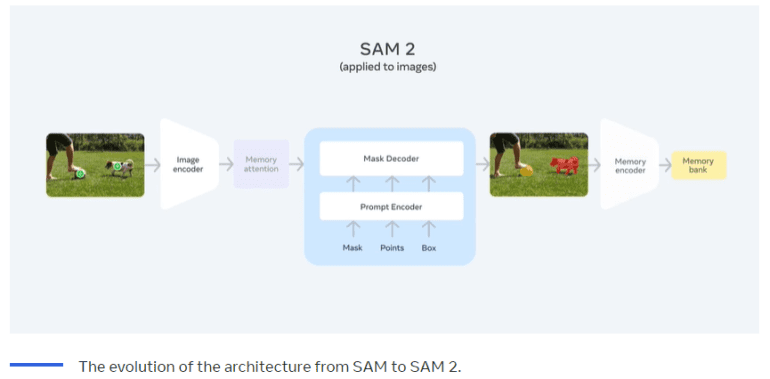

Technically, SAM 2 builds on SAM’s foundation with a memory mechanism that handles the added complexity of video. A memory encoder, a memory bank, and a memory attention module let the model carry information about an object from earlier frames into the current one, which helps it cope with object motion, deformation, and occlusion. The promptable visual segmentation task extends SAM’s prompting to video: a user marks an object on any frame, the model propagates the mask across the remaining frames, and further prompts can refine the result iteratively.
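To make the memory mechanism more concrete, here is a minimal toy sketch in Python with NumPy. It is not SAM 2's implementation: the names `ToyMemoryBank`, `toy_memory_encoder`, and `memory_attention` are hypothetical, the bank size is arbitrary, the attention has no learned weights, and a simple threshold stands in for the mask decoder. It only illustrates the general idea of conditioning the current frame's features on memories of earlier frames.

```python
from collections import deque

import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


class ToyMemoryBank:
    """FIFO store of per-frame memories (hypothetical, for illustration only)."""

    def __init__(self, max_frames=6):  # bank size is an arbitrary assumption
        self.frames = deque(maxlen=max_frames)

    def add(self, memory):
        # Oldest memory is dropped automatically once the bank is full.
        self.frames.append(np.asarray(memory, dtype=np.float64))

    def stacked(self):
        """Return all stored memories as one (tokens, dim) array, or None."""
        if not self.frames:
            return None
        return np.concatenate(list(self.frames), axis=0)


def toy_memory_encoder(frame_feats, mask):
    """Fuse frame features with the predicted mask (here: simple gating)."""
    return frame_feats * mask[:, None]


def memory_attention(frame_feats, bank):
    """Cross-attend current-frame features to stored memories (no learned weights)."""
    memory = bank.stacked()
    if memory is None:  # first frame: nothing to attend to yet
        return frame_feats
    dim = frame_feats.shape[-1]
    scores = frame_feats @ memory.T / np.sqrt(dim)  # scaled dot-product
    weights = softmax(scores, axis=-1)
    return frame_feats + weights @ memory  # residual connection


# Usage: process four fake "frames" of 5 tokens with 8-dimensional features.
rng = np.random.default_rng(0)
bank = ToyMemoryBank(max_frames=2)
for _ in range(4):
    feats = rng.normal(size=(5, 8))
    conditioned = memory_attention(feats, bank)
    mask = (conditioned.sum(axis=1) > 0).astype(float)  # stand-in for a mask decoder
    bank.add(toy_memory_encoder(feats, mask))
```

Because the bank is a bounded queue, only the most recent frames influence the current prediction in this sketch; a real system would also need learned projections and some way to keep the originally prompted frames available.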

Conclusion:

Meta’s launch of SAM 2 represents a significant advancement in real-time object segmentation technology, offering notable improvements in efficiency and accuracy. By integrating video data and enhancing zero-shot generalization, SAM 2 expands the potential applications across multiple industries, including creative media, data annotation, and scientific research. The open-source release of the model and its comprehensive dataset further positions Meta as a leader in fostering innovation and collaboration within the AI community. This strategic move is likely to drive competitive advantages for businesses leveraging advanced segmentation capabilities and could set new standards in the market for visual data processing and analysis.