- TII has launched FalconMamba 7B, an attention-free AI model.

- FalconMamba 7B is built on the Mamba state space architecture, which diverges from traditional transformers.

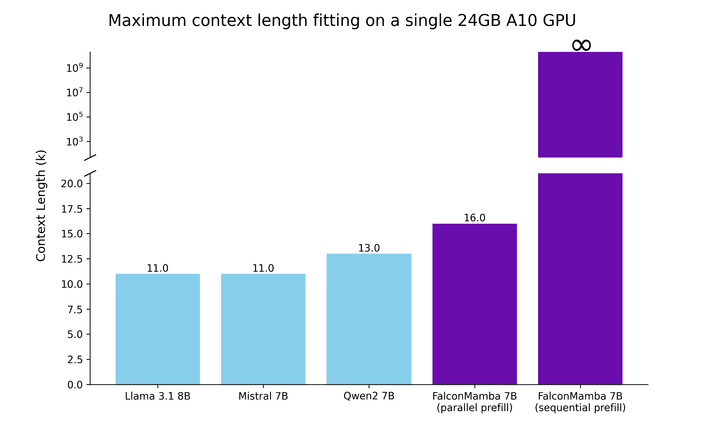

- The model handles long sequences efficiently and fits on a single 24GB A10 GPU.

- Per-token generation time stays constant regardless of context size.

- Trained on about 5,500 GT (gigatokens) of high-quality data, performing strongly on benchmarks.

- Compatible with the Hugging Face transformers library and supports bitsandbytes quantization.

- TII also released an instruction-tuned version for enhanced task performance.

- The model is widely accessible to researchers and industry professionals.

Main AI News:

The Technology Innovation Institute (TII) in Abu Dhabi has launched FalconMamba 7B, a new language model built without attention. Billed as the first strong attention-free 7B model, FalconMamba 7B targets a key weakness of existing transformer architectures: the cost of processing long sequences. It is released under the TII Falcon License 2.0 and is openly available on Hugging Face to researchers and developers worldwide.

FalconMamba 7B is based on the Mamba architecture introduced in the paper “Mamba: Linear-Time Sequence Modeling with Selective State Spaces,” with additional RMS normalization layers added to keep training stable at scale. Mamba moves away from traditional transformer models, whose attention mechanism makes computation and memory demands grow with sequence length. As a state space model, FalconMamba 7B can process arbitrarily long sequences without any increase in the memory needed to store context, which lets it fit on a single A10 24GB GPU.
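RMS normalization rescales each activation vector by its root mean square instead of subtracting a mean as LayerNorm does. A minimal pure-Python sketch of the standard formula, y_i = g_i · x_i / sqrt(mean(x²) + ε), follows; the function name `rms_norm` and the epsilon default are illustrative assumptions, not taken from FalconMamba's code.

```python
import math
from typing import Sequence


def rms_norm(x: Sequence[float], weight: Sequence[float], eps: float = 1e-5) -> list[float]:
    """Hypothetical helper: divide x by its root mean square, then apply a per-feature weight."""
    if len(x) != len(weight):
        raise ValueError("x and weight must have the same length")
    # Mean of squared activations; unlike LayerNorm, no mean is subtracted first.
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [w * v * inv_rms for v, w in zip(x, weight)]


# With unit weights the output has a root mean square of (almost exactly) 1,
# and the ratio between features is preserved.
out = rms_norm([3.0, 4.0], [1.0, 1.0])
```

Because only a scale is computed, RMS normalization is slightly cheaper than LayerNorm while still keeping activation magnitudes in check.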

A standout feature of FalconMamba 7B is that its token generation time stays constant regardless of context size, a significant advantage over transformer models. Because a Mamba model keeps only its fixed-size recurrent state, it avoids the growth in memory and per-token generation time that transformers incur as their key-value cache grows linearly with context.
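The contrast can be made concrete with a toy model. The sketch below is a heavily simplified, hypothetical stand-in for a state space layer: a diagonal linear recurrence h_t = a ⊙ h_{t-1} + b ⊙ x_t with output y_t = Σ c ⊙ h_t. Real Mamba layers make these parameters input-dependent ("selective") and operate on far larger tensors. The class names and the uniform-average "attention" are illustrative only; the point is that each recurrent step touches a fixed-size state, whereas an attention-style cache gains one entry per token.

```python
from dataclasses import dataclass, field


@dataclass
class ToyRecurrentLayer:
    """Hypothetical diagonal linear recurrence; the state size never changes."""
    a: list[float]  # per-channel decay
    b: list[float]  # per-channel input weight
    c: list[float]  # per-channel output weight
    state: list[float] = field(init=False)

    def __post_init__(self) -> None:
        self.state = [0.0] * len(self.a)

    def step(self, x: float) -> float:
        # Update every channel of the fixed-size state: O(state size) work per token.
        self.state = [ai * h + bi * x for ai, bi, h in zip(self.a, self.b, self.state)]
        return sum(ci * h for ci, h in zip(self.c, self.state))


@dataclass
class ToyKVCache:
    """Attention-style cache for comparison: one stored entry per processed token."""
    entries: list[float] = field(default_factory=list)

    def step(self, x: float) -> float:
        self.entries.append(x)
        # Toy "attention": uniform average over all cached entries, O(context length) work.
        return sum(self.entries) / len(self.entries)


layer = ToyRecurrentLayer(a=[0.9, 0.5], b=[1.0, 1.0], c=[0.5, 0.5])
cache = ToyKVCache()
for token in range(1000):
    layer.step(float(token))
    cache.step(float(token))

print(len(layer.state))    # 2: fixed, however many tokens were processed
print(len(cache.entries))  # 1000: grows linearly with context length
```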

Trained on roughly 5,500 GT (gigatokens) of data, drawn mainly from RefinedWeb plus high-quality technical and code data from public sources, FalconMamba 7B posts strong benchmark results, including scores of 33.36 on IFEval, 19.88 on BBH, and 3.63 on MATH Level 5. These results highlight its general capabilities alongside its efficiency on long sequences.

FalconMamba 7B can also fit longer sequences on a single 24GB A10 GPU than comparable transformer models, and it maintains steady generation throughput without any increase in CUDA peak memory. This makes it well suited to applications that process long inputs.
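A back-of-the-envelope calculation shows why the cache matters on a 24GB card. The dimensions below are hypothetical, chosen only to be roughly 7B-scale: a transformer with 32 layers, hidden size 4096 and full multi-head attention, and a Mamba-style model with 64 layers, inner width 8192, state size 16 and convolution width 4. Two-byte (fp16/bf16) values are assumed. These are not the published configurations of FalconMamba 7B or any specific transformer, and the estimate ignores weights and activations.

```python
BYTES_PER_VALUE = 2  # assumption: fp16/bf16 storage
GIB = 1024 ** 3


def kv_cache_bytes(seq_len: int, n_layers: int = 32, hidden: int = 4096) -> int:
    """Keys plus values for every layer and every cached token (hypothetical sizes)."""
    return 2 * n_layers * hidden * seq_len * BYTES_PER_VALUE


def ssm_state_bytes(n_layers: int = 64, d_inner: int = 8192,
                    d_state: int = 16, d_conv: int = 4) -> int:
    """Recurrent state plus rolling convolution buffer per layer; note there is no seq_len term."""
    return n_layers * d_inner * (d_state + d_conv) * BYTES_PER_VALUE


for seq_len in (4_096, 32_768, 131_072):
    print(f"{seq_len:>7} tokens: KV cache {kv_cache_bytes(seq_len) / GIB:6.1f} GiB, "
          f"SSM state {ssm_state_bytes() / GIB:.3f} GiB")
```

With these assumed sizes, the cache alone grows from 2 GiB at 4,096 tokens to 16 GiB at 32,768 tokens, while the recurrent state stays at 20 MiB at every length.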

FalconMamba 7B is fully compatible with the Hugging Face transformers library (version >4.45.0) and supports bitsandbytes quantization, which lets it run on GPUs with less memory. This makes it accessible to a wide range of users, from academia to industry.
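A minimal loading sketch, assuming a CUDA GPU, a transformers release that includes FalconMamba, and the `bitsandbytes` and `accelerate` packages. `tiiuae/falcon-mamba-7b` is the repository id TII uses on the Hugging Face Hub; the helper names `load_falcon_mamba_4bit` and `generate` are hypothetical. Imports sit inside the function so the snippet can be imported without those heavy dependencies.

```python
def load_falcon_mamba_4bit(model_id: str = "tiiuae/falcon-mamba-7b"):
    """Hypothetical helper: load FalconMamba 7B with 4-bit bitsandbytes quantization."""
    # Imported lazily: requires transformers, bitsandbytes, accelerate and a CUDA device.
    from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=BitsAndBytesConfig(load_in_4bit=True),
        device_map="auto",  # let accelerate place the quantized weights on the GPU
    )
    return tokenizer, model


def generate(prompt: str, max_new_tokens: int = 50) -> str:
    """Hypothetical helper: tokenize a prompt, generate a continuation, decode it."""
    tokenizer, model = load_falcon_mamba_4bit()
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

For example, `generate("Hello, my name is")` would download and load the quantized model, then return the prompt followed by up to 50 generated tokens. In a real application you would load the model once and reuse it rather than reloading on every call.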

TII also offers an instruction-tuned version of FalconMamba, fine-tuned on an additional 5 billion tokens to improve performance on instruction-following tasks.

Conclusion:

The release of FalconMamba 7B by TII represents a significant shift in the AI market. By avoiding the memory and compute costs that attention imposes on traditional transformer models, this technology improves the efficiency and scalability of AI applications, particularly those involving long sequences. The model’s availability on Hugging Face and its ability to run on modest hardware make it a powerful tool for a broad audience, from academia to industry. This development could accelerate innovation across sectors that rely on AI, pushing the market toward more cost-effective and resource-efficient solutions.