- AI alignment with human preferences is crucial for maximizing the efficiency and safety of large language models (LLMs).

- Underspecification in AI alignment, where the link between preference data and training objectives isn’t clearly defined, can lead to suboptimal performance.

- Traditional methods of aligning LLMs, using contrastive learning and preference pair datasets, often provide inconsistent learning signals.

- Researchers have developed two innovative approaches: Contrastive Learning from AI Revisions (CLAIR) and Anchored Preference Optimization (APO).

- CLAIR refines model outputs by generating minimally contrasting preference pairs, improving the learning signal.

- APO offers precise control over the alignment process by adjusting output probabilities based on how the model’s outputs compare with the preference data.

- CLAIR and APO significantly improved the Llama-3-8B-Instruct model, narrowing its performance gap with GPT-4-turbo by 45%.

Main AI News:

As large language models (LLMs) become more capable, aligning them with human preferences is essential for making them both useful and safe. Alignment aims to ensure that a model’s responses are accurate and consistent with human expectations and values. In practice, this relies on two ingredients: preference data, which shows the model which outputs are preferred, and an alignment objective, which determines how the training process uses that data. Both shape how well the resulting model performs and meets user expectations.

A significant challenge in AI model alignment is underspecification, where the connection between preference data and training objectives is not clearly defined. This lack of clarity can result in suboptimal performance, as the model may struggle to learn effectively from the provided data.

Underspecification occurs when the two outputs in a preference pair differ in ways that are irrelevant to the intended outcome. These irrelevant variations make it difficult for the model to focus on the aspects that truly matter. Existing alignment methods also tend to overlook how the model’s own quality compares with the quality of the preference data, which can cause training to degrade the model’s capabilities.

Current techniques for aligning LLMs, such as those based on contrastive learning objectives and preference pair datasets, have made significant progress but remain imperfect. Typically, these methods generate two outputs from the model and use a judge (another AI model or a human) to select the preferred one. Because the criteria behind that choice are not always clear or consistent, the resulting preference signal can be noisy. A noisy learning signal hinders training, since the model does not receive clear guidance on how to adjust its outputs to better match human preferences.
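The judge-based procedure described above can be sketched roughly as follows. This is a minimal illustration, not the researchers’ code: `judged_preference_pair`, `toy_generate`, and `toy_judge` are hypothetical names, and the toy judge deliberately uses a criterion unrelated to correctness to show how an unclear preference signal can arise.

```python
from typing import Callable, Dict


def judged_preference_pair(
    prompt: str,
    generate: Callable[[str], str],
    judge: Callable[[str, str, str], int],
) -> Dict[str, str]:
    """Sample two independent outputs and let a judge pick the preferred one."""
    first = generate(prompt)
    second = generate(prompt)
    # The judge returns 0 if it prefers `first`, 1 if it prefers `second`.
    if judge(prompt, first, second) == 0:
        chosen, rejected = first, second
    else:
        chosen, rejected = second, first
    return {"prompt": prompt, "chosen": chosen, "rejected": rejected}


# Toy stand-ins (assumptions) so the sketch runs without a real model.
_canned = iter([
    "Paris is the capital of France.",
    "The capital is Paris, a city known for its food, art, and museums.",
])


def toy_generate(prompt: str) -> str:
    return next(_canned)


def toy_judge(prompt: str, a: str, b: str) -> int:
    # Prefers the longer answer: a criterion unrelated to correctness.
    return 0 if len(a) >= len(b) else 1


pair = judged_preference_pair(
    "What is the capital of France?", toy_generate, toy_judge
)
print(pair["chosen"])
```

Both answers here are correct, yet one is labeled "rejected" because of its length. The pair therefore teaches the model something about verbosity rather than about accuracy.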

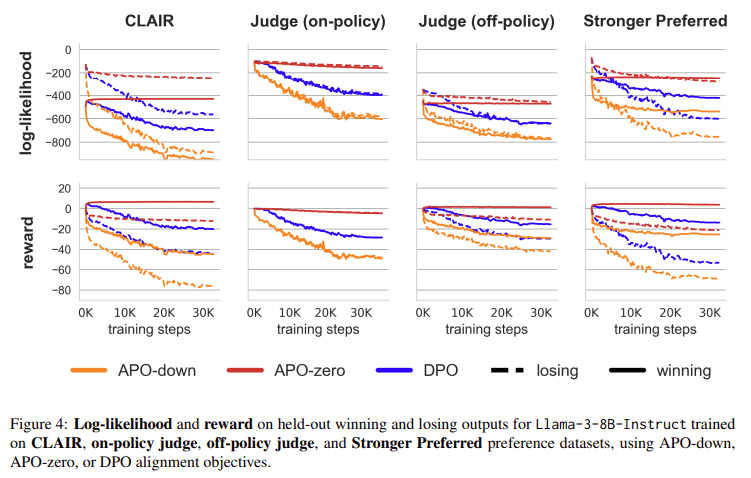

To address these challenges, researchers from Ghent University – imec, Stanford University, and Contextual AI have developed two complementary approaches: Contrastive Learning from AI Revisions (CLAIR) and Anchored Preference Optimization (APO). CLAIR is a data-creation method that generates minimally contrasting preference pairs by slightly revising a model’s output to create a preferred response. Because the winning and losing outputs differ only where it matters, the pair provides a more precise learning signal. APO, for its part, is a family of alignment objectives that offers greater control over the training process. By explicitly accounting for the relationship between the model and the preference data, APO aims for a more stable and effective alignment process.

CLAIR operates by first generating a suboptimal output from the target model, then using a more powerful model, like GPT-4-turbo, to revise this output into a superior one. The revision process introduces only slight changes, ensuring that the contrast between the two outputs is focused on the most relevant aspects.
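The two steps described above (generate with the target model, then revise with a stronger model) can be sketched as below. This is a hedged illustration under stated assumptions: `clair_pair`, `toy_target`, and `toy_reviser` are hypothetical names, and the toy reviser is a simple string replacement standing in for a call to a stronger model such as GPT-4-turbo.

```python
from typing import Callable, Dict


def clair_pair(
    prompt: str,
    target_generate: Callable[[str], str],
    revise: Callable[[str, str], str],
) -> Dict[str, str]:
    """Build one preference pair by revising the target model's own output."""
    # Step 1: the target model produces the (possibly flawed) losing output.
    losing = target_generate(prompt)
    # Step 2: a stronger model minimally revises it into the winning output.
    winning = revise(prompt, losing)
    return {"prompt": prompt, "chosen": winning, "rejected": losing}


# Toy stand-ins (assumptions) so the sketch runs without real models.
def toy_target(prompt: str) -> str:
    return "The capital of France is Lyon."


def toy_reviser(prompt: str, draft: str) -> str:
    # A real reviser would be an LLM asked to change as little as possible.
    return draft.replace("Lyon", "Paris")


pair = clair_pair("What is the capital of France?", toy_target, toy_reviser)
print(pair)
```

The only difference between the two outputs in this pair is the corrected fact, so the contrast points directly at what needs to improve.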

This method marks a significant departure from traditional techniques, which might rely on a judge to choose the preferred output from two independently generated responses. CLAIR delivers a more transparent and effective learning signal during training by creating preference pairs with minimal yet significant contrasts.
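One simple way to make "minimal contrast" concrete is to measure how much two outputs overlap. The sketch below uses token-level Jaccard similarity as an illustrative proxy; this is an assumption made for the example, not necessarily the measure the researchers used, and the example sentences are invented.

```python
def jaccard_similarity(a: str, b: str) -> float:
    """Share of distinct whitespace-separated tokens that two texts have in common."""
    tokens_a = set(a.lower().split())
    tokens_b = set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 1.0  # two empty texts are treated as identical
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


original = "The capital of France is Lyon."

# A revision of the original output: only the wrong fact changes.
revised = "The capital of France is Paris."

# An independently generated answer: correct, but worded very differently.
independent = "Paris, a city famous for its museums, is the French capital."

revised_sim = jaccard_similarity(original, revised)
independent_sim = jaccard_similarity(original, independent)
print(revised_sim > independent_sim)
```

A revised pair scores much higher on overlap than an independently generated pair, which is the sense in which the contrast is minimal: few differences, each of them relevant.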

Anchored Preference Optimization (APO) complements CLAIR by providing fine-grained control over the alignment process. APO adjusts the likelihood of winning and losing outputs based on how the model’s own quality compares with the preference data. For example, the APO-zero variant increases the probability of winning outputs while reducing the probability of losing ones, which is particularly beneficial when the model’s outputs generally fall short of the winning outputs. Conversely, APO-down decreases the probability of both winning and losing outputs, pushing the losing outputs down further, which can be advantageous when the model’s outputs are already better than the winning outputs. This level of control allows researchers to tailor the alignment objective to the specific model and dataset at hand.
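The behavior of the two variants can be illustrated with a small numerical sketch. The article does not give the formulas, so the forms below are an assumption: they follow the per-example losses exposed as the `apo_zero` and `apo_down` loss types in Hugging Face TRL’s `DPOTrainer`, written in plain Python, and should be checked against the paper before use. Here `r_w` and `r_l` are the implicit rewards of the winning and losing outputs, i.e. `beta` times the log-probability ratio between the trained model and a frozen reference model; `beta=0.1` is an illustrative default.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def implicit_reward(logp_policy: float, logp_ref: float, beta: float = 0.1) -> float:
    """beta * log(pi_theta(y|x) / pi_ref(y|x)), given the two log-probabilities."""
    return beta * (logp_policy - logp_ref)


def apo_zero_loss(r_w: float, r_l: float) -> float:
    # Lower when the winning reward rises and the losing reward falls.
    return (1.0 - sigmoid(r_w)) + sigmoid(r_l)


def apo_down_loss(r_w: float, r_l: float) -> float:
    # Lower when the winning reward falls, as long as the losing reward
    # falls even further (the margin r_w - r_l stays large).
    return sigmoid(r_w) + (1.0 - sigmoid(r_w - r_l))


# At the start of training the policy equals the reference, so both rewards are 0.
start_zero = apo_zero_loss(0.0, 0.0)
start_down = apo_down_loss(0.0, 0.0)

# APO-zero rewards pushing the winner up and the loser down.
better_zero = apo_zero_loss(1.0, -1.0)

# APO-down prefers both rewards going down, with the loser going down more.
both_down = apo_down_loss(-1.0, -3.0)
winner_up = apo_down_loss(1.0, -1.0)  # same margin, but the winner was pushed up

print(better_zero < start_zero, both_down < winner_up < start_down)
```

Both variants widen the gap between winning and losing outputs; they differ in where they anchor the winning output’s probability, which is the control the APO objectives are meant to provide.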

The success of CLAIR and APO was demonstrated by aligning the Llama-3-8B-Instruct model using a variety of datasets and alignment objectives. The results were remarkable: CLAIR, combined with the APO-zero objective, led to a 7.65% improvement in performance on the MixEval-Hard benchmark, which measures model accuracy across a range of complex queries. This improvement represents a significant step towards closing the performance gap between Llama-3-8B-Instruct and GPT-4-turbo, reducing the difference by 45%. These findings underscore the importance of minimally contrasting preference pairs and customized alignment objectives in improving AI model performance.
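The two headline figures can be related with simple arithmetic. The sketch below assumes that 7.65% refers to absolute points on MixEval-Hard and that 45% is the share of the original gap to GPT-4-turbo that was closed; the implied gap sizes are derived from those two numbers and are not reported in this article.

```python
improvement_points = 7.65      # reported gain on MixEval-Hard
fraction_of_gap_closed = 0.45  # reported reduction of the gap to GPT-4-turbo

# If the gain closed 45% of the gap, the original gap follows by division.
implied_original_gap = improvement_points / fraction_of_gap_closed
implied_remaining_gap = implied_original_gap - improvement_points

print(round(implied_original_gap, 2), round(implied_remaining_gap, 2))
```

Under these assumptions, the aligned model started roughly 17 points behind GPT-4-turbo on this benchmark and ended a little over 9 points behind.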

Conclusion:

The introduction of CLAIR and APO represents a notable advance in AI model alignment, offering more refined and effective methods for training LLMs. For the market, this points to potential gains in the capabilities of AI-driven applications, particularly in areas where nuanced and precise language understanding is critical. If these methods are widely adopted, companies that rely on AI could see improved user interactions, higher satisfaction, and a competitive edge. The ability to better align AI with human preferences is poised to be a key differentiator in the next generation of AI products and services.