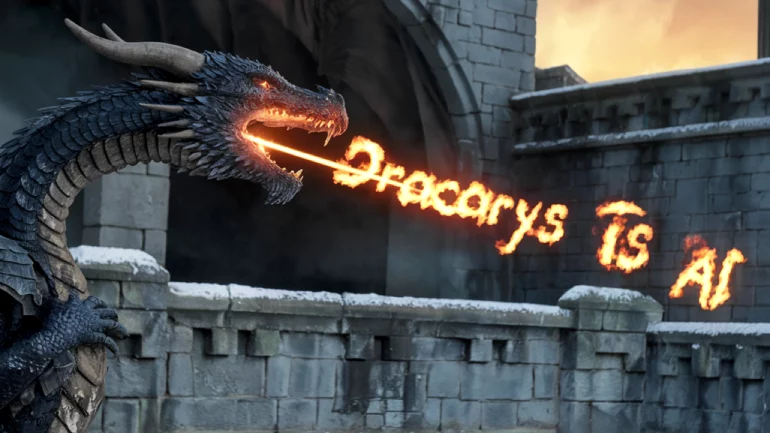

- Abacus.ai introduces Dracarys, a new family of open-source LLMs optimized for coding tasks.

- The name Dracarys comes from a command in “Game of Thrones”; the models themselves are designed to enhance coding capabilities.

- It builds on the success of Abacus.ai’s previous LLM, Smaug-72B, which was a general-purpose model.

- The Dracarys recipe combines fine-tuning and advanced techniques to improve the performance of open-source LLMs.

- Abacus.ai reports that the recipe has already improved Qwen-2 72B and Llama-3.1 70B.

- Dracarys offers a robust open-source alternative to proprietary models like Anthropic’s Claude 3.5.

- Performance benchmarks reveal notable increases in coding scores after applying the Dracarys recipe.

Main AI News:

Abacus.ai is turning up the heat in artificial intelligence with its latest innovation, “Dracarys.” Inspired by the powerful command from HBO’s “Game of Thrones,” Dracarys is a new family of open-source large language models (LLMs) engineered specifically for coding tasks.

Known for its cutting-edge AI tools, Abacus.ai previously introduced Smaug-72B, a general-purpose LLM named after the dragon in J.R.R. Tolkien’s “The Hobbit.” Dracarys, however, is focused on optimizing coding performance. The first release in this line targets models in the 70B parameter class, with the “Dracarys recipe” combining fine-tuning and advanced techniques to enhance the coding capabilities of open-source LLMs.

Bindu Reddy, CEO and co-founder of Abacus.ai, highlighted that the Dracarys recipe has already improved models like Qwen-2 72B and Llama-3.1 70B. While platforms like GitHub Copilot, Tabnine, and Replit have been pioneers in leveraging LLMs for code generation, Dracarys stands out by offering a fine-tuned version of Meta’s Llama 3.1 model, presenting a strong open-source alternative to closed systems like Anthropic’s Claude 3.5.

Benchmark results illustrate the recipe’s effect on coding performance. According to LiveBench benchmarks, applying the Dracarys recipe raises coding scores for both base models. The meta-llama-3.1-70b-instruct turbo model’s score rose from 32.67 to 35.23, a gain of 2.56 points, while the Qwen2-72B-instruct model saw a larger jump from 32.38 to 38.95, a gain of 6.57 points.
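To put the two LiveBench results on a common footing, the short Python sketch below computes the absolute and relative gain for each model from the four scores quoted above. The `scores` dictionary and the `score_gain` helper are illustrative names introduced here; they are not part of LiveBench or of any Abacus.ai tooling.

```python
# Coding scores quoted in the article (LiveBench), as (before, after) the Dracarys recipe.
# The dictionary layout and helper name are assumptions made for this illustration.
scores = {
    "meta-llama-3.1-70b-instruct turbo": (32.67, 35.23),
    "Qwen2-72B-instruct": (32.38, 38.95),
}


def score_gain(before, after):
    """Return (absolute gain in points, relative gain in percent), rounded to 2 places."""
    absolute = after - before
    relative = absolute / before * 100
    return round(absolute, 2), round(relative, 2)


gains = {model: score_gain(before, after) for model, (before, after) in scores.items()}

for model, (points, percent) in gains.items():
    print(f"{model}: +{points} points ({percent}% relative to the base score)")
```

In relative terms, that works out to roughly 7.8% for the Llama-based model and roughly 20.3% for the Qwen-based model, each measured against its own pre-recipe score.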

Conclusion:

Abacus.ai’s introduction of Dracarys adds a new option to the AI-driven coding tools market. By raising the coding performance of open-source LLMs, Dracarys gives developers a stronger open-source alternative to proprietary models and broadens access to capable coding models. This development could accelerate the adoption of open-source solutions in coding, challenge the dominance of closed models, and stimulate further innovation in AI-powered development environments. As a result, the market may see a shift toward more collaborative and accessible AI tools that drive productivity and efficiency in coding tasks.